Introduction

automesh is an open-source Rust software program that uses a segmentation,

typically generated from a 3D image stack,

to create a finite element mesh,

composed either of hexahedral (volumetric)

or triangular (isosurface) elements.

automesh converts between

segmentation formats (.npy, .spn)

and

mesh formats (.exo, .inp, .mesh, .stl, .vtk).

automesh can defeature voxel domains,

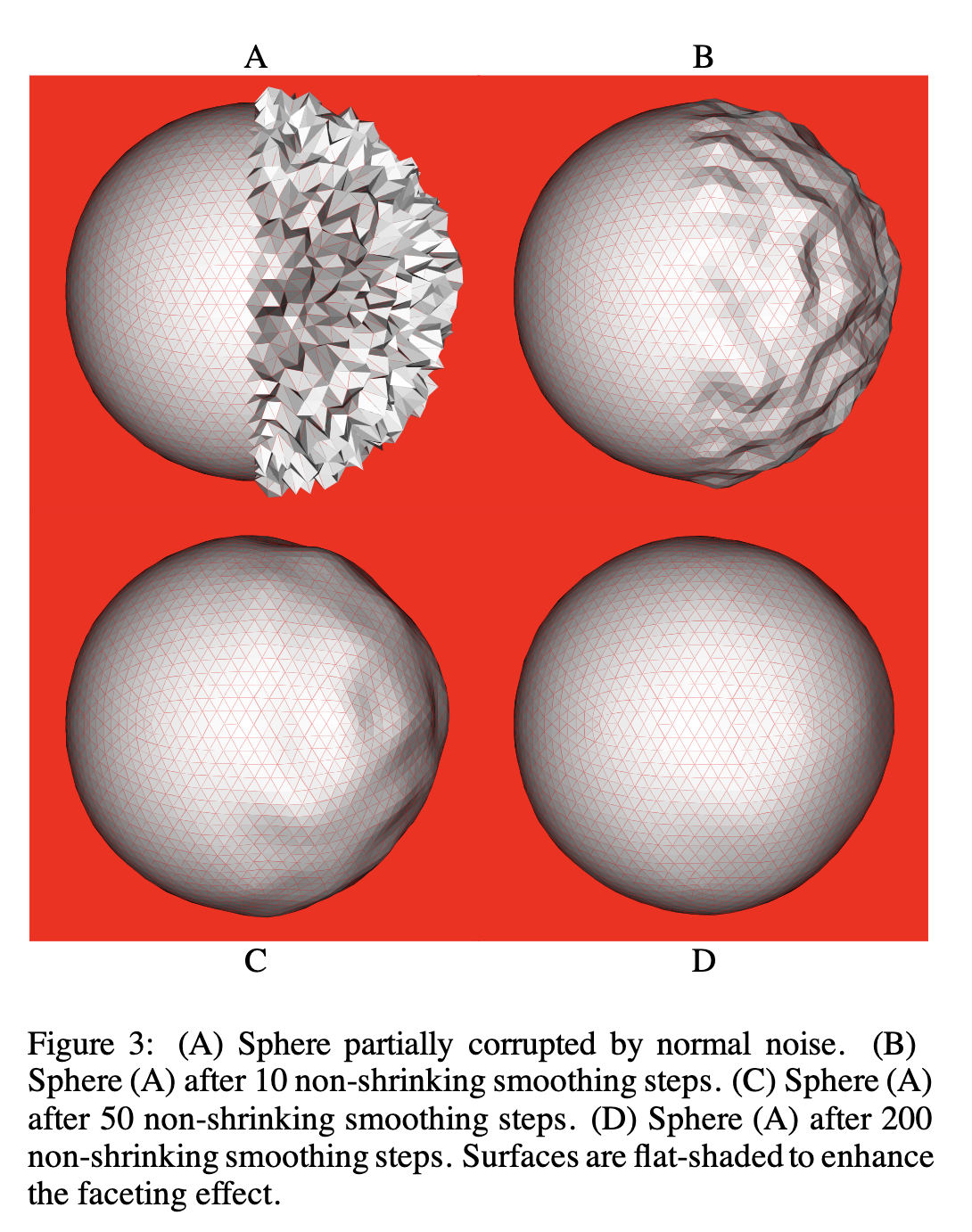

apply Laplacian and Taubin smoothing,

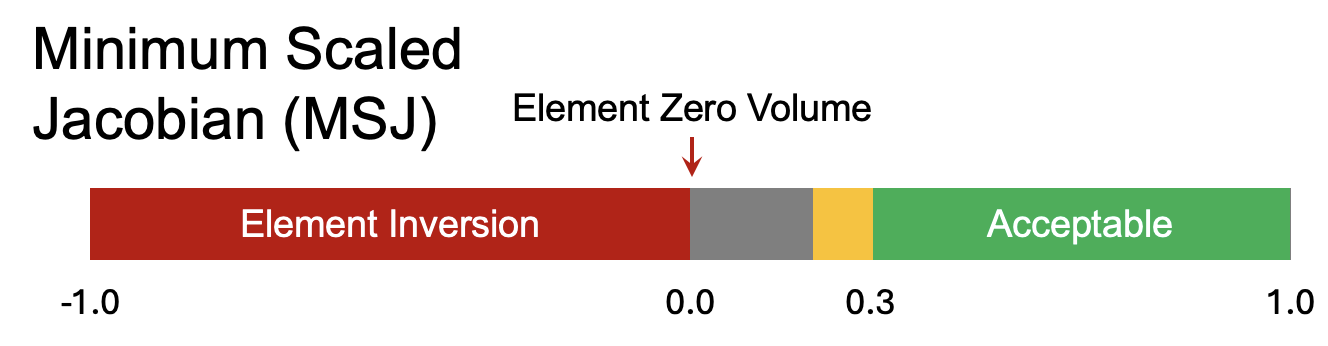

and output mesh quality metrics.

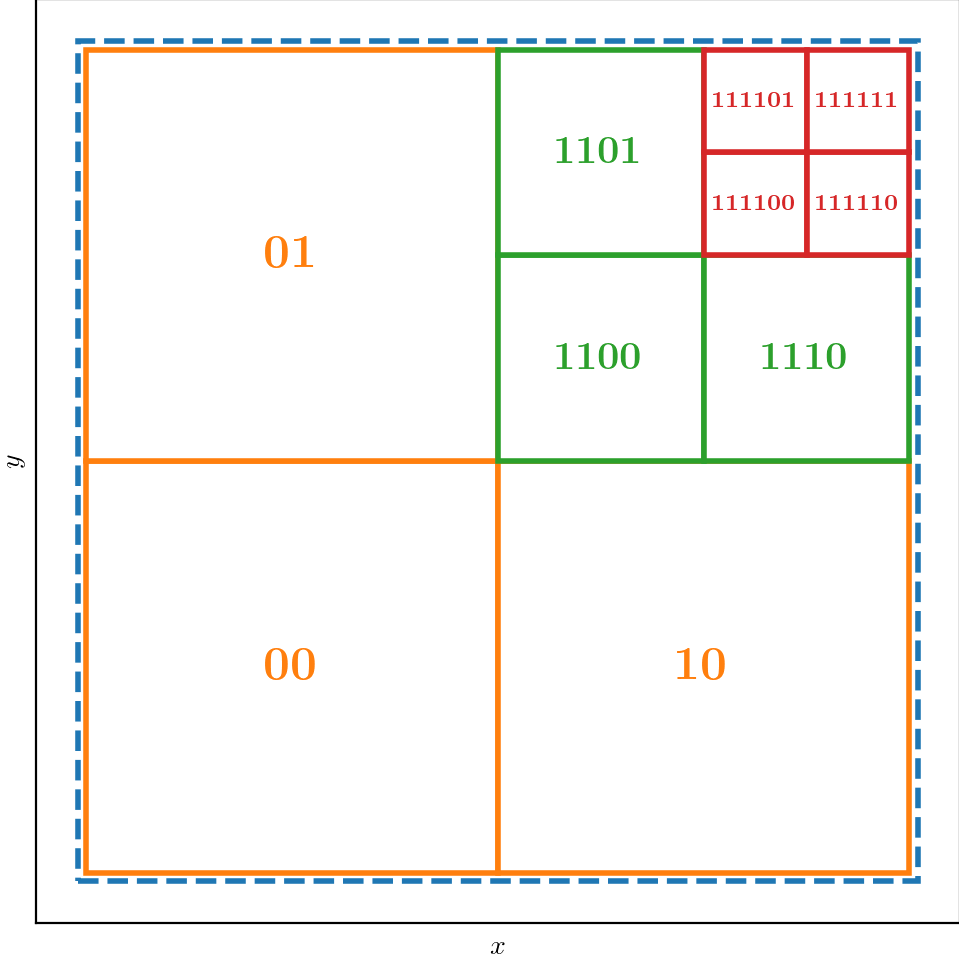

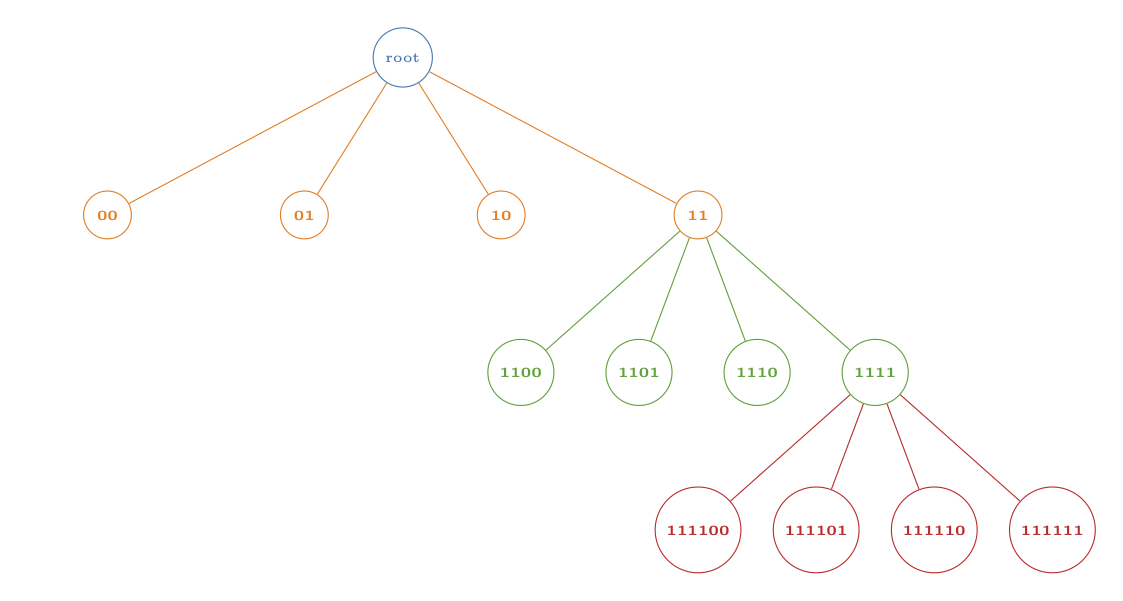

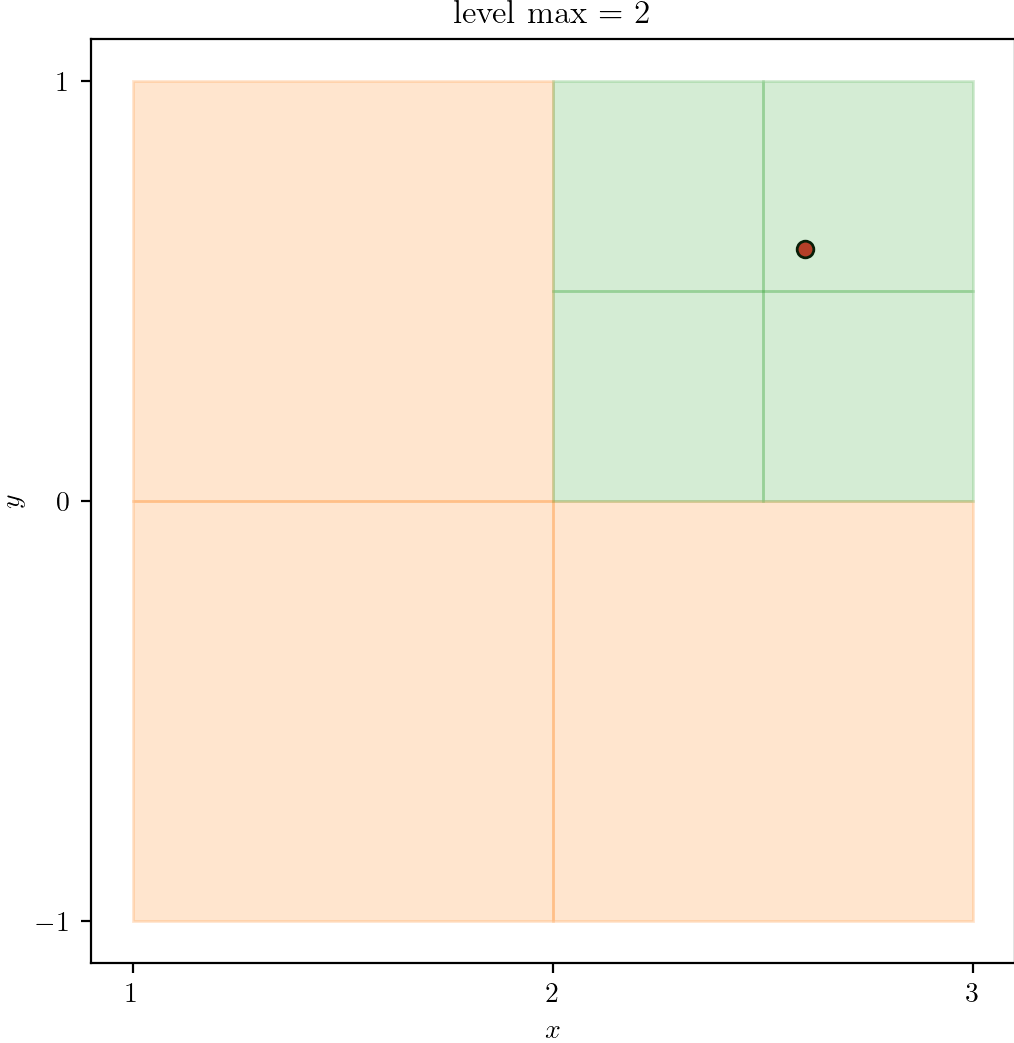

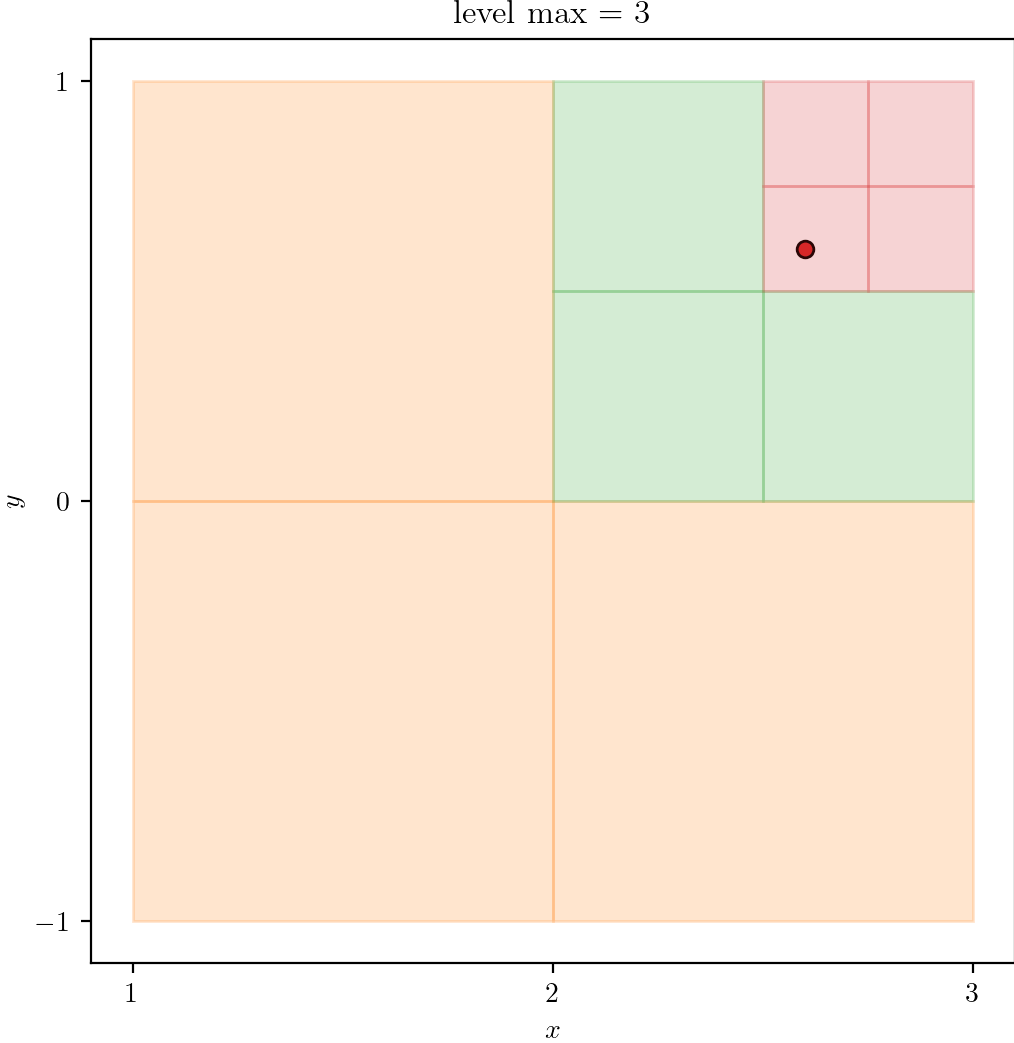

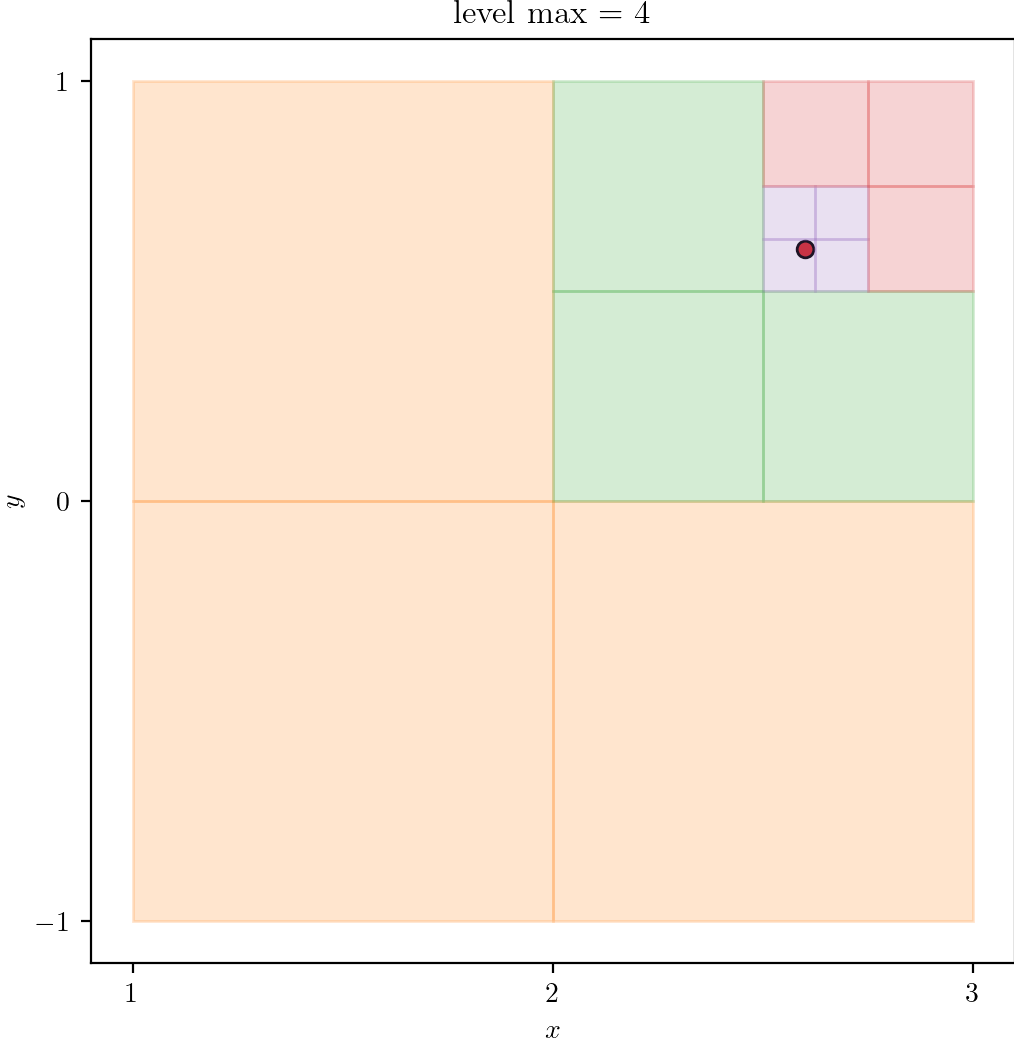

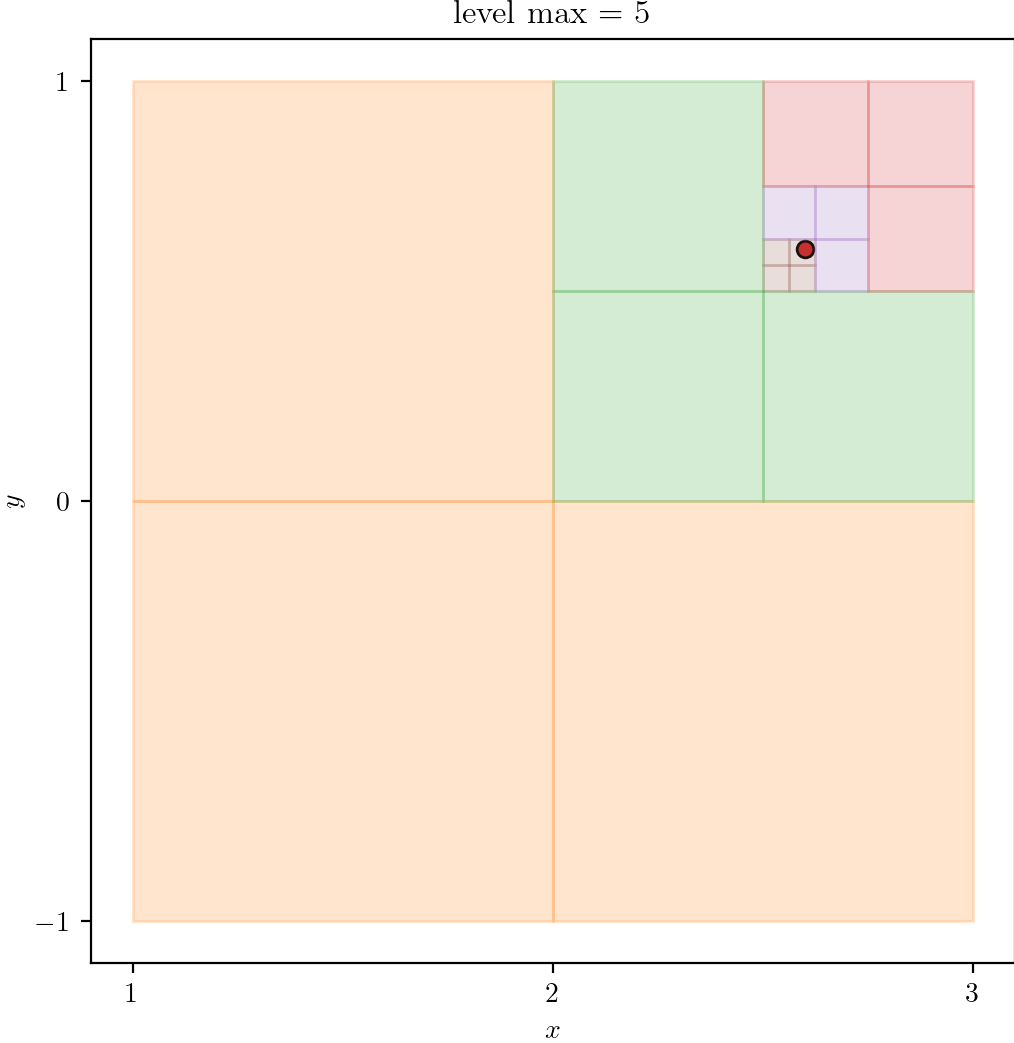

automesh uses an internal octree for fast performance.

Segmentation

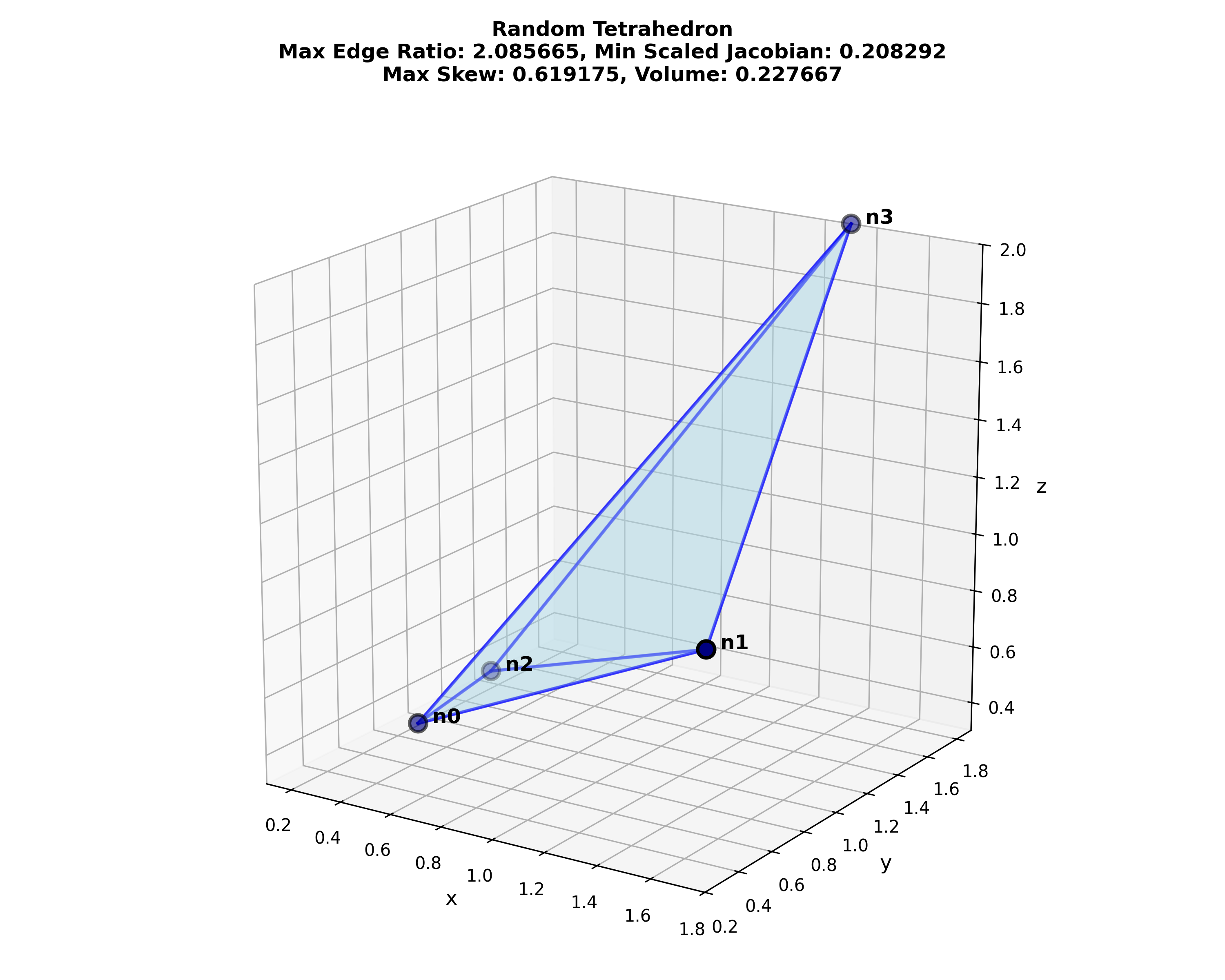

Segmentation is the process of categorizing pixels that compose a digital image into a class that represents some subject of interest. For example, in the image below, the image pixels are classified into classes of sky, trees, cat, grass, and cow.

Figure: Example of semantic segmentation, from Li et al. [1]

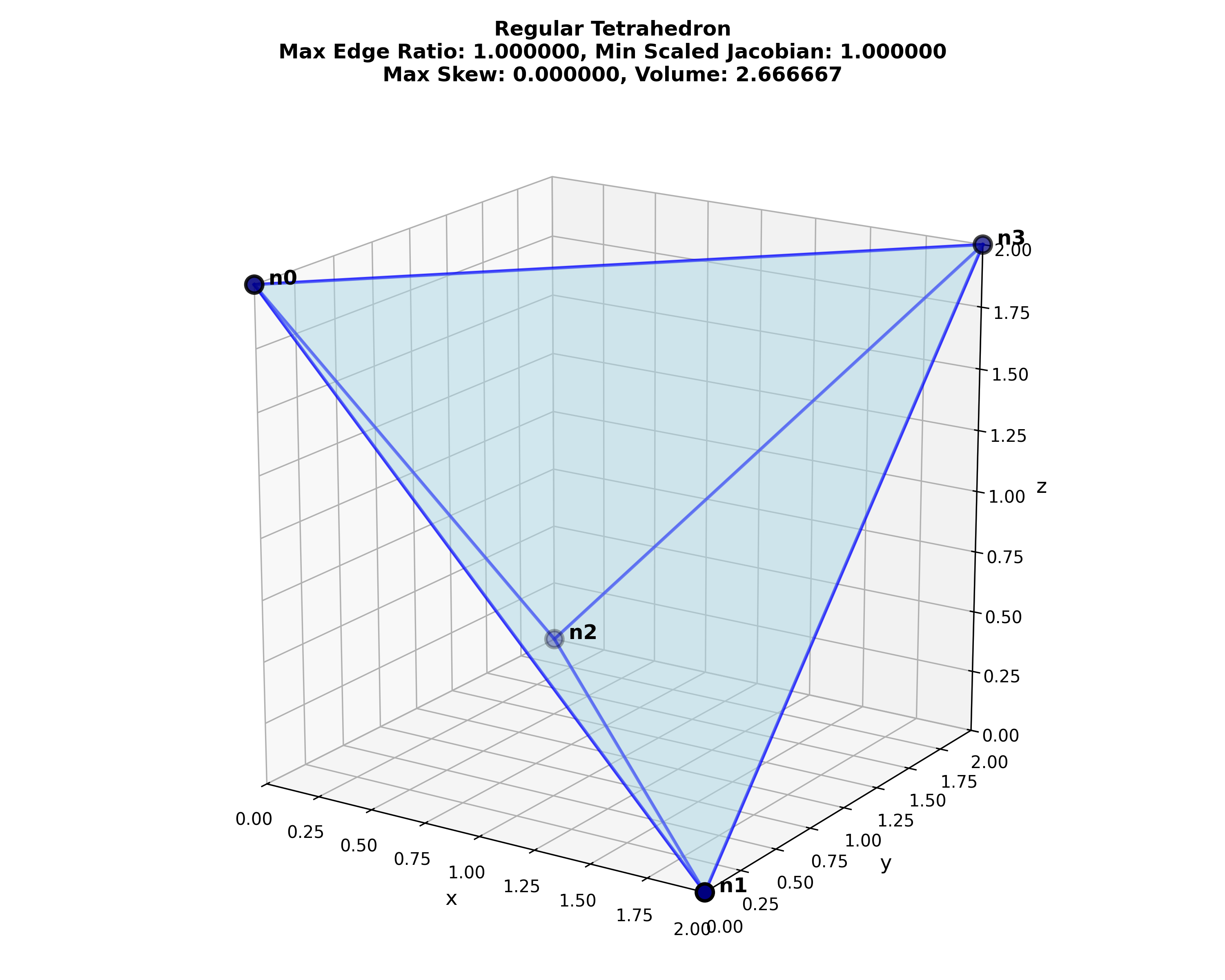

- Semantic segmentation does not differentiate between objects of the same class.

- Instance segmentation does differentiate between objects of the same class.

These two concepts are shown below:

Figure: Distinction between semantic segmentation and instance segmentation, from Lin et al. [2]

Both segmentation types, semantic and instance, can be used with automesh. However, automesh operates on a 3D segmentation, not a 2D segmentation, as present in a digital image. To obtain a 3D segmentation, two or more images are stacked to compose a volume.

The structured volume of a stack of pixels composes a volumetric unit called a voxel. A voxel, in the context of this work, has the same dimensions in the x and y directions as the pixel in the image space, and has a z dimension equal to the stack interval distance between image slices. All pixels are rectangular, and all voxels are cuboid.
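As a minimal sketch of this stacking, the NumPy snippet below builds a volume from three small 2D label images and derives the voxel dimensions. The image sizes, pixel sizes, and slice interval are hypothetical values chosen for illustration; they are not taken from any dataset or from automesh.

```python
import numpy as np

# Three hypothetical 2D segmented slices, each 4 rows by 5 columns.
slices = [np.zeros((4, 5), dtype=np.uint8) for _ in range(3)]
slices[1][1:3, 2:4] = 1  # mark a small region in the middle slice

# Stack the slices along a new leading axis to form the 3D volume.
volume = np.stack(slices, axis=0)

# Hypothetical pixel size (x, y) and slice interval (z), in millimeters.
pixel_x, pixel_y = 0.5, 0.5
slice_interval = 2.0

# The voxel inherits x and y from the pixel, and z from the slice interval.
voxel_size = (pixel_x, pixel_y, slice_interval)
voxel_volume = pixel_x * pixel_y * slice_interval

print(volume.shape)   # (3, 4, 5): slices, rows, columns
print(voxel_size)     # (0.5, 0.5, 2.0)
print(voxel_volume)   # 0.5
```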

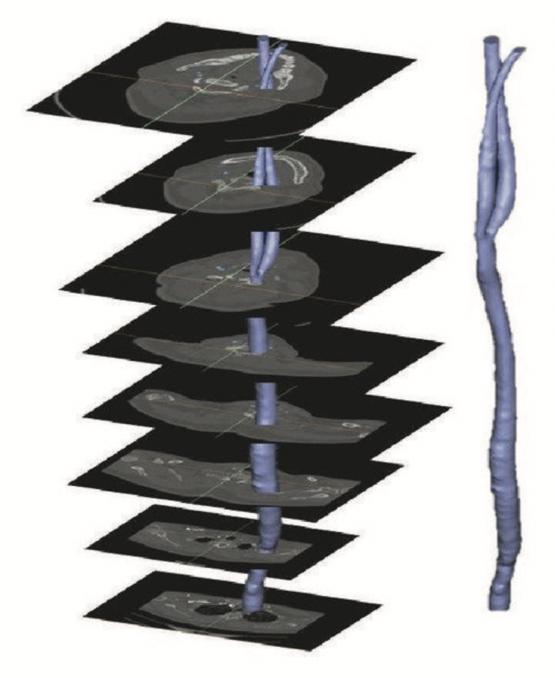

The figure below illustrates the concept of stacked images:

Figure: Example of stacking several images to create a 3D representation, from Bit et al. [3]

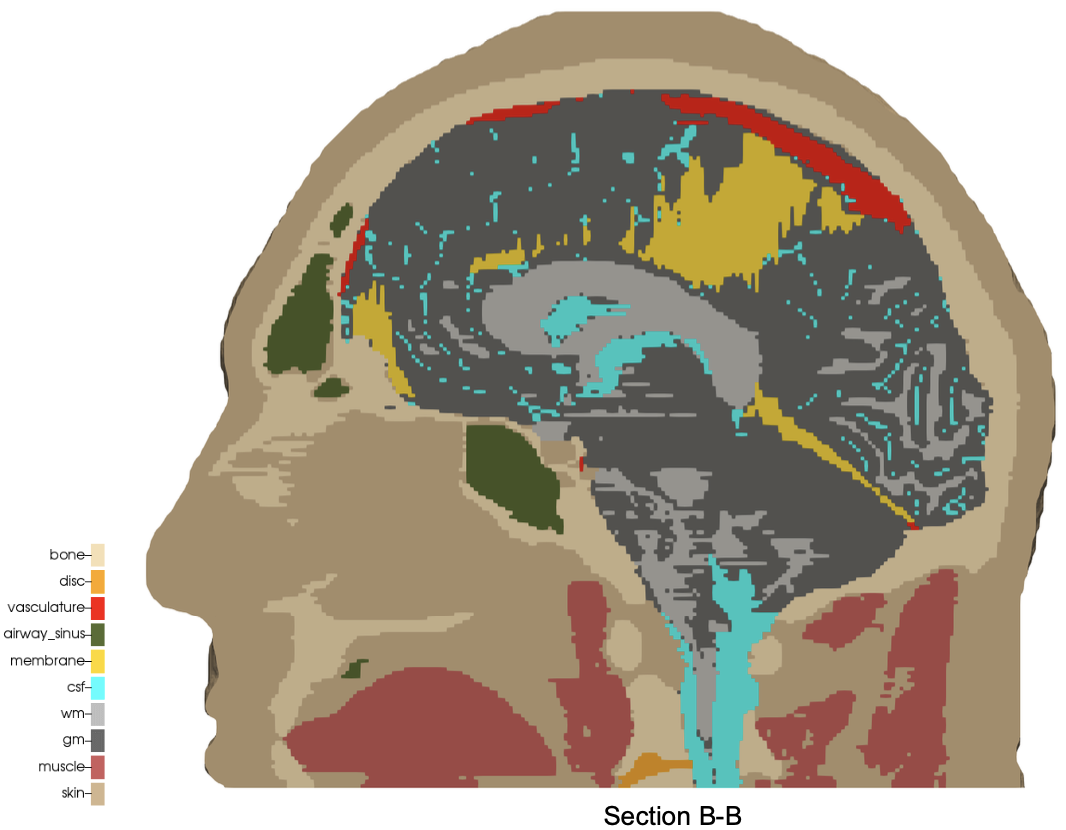

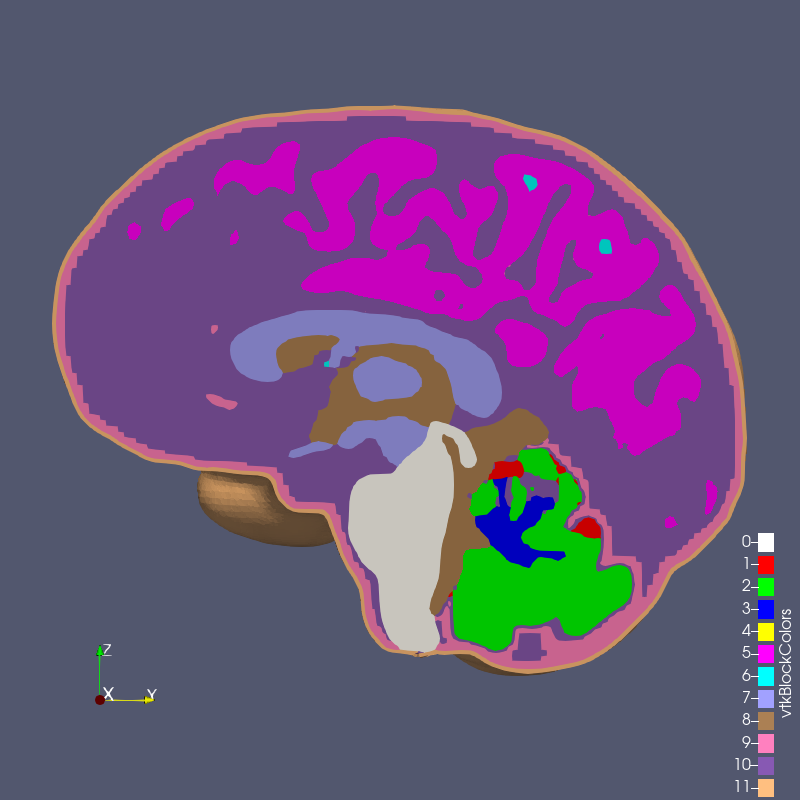

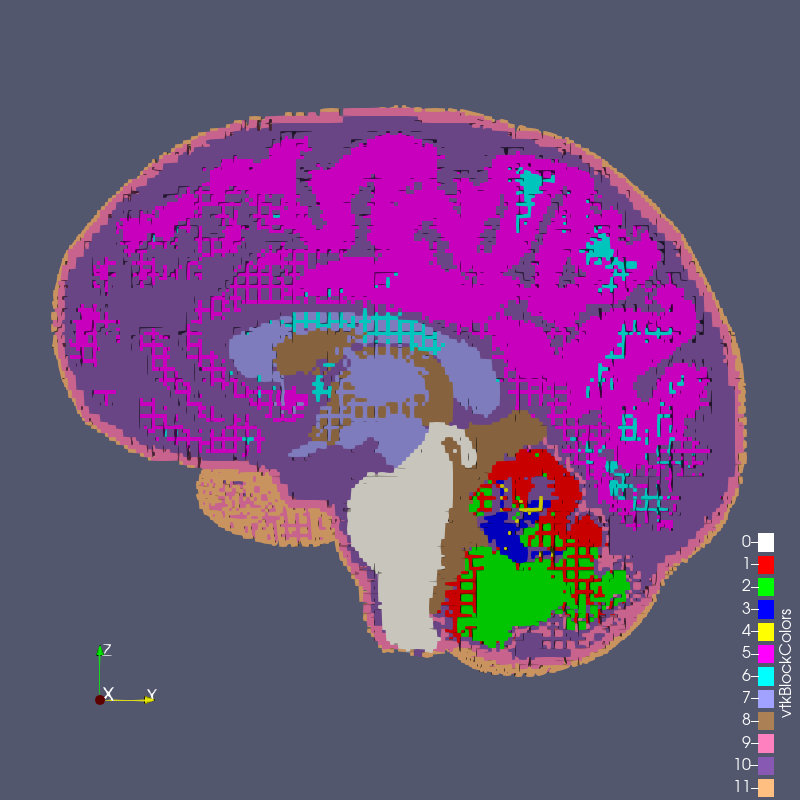

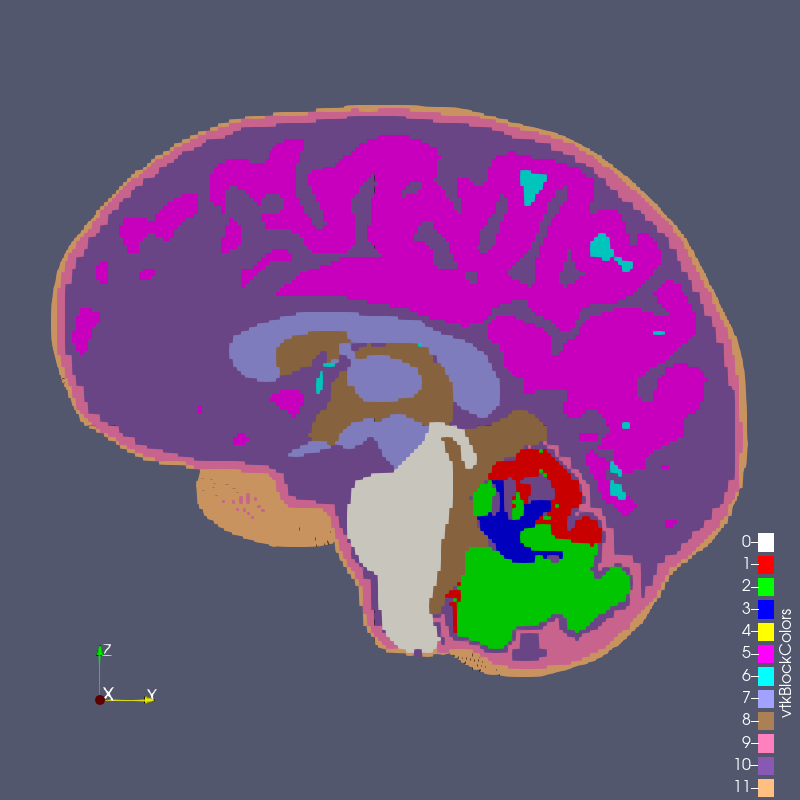

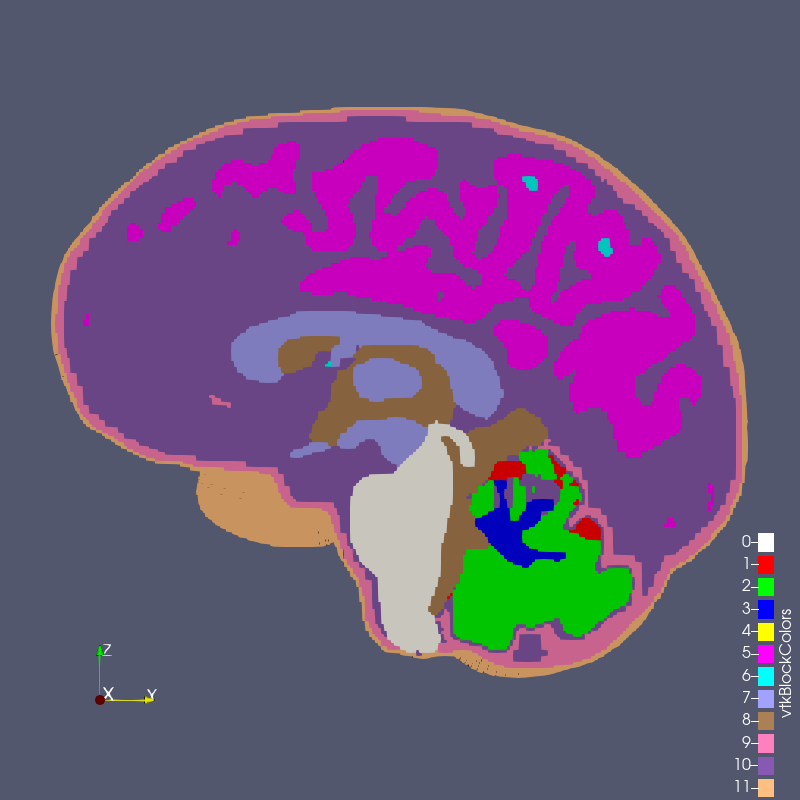

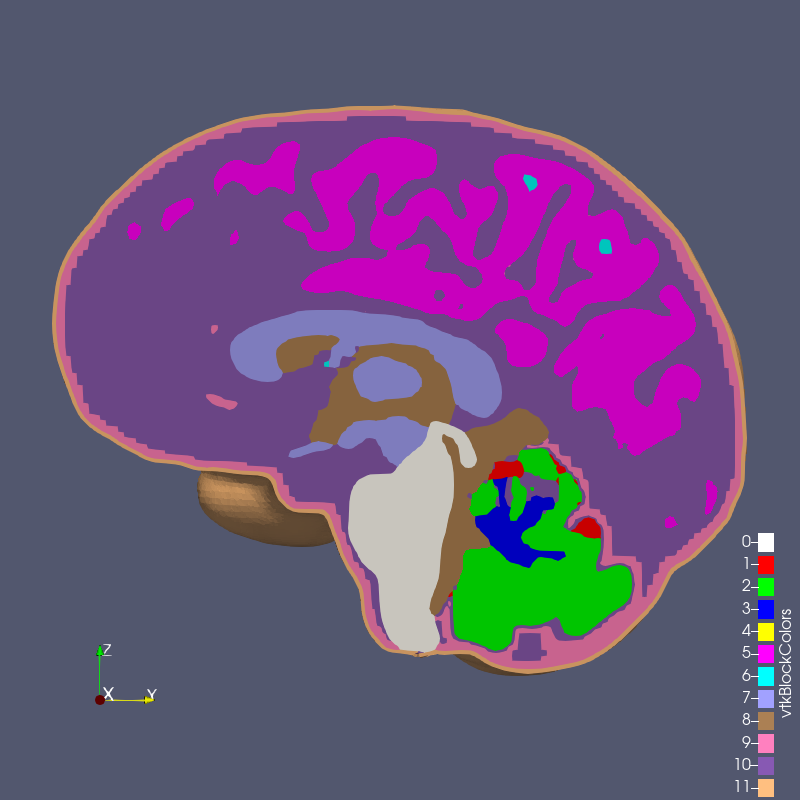

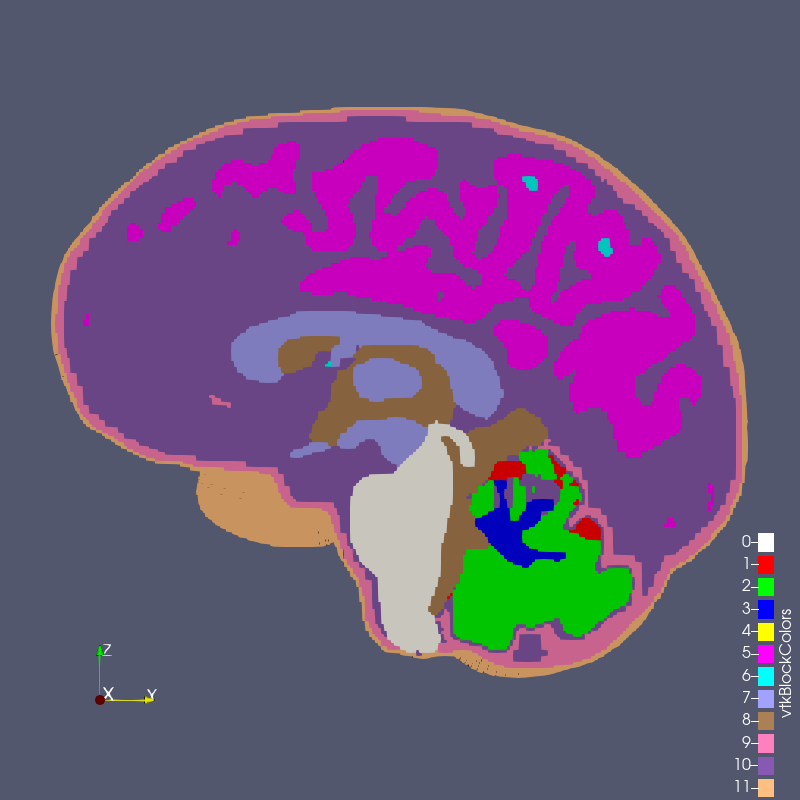

The digital image sources are frequently medical images, obtained by CT or MR, though automesh can be used for any subject that can be represented as a stacked segmentation. Anatomical regions are classified into categories. For example, in the image below, ten unique integers have been used to represent

bone, disc, vasculature, airway/sinus, membrane, cerebral spinal fluid, white matter, gray matter, muscle, and skin.

Figure: Example of a 3D voxel model, segmented into 10 categories, from Terpsma et al. [4]

Given a 3D segmentation, for any image slice that composes it, the pixels have been classified

into categories that are designated with unique, non-negative integers.

The integer values are limited to 256 = 2^8 distinct categories (0 through 255), since the uint8 data type is specified.

A practical example of a range could be [0, 1, 2, 3, 4]. The integers do not need to be sequential,

so a range of [4, 251, 2, 0, 42] is also valid, but not conventional.
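The limits of the uint8 type and the set of categories present in a segmentation can be inspected with NumPy. The label arrays below are hypothetical examples, not data from automesh.

```python
import numpy as np

# The uint8 type spans 0 through 255, for 256 possible category labels.
info = np.iinfo(np.uint8)
print(info.min, info.max)  # 0 255

# Hypothetical labels: sequential and non-sequential sets are both acceptable.
sequential = np.array([0, 1, 2, 3, 4], dtype=np.uint8)
scattered = np.array([4, 2, 0, 42, 42, 4], dtype=np.uint8)

# np.unique recovers the sorted set of categories present in a segmentation.
labels = np.unique(scattered)
print(labels.tolist())  # [0, 2, 4, 42]
```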

Segmentations are frequently serialized (saved to disk) as either a NumPy (.npy) file

or a SPN (.spn) file.

An SPN file is a text (human-readable) file that contains a single column of non-negative integer values. Each integer value identifies the segmentation category of one voxel.

Axis order (for example,

x, y, then z; or, z, y, x, etc.) is not implied by the SPN structure;

so additional data, typically provided through a configuration file, is

needed to uniquely interpret the pixel tile and voxel stack order

of the data in the SPN file.
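To see why the axis order matters, the sketch below reads the same flat column of values under two different ordering assumptions. The column contents and grid size are hypothetical, and neither interpretation is presented as the one automesh uses.

```python
import numpy as np

# A hypothetical SPN-like column of 12 labels for a 2 x 3 x 2 voxel grid.
column = np.arange(12, dtype=np.uint8)
nelx, nely, nelz = 2, 3, 2

# Interpretation 1: x varies fastest, then y, then z.
x_fastest = column.reshape((nelz, nely, nelx))  # indexed [z, y, x]

# Interpretation 2: z varies fastest, then y, then x.
z_fastest = column.reshape((nelx, nely, nelz)).transpose(2, 1, 0)  # indexed [z, y, x]

# The same voxel location (x=1, y=0, z=0) receives a different label.
print(x_fastest[0, 0, 1])  # 1
print(z_fastest[0, 0, 1])  # 6
```

Both arrays have the same shape and hold the same values, yet they describe different volumes, which is why the ordering must be supplied alongside the file.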

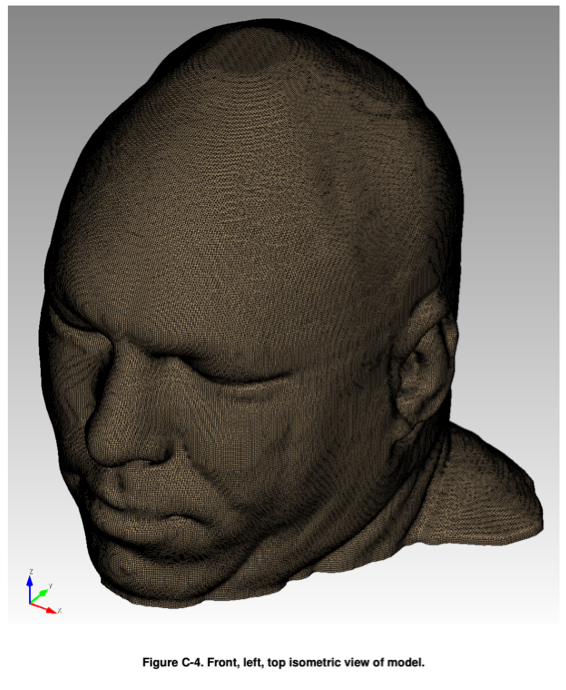

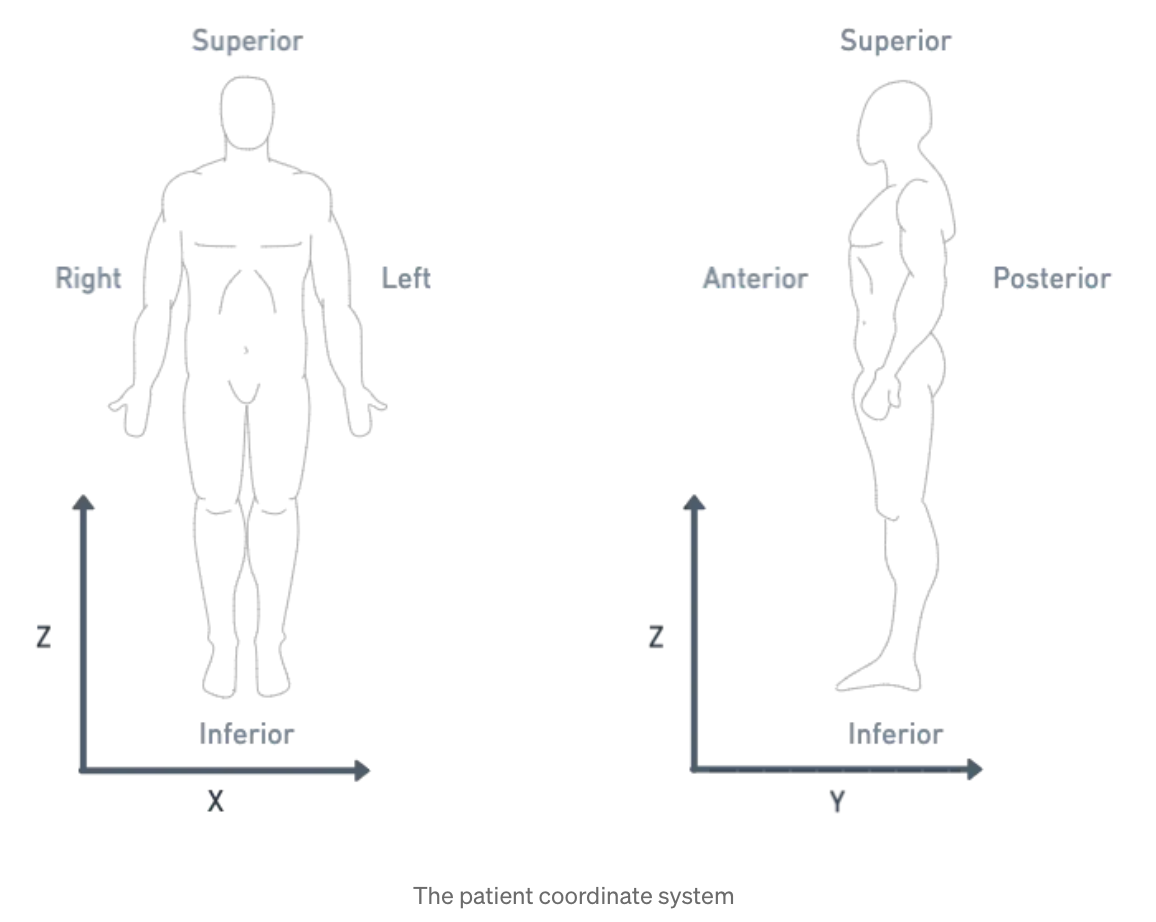

For subjects that are human anatomy, we use the Patient Coordinate System (PCS), which directs the

x, y, and z axes to the left, posterior, and superior, as shown below:

| Patient Coordinate System: | Left, Posterior, Superior (x, y, z) |
|---|---|

Figure: Illustration of the patient coordinate system, left figure from Terpsma et al. [4] and right figure from Sharma. [5]

Finite Element Mesh

The main automesh file output type is .exo, which refers to the EXODUS II file format, or simply "Exodus" format.

Other mesh output types, such as .inp, .mesh, and .vtk, are also available.

EXODUS II is a model developed to store and retrieve data for finite element analyses. It is used for preprocessing (problem definition), postprocessing (results visualization), as well as code-to-code data transfer. An EXODUS II data file is a random access, machine independent binary file. [6]

EXODUS II depends on the Network Common Data Form (NetCDF) library.

NetCDF is a public domain database library that provides low-level data storage. The NetCDF library stores data in eXternal Data Representation (XDR) format, which provides machine independency.

EXODUS II library functions provide a map between finite element data objects and NetCDF dimensions, attributes, and variables.

EXODUS II data objects:

- Initialization Data
  - Number of nodes
  - Number of elements
  - optional informational text
  - etc.
- Model - static objects (i.e., objects that do not change over time)
  - Nodal coordinates
  - Element connectivity
  - Node sets
  - Side sets
- optional Results
  - Nodal results
  - Element results
  - Global results

Note: automesh will use the Initialization Data and Model sections; it will not use the Results section.

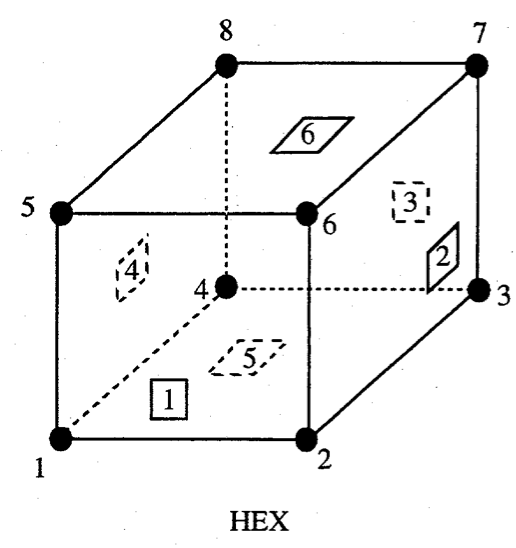

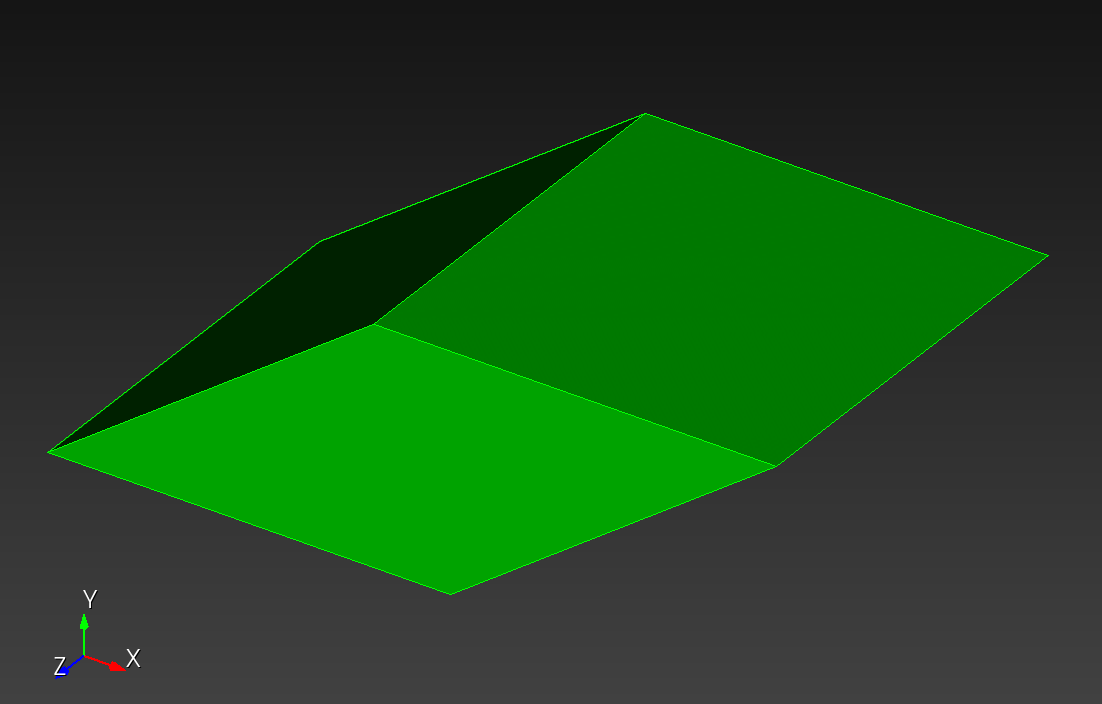

We use the Exodus II convention for a hexahedral element local node numbering:

Figure: Exodus II hexahedral local finite element numbering scheme, from Schoof et al. [6]
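As a concrete illustration of this convention, the sketch below lists the eight corners of a unit cube in the hexahedral local node order: nodes 1 to 4 run counterclockwise around the bottom face (viewed from above), and nodes 5 to 8 repeat the pattern on the top face. The array name and the unit-cube coordinates are illustrative assumptions.

```python
import numpy as np

# Corner coordinates (x, y, z) of a unit cube, listed in local node order 1..8.
hex_nodes = np.array(
    [
        [0, 0, 0],  # node 1
        [1, 0, 0],  # node 2
        [1, 1, 0],  # node 3
        [0, 1, 0],  # node 4
        [0, 0, 1],  # node 5
        [1, 0, 1],  # node 6
        [1, 1, 1],  # node 7
        [0, 1, 1],  # node 8
    ],
    dtype=float,
)

# Edges 1->2 and 1->4 lie in the bottom face; their cross product points
# toward the top face (+z), consistent with counterclockwise ordering.
normal = np.cross(hex_nodes[1] - hex_nodes[0], hex_nodes[3] - hex_nodes[0])
print(normal)  # [0. 0. 1.]

# Each top node sits directly above its bottom counterpart.
offsets = hex_nodes[4:] - hex_nodes[:4]
print(bool(np.all(offsets == [0.0, 0.0, 1.0])))  # True
```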

References

1. Li FF, Johnson J, Yeung S. Lecture 11: Detection and Segmentation, CS 231n, Stanford University, 2017.

2. Lin TY, Maire M, Belongie S, Hays J, Perona P, Ramanan D, Dollár P, Zitnick CL. Microsoft COCO: Common objects in context. In Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6-12, 2014, Proceedings, Part V 13 2014 (pp. 740-755). Springer International Publishing.

3. Bit A, Ghagare D, Rizvanov AA, Chattopadhyay H. Assessment of influences of stenoses in right carotid artery on left carotid artery using wall stress marker. BioMed Research International. 2017;2017(1):2935195.

4. Terpsma RJ, Hovey CB. Blunt impact brain injury using cellular injury criterion. Sandia National Lab. (SNL-NM), Albuquerque, NM (United States); 2020 Oct 1.

5. Sharma S. DICOM Coordinate Systems — 3D DICOM for computer vision engineers, Medium, 2021-12-22.

6. Schoof LA, Yarberry VR. EXODUS II: a finite element data model. Sandia National Lab. (SNL-NM), Albuquerque, NM (United States); 1994 Sep 1.

Installation

Use automesh from one of the following interfaces:

- command line interface,

- Rust interface, or

- Python interface.

All interfaces are independent from each other:

- The Rust interfaces can be used without the Python interface.

- The Python interface can be used without the Rust interfaces.

For macOS and Linux, use a terminal. For Windows, use a Command Prompt (CMD) or PowerShell.

Some macOS users have encountered a build error with the netcdf-src crate. See Troubleshooting for a solution to this error.

Step 1: Install Prerequisites

- The command line interface and Rust interface depend on Rust and Cargo.
  - Cargo is the Rust package manager.
  - Cargo is included with the Rust installation.
- The Python interface depends on Python and pip.
  - pip is the Python package installer.
  - pip is included with the standard installation of Python starting from Python 3.4.

Rust Prerequisites

It is recommended to install Rust using Rustup, which is an installer and version management tool.

Python Prerequisites

macOS

- Install Homebrew (if you don't have it already). Open the Terminal and run:

/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"

- Install Python. After Homebrew is installed, run:

brew install python

- Verify Python and pip are installed:

python3 --version

pip3 --version

Linux

- Update Package List. Open a terminal and run:

sudo apt update

- Install Python and pip. For Ubuntu or Debian-based systems, run:

sudo apt install python3 python3-pip

- Verify Python and pip are installed:

python3 --version

pip3 --version

Windows

- Download Python. Go to the official Python website and download the latest version of Python for Windows.

- Run the Installer. During installation, make sure to check the box that says "Add Python to PATH."

- Verify Python and pip are installed:

python --version

pip --version

All Environments

On all environments, a virtual environment is recommended, but not required. Create a virtual environment:

python3 -m venv .venv # venv, or

uv venv .venv # using uv

uv is a fast Python package manager, written in Rust. It is an alternative to pip.

Activate the virtual environment:

source .venv/bin/activate # for bash shell

source .venv/bin/activate.csh # for c shell

source .venv/bin/activate.fish # for fish shell

.\.venv\Scripts\activate # for powershell

Step 2: Install automesh

Install the desired interface.

Command Line Interface

cargo install automesh

Rust Interface

cargo add automesh

Python Interface

pip install automesh # using pip, or

uv pip install automesh # using uv

Step 3: Verify Installation

Rust Interfaces

Run the command line help:

automesh

which should display the following:

@@@@@@@@@@@@@@@@

@@@@ @@@@@@@@@@

@@@@ @@@@@@@@@@@ automesh: Automatic mesh generation

@@@@ @@@@@@@@@@@@

@@ @@ @@ v0.3.8 linux x86_64

@@ @@ @@ build d01ddd6 2026-02-27T16:44:12+0000

@@@@@@@@@@@@ @@@ Chad B. Hovey <chovey@sandia.gov>

@@@@@@@@@@@ @@@@ Michael R. Buche <mrbuche@sandia.gov>

@@@@@@@@@@ @@@@@ @

@@@@@@@@@@@@@@@@

Usage: automesh [COMMAND]

Commands:

convert Converts between mesh or segmentation file types

defeature Defeatures and creates a new segmentation

diff Show the difference between two segmentations

extract Extracts a specified range of voxels from a segmentation

mesh Creates a finite element mesh from a tessellation or segmentation

metrics Quality metrics for an existing finite element mesh

remesh Applies isotropic remeshing to an existing mesh

segment Creates a segmentation or voxelized mesh from an existing mesh

smooth Applies smoothing to an existing mesh

help Print this message or the help of the given subcommand(s)

Options:

-h, --help Print help

-V, --version Print version

Python Interface

python

# In Python, import the module

>>> import automesh

# List all attributes and methods of the module

>>> dir(automesh)

# Get help on the module

>>> help(automesh)

Troubleshooting

ZLIB target not found

Some users have encountered an error when trying to build the netcdf crate, e.g.,

...

error: failed to run custom build command for `netcdf-src v0.4.3`

...

The link interface of target "hdf5-static" contains:

ZLIB::ZLIB

but the target was not found. Possible reasons include:

* There is a typo in the target name.

* A find_package call is missing for an IMPORTED target.

* An ALIAS target is missing.

...

HDF5 is looking for ZLIB::ZLIB as a CMake target, but it's not being found

even though ZLIB is present.

A solution is to use a dynamically-linked netcdf from Homebrew instead of

trying to build it statically. This should avoid the CMake ZLIB::ZLIB target

issue entirely.

Update the Cargo.toml for automesh to avoid static linking:

...

# netcdf = { version = "=0.11.1", features = ["ndarray", "static"] }

netcdf = { version = "=0.11.1", features = ["ndarray"] }

...

Then,

# Make sure netcdf is installed via Homebrew

brew install netcdf

# Set the environment variable to use system netcdf

export NETCDF_DIR=/opt/homebrew

# Clean and build

cargo clean

cargo build

If you still encounter errors about netcdf not being found, also try:

export PKG_CONFIG_PATH=/opt/homebrew/lib/pkgconfig

export DYLD_LIBRARY_PATH=/opt/homebrew/lib

Getting Started

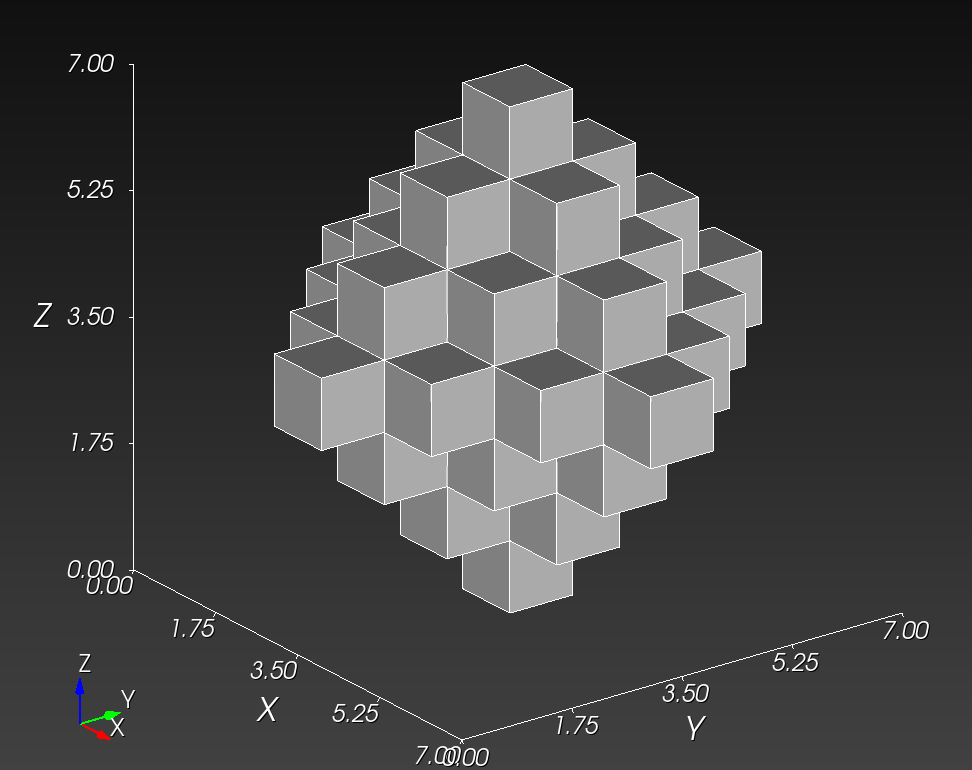

In this section, we use a simple segmentation to create a finite element mesh, a smoothed finite element mesh, and an isosurface.

Segmentation Input

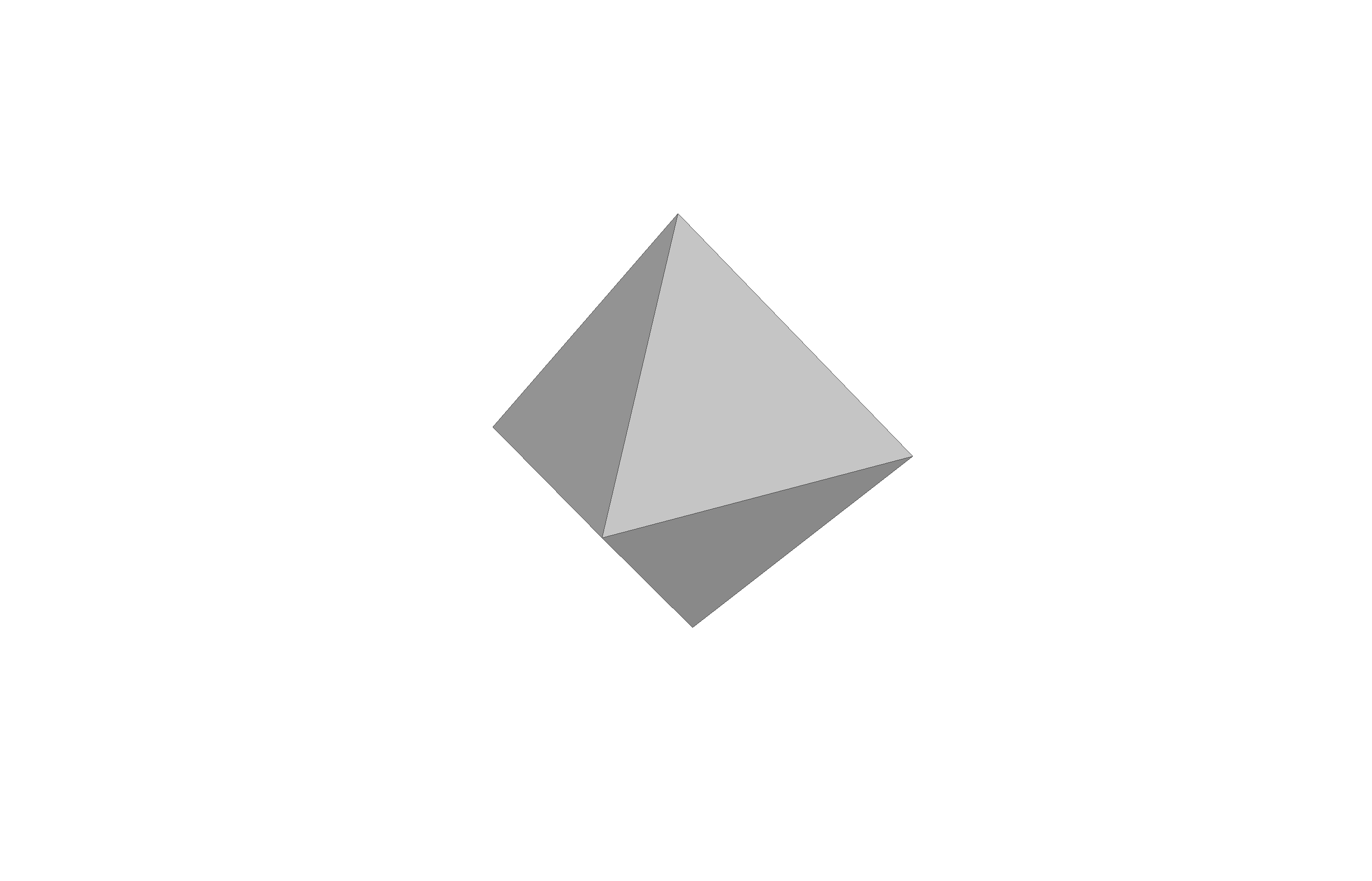

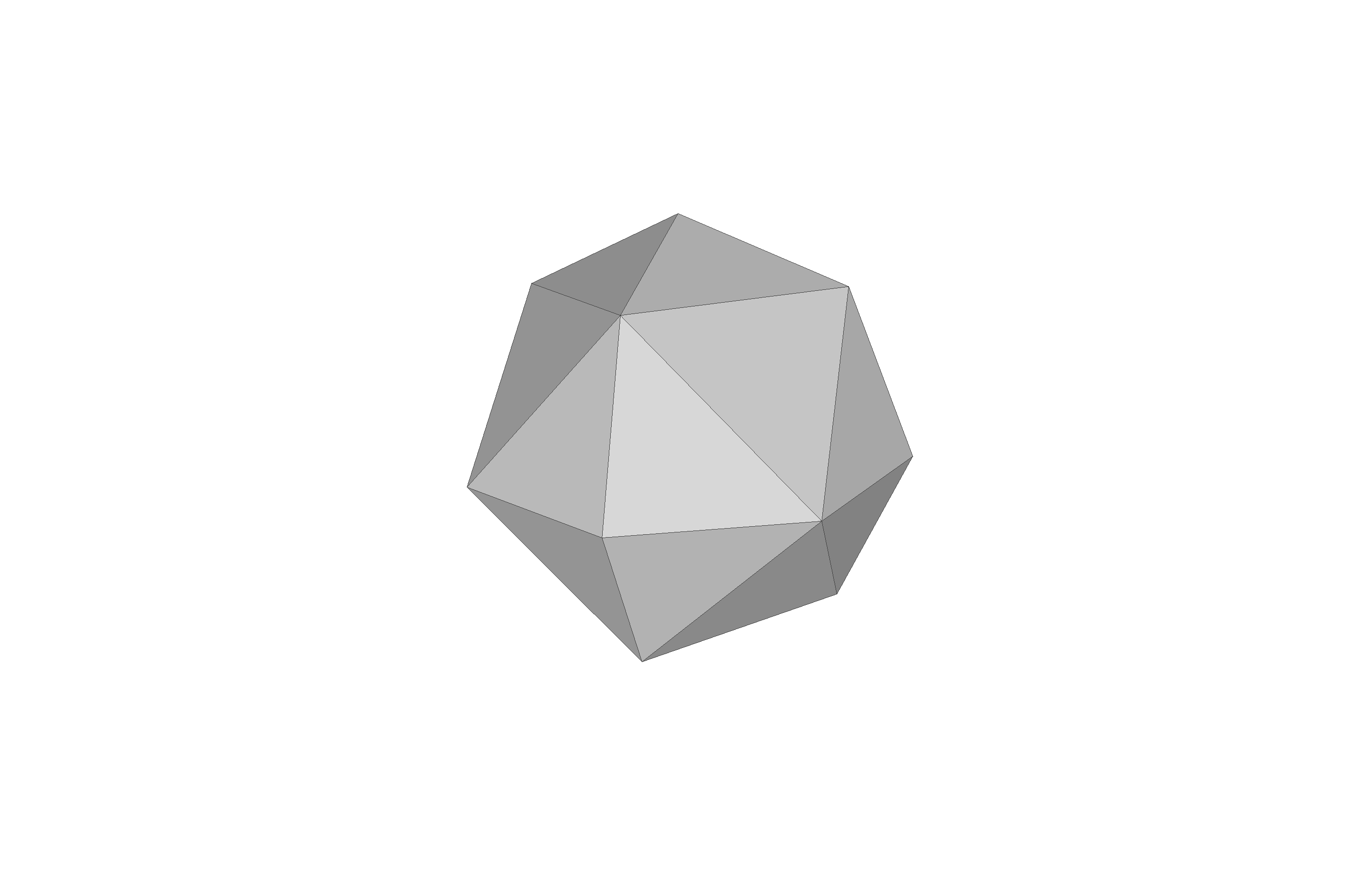

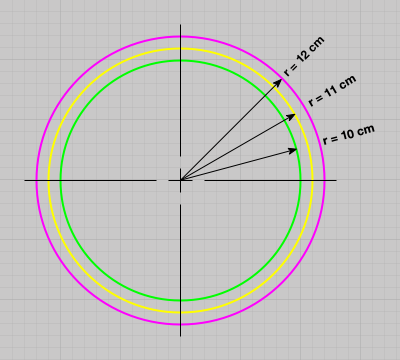

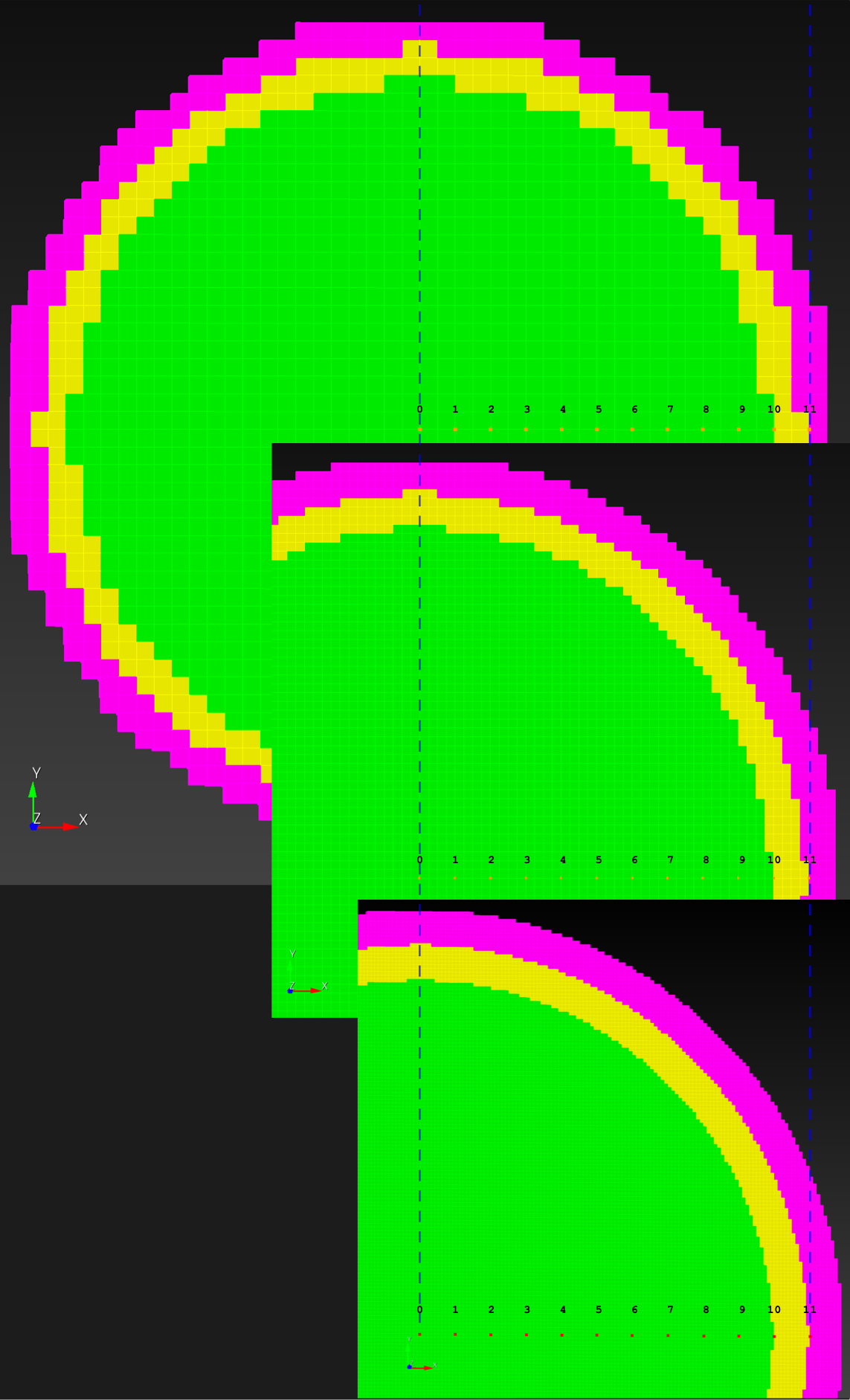

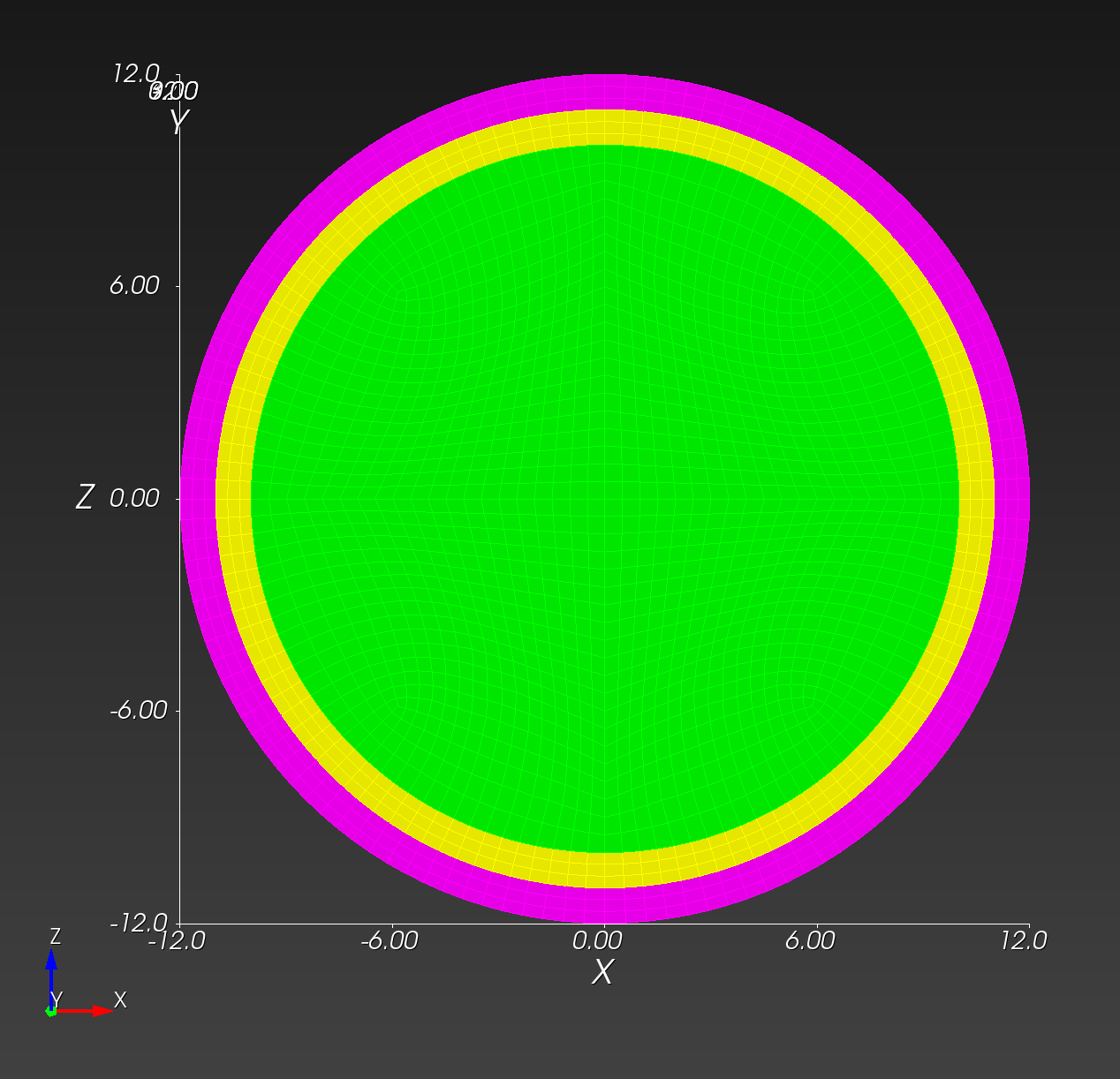

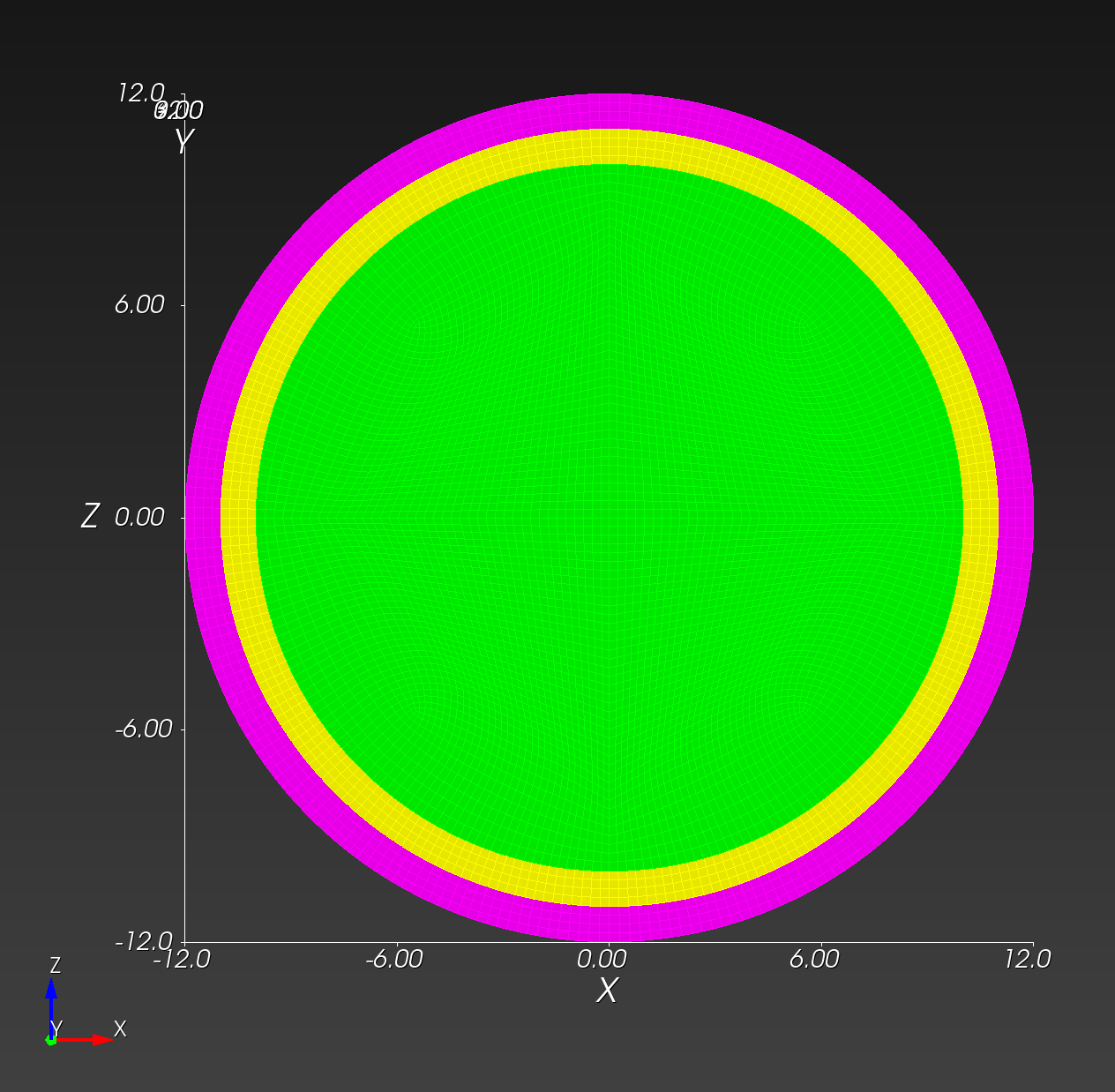

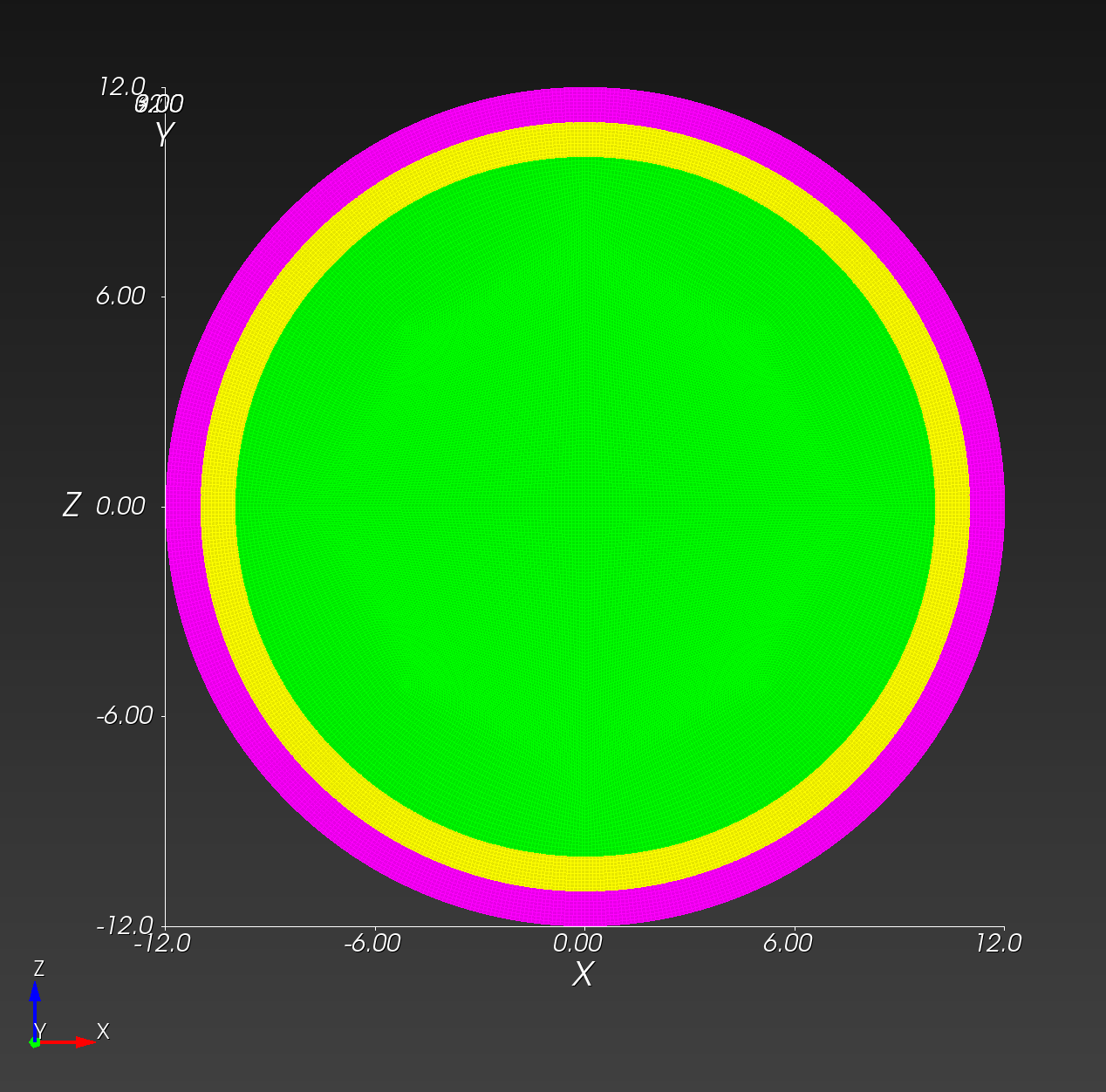

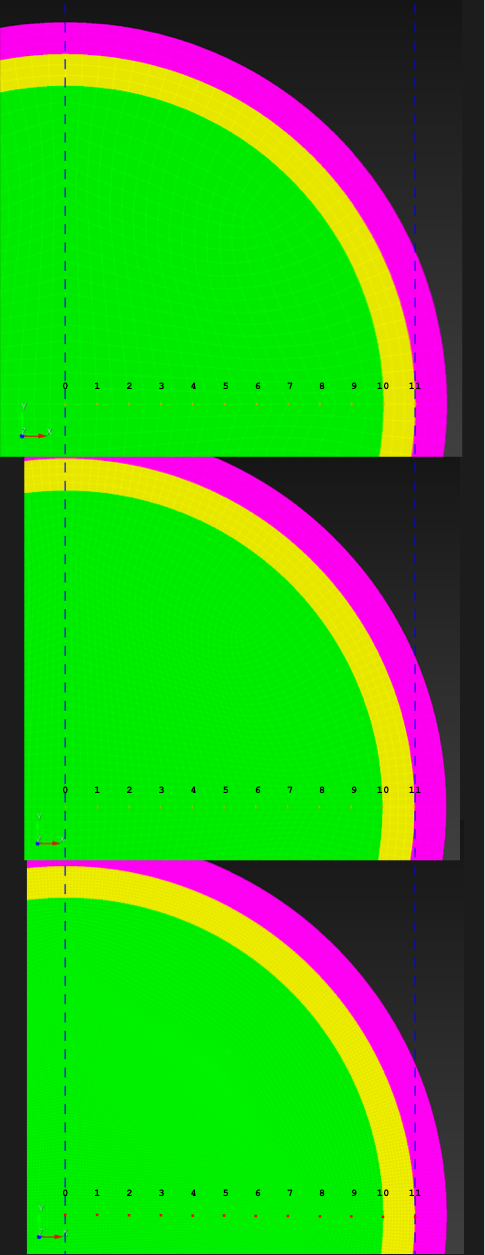

We start with a segmentation of a regular octahedron composed of three materials. The segmentation encodes

- 0 for void (or background), shown in gray,
- 1 for the inner domain, shown in green,
- 2 for the intermediate layer, shown in yellow, and
- 3 for the outer layer, shown in magenta.

The (7 x 7 x 7) segmentation, at the midline cut plane,

appears as follows:

Consider each slice, 1 to 7, in succession:

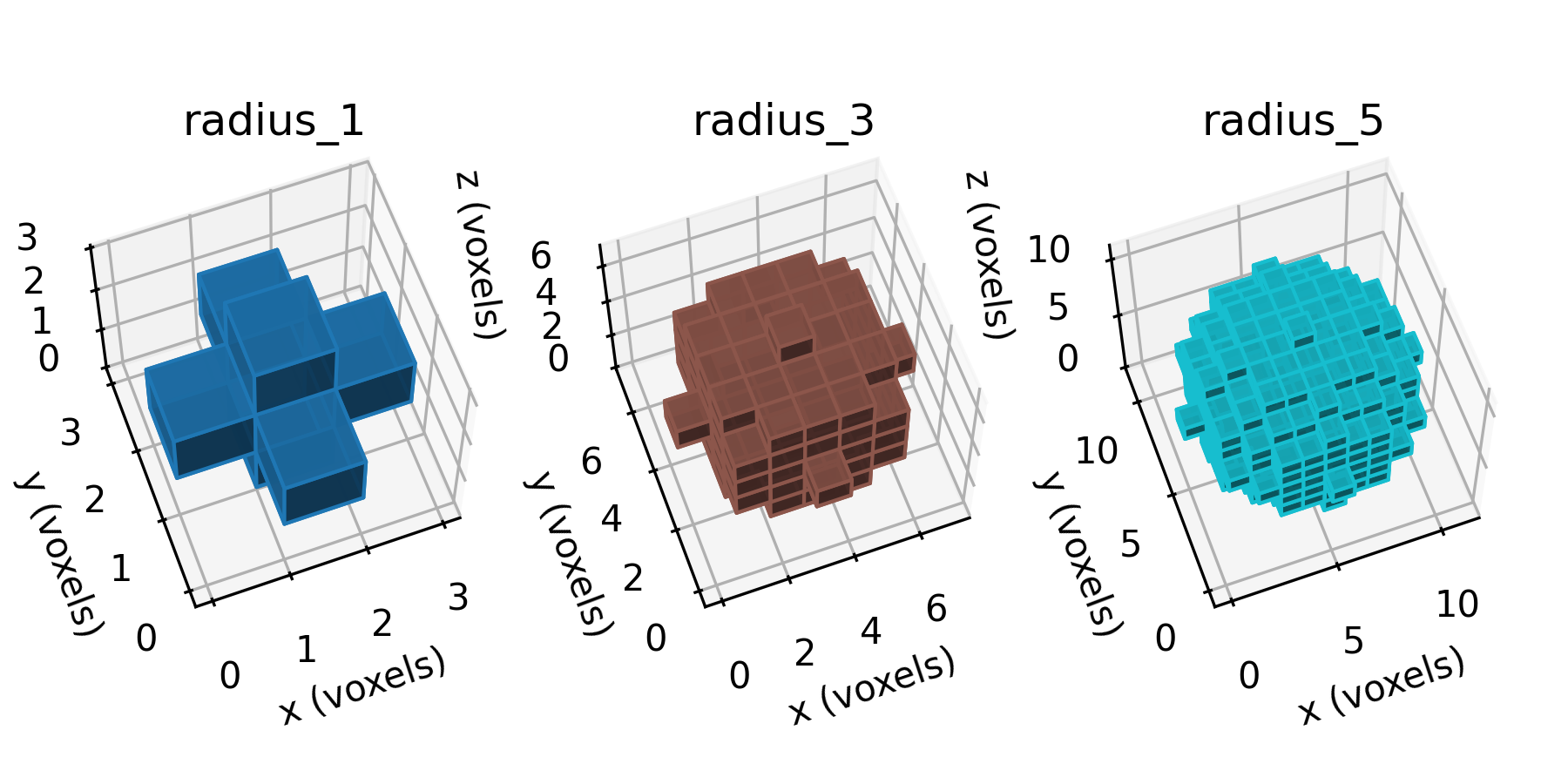

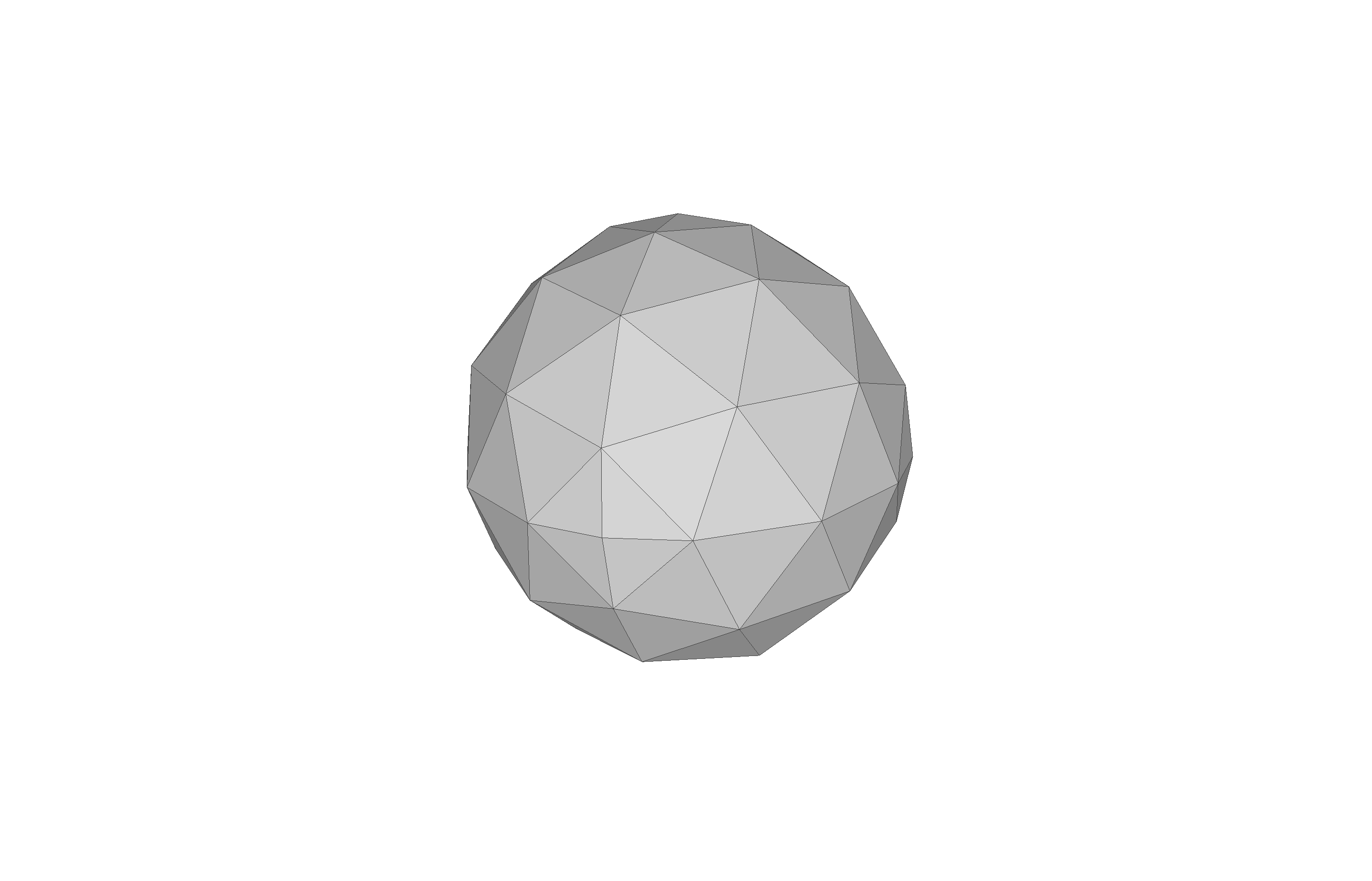

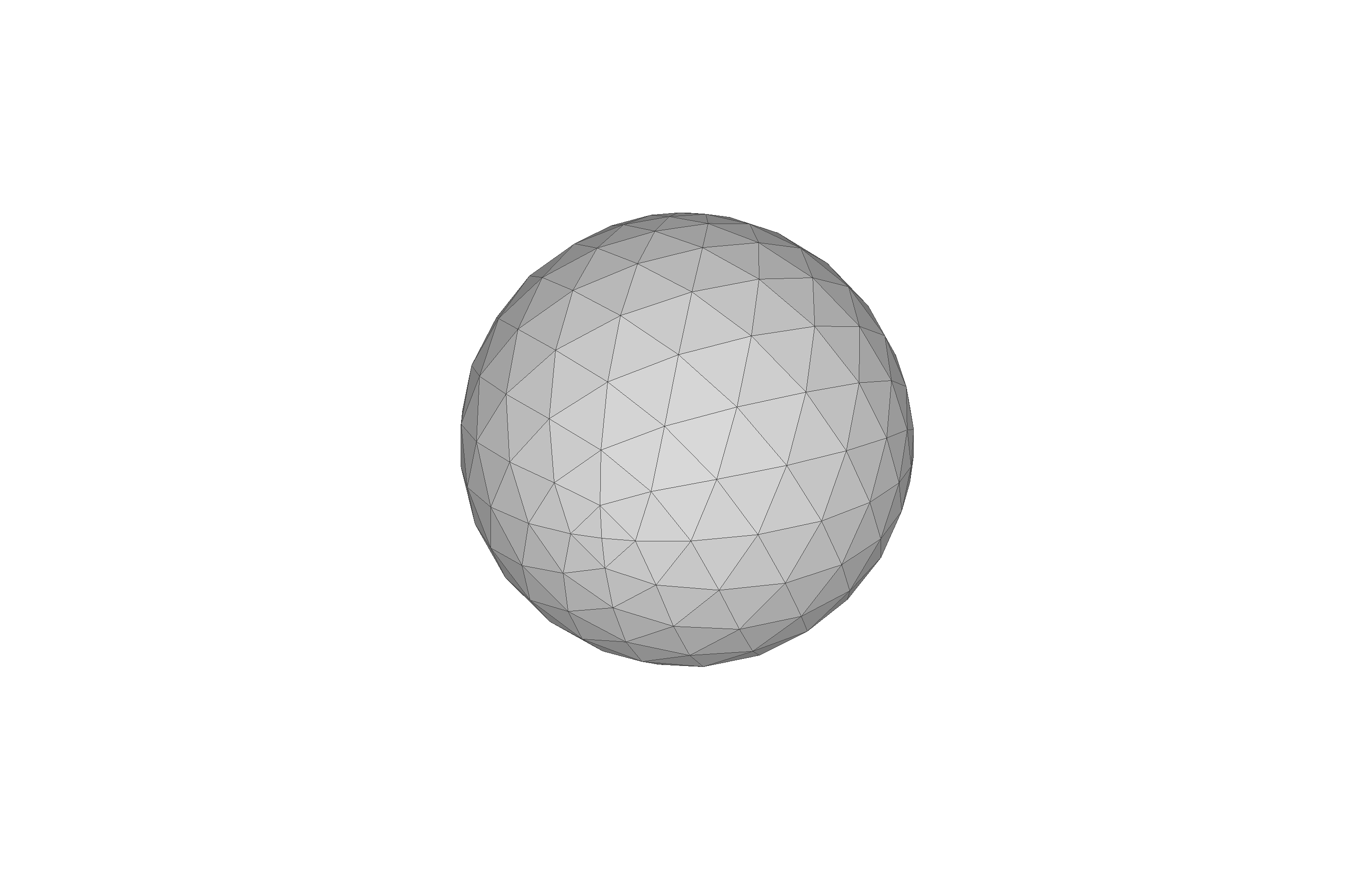

Remark: The (

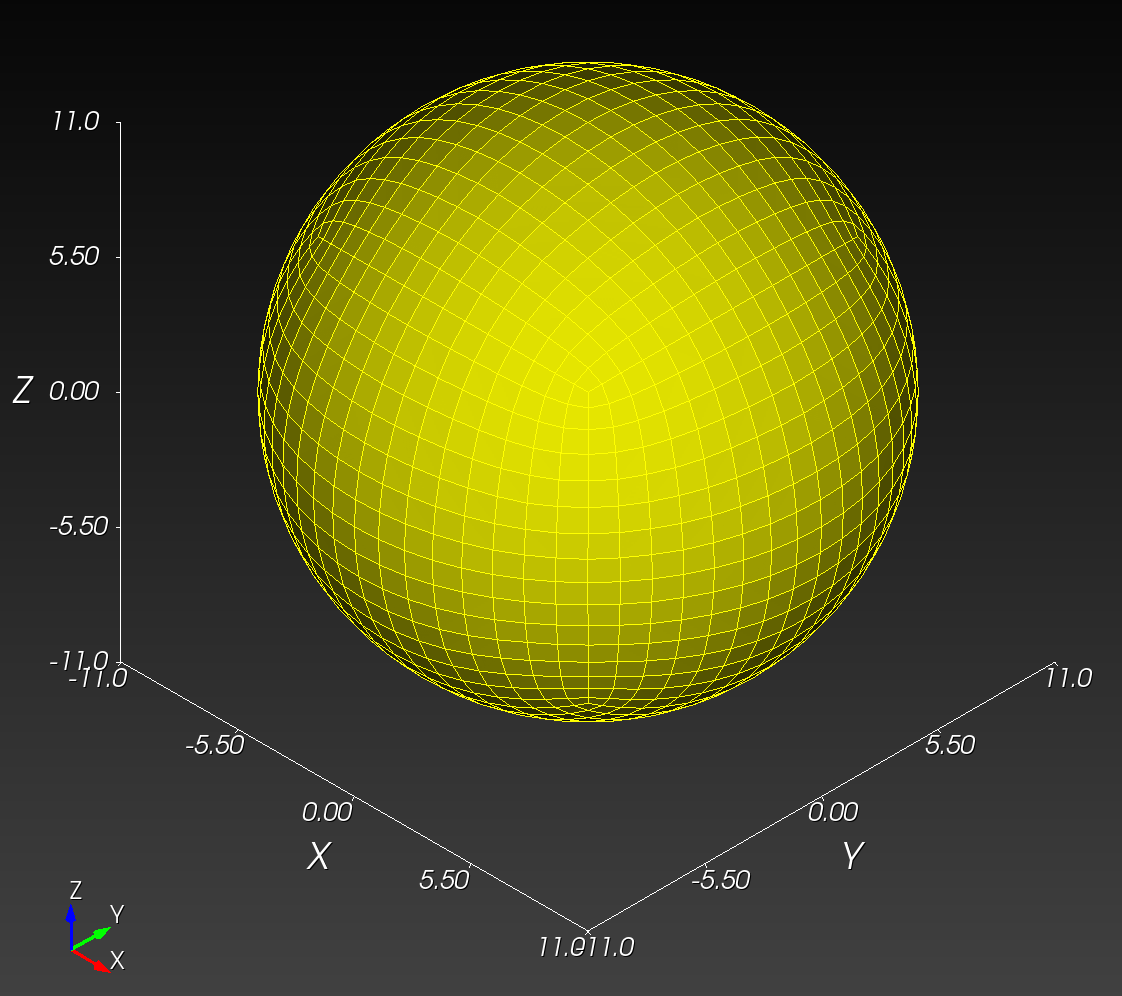

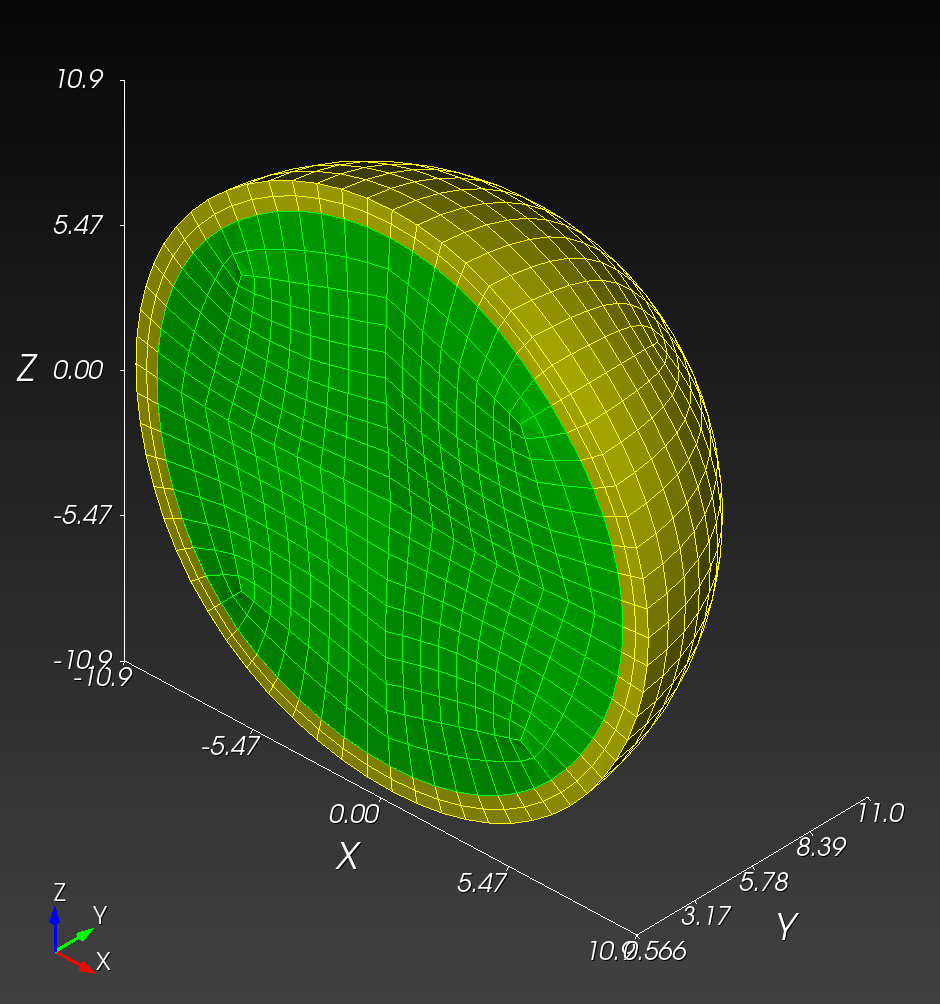

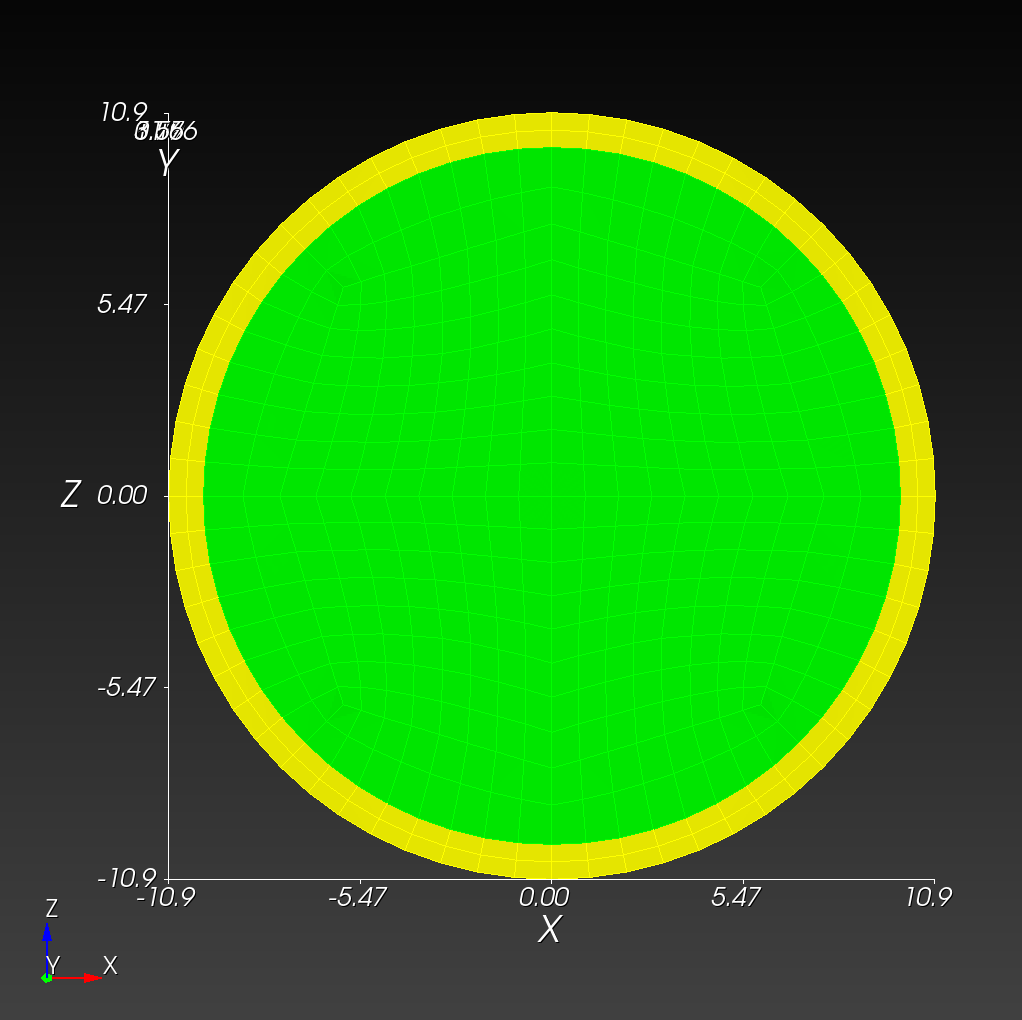

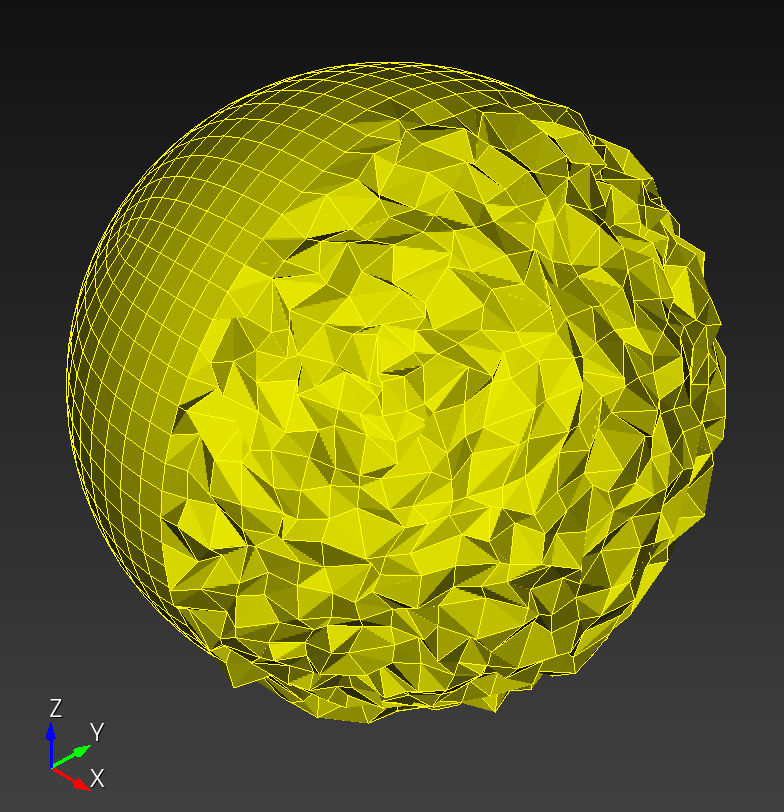

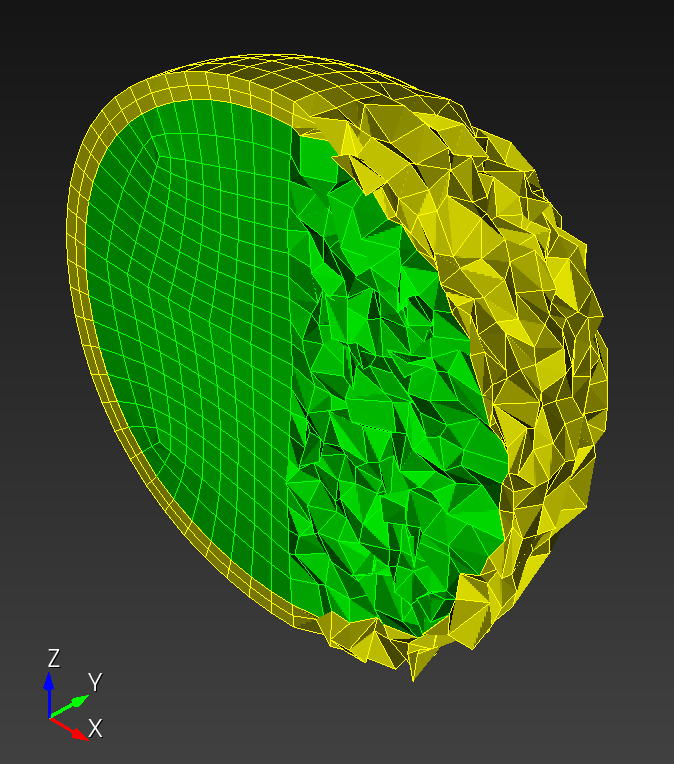

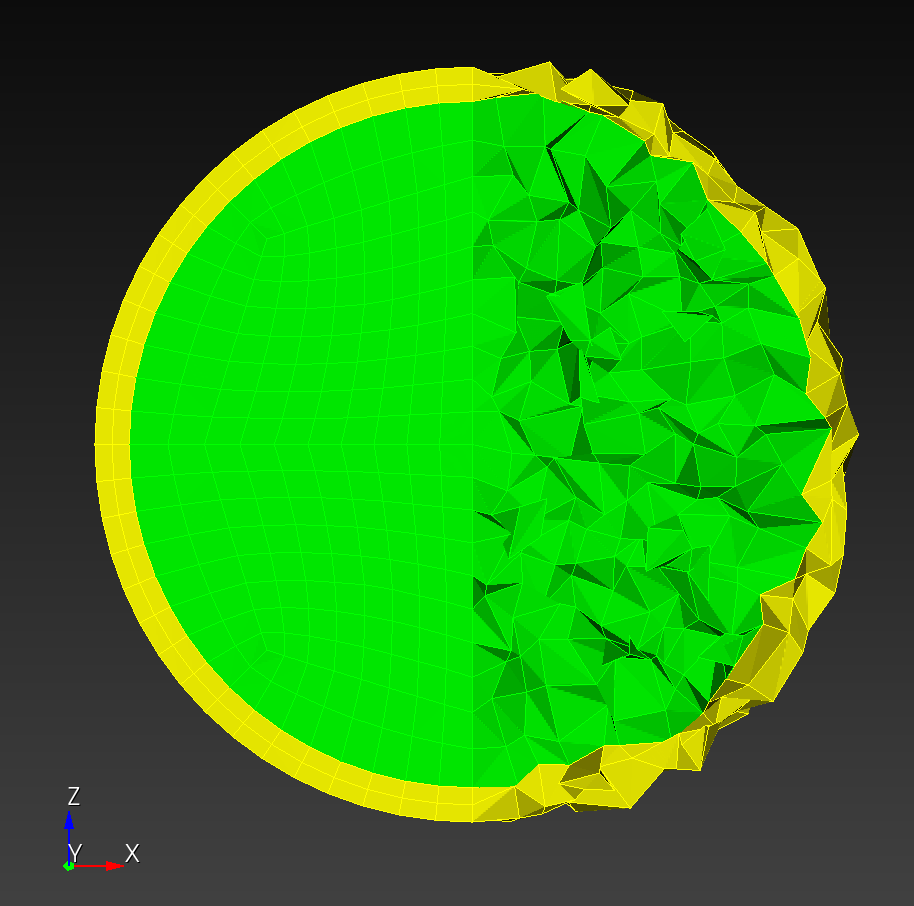

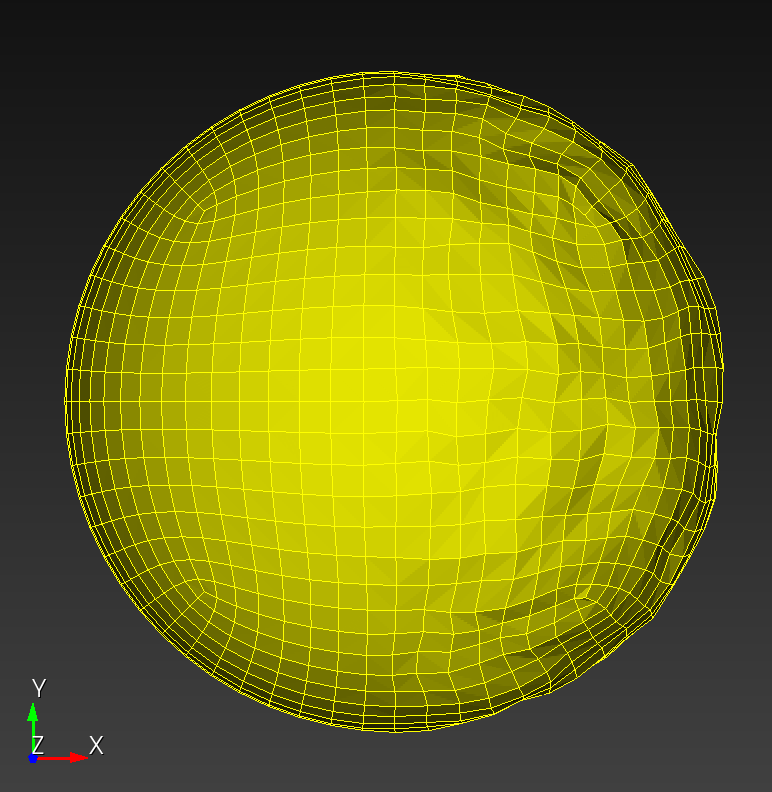

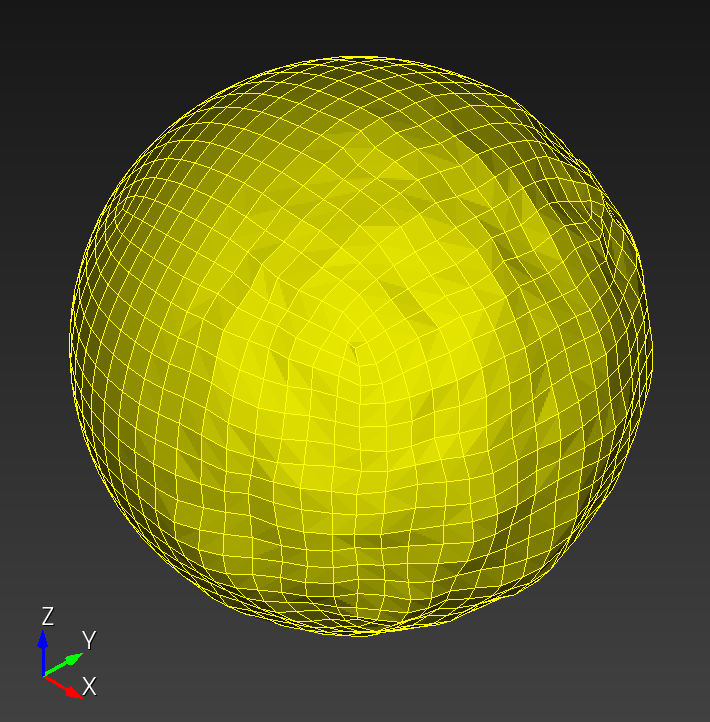

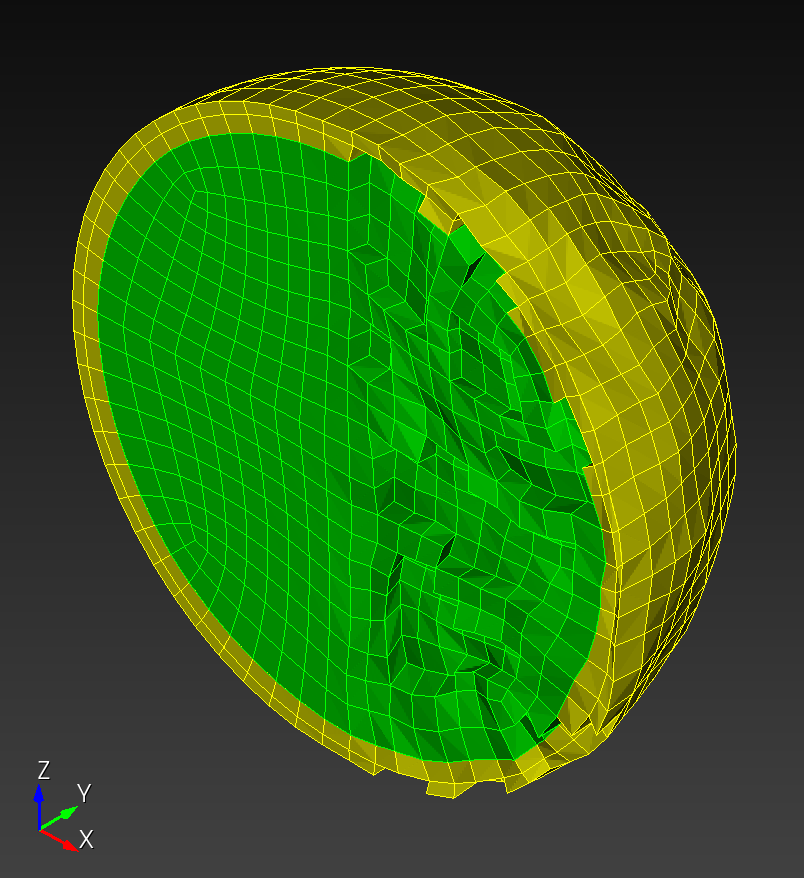

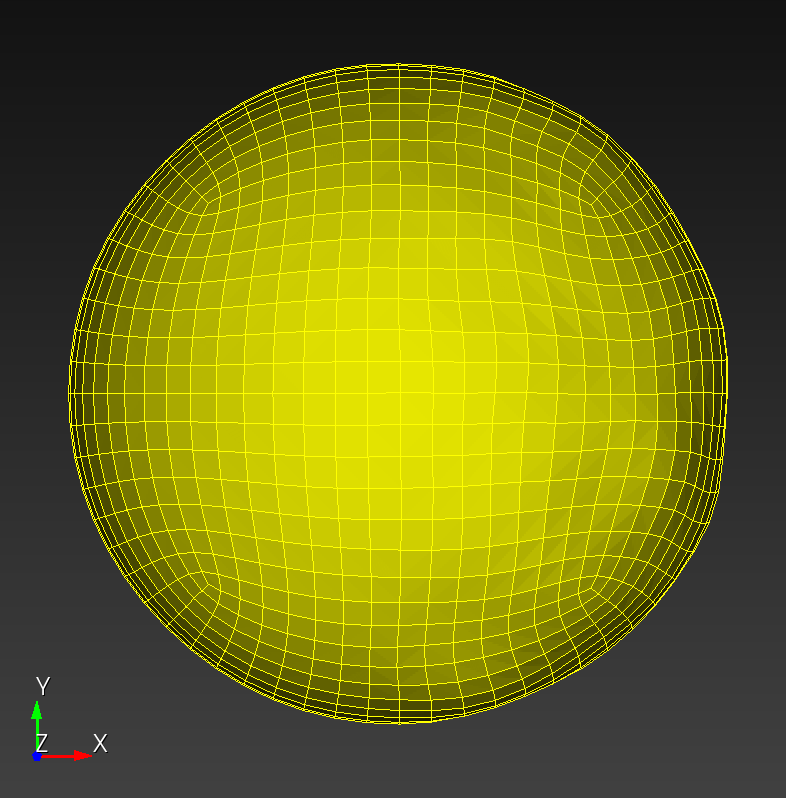

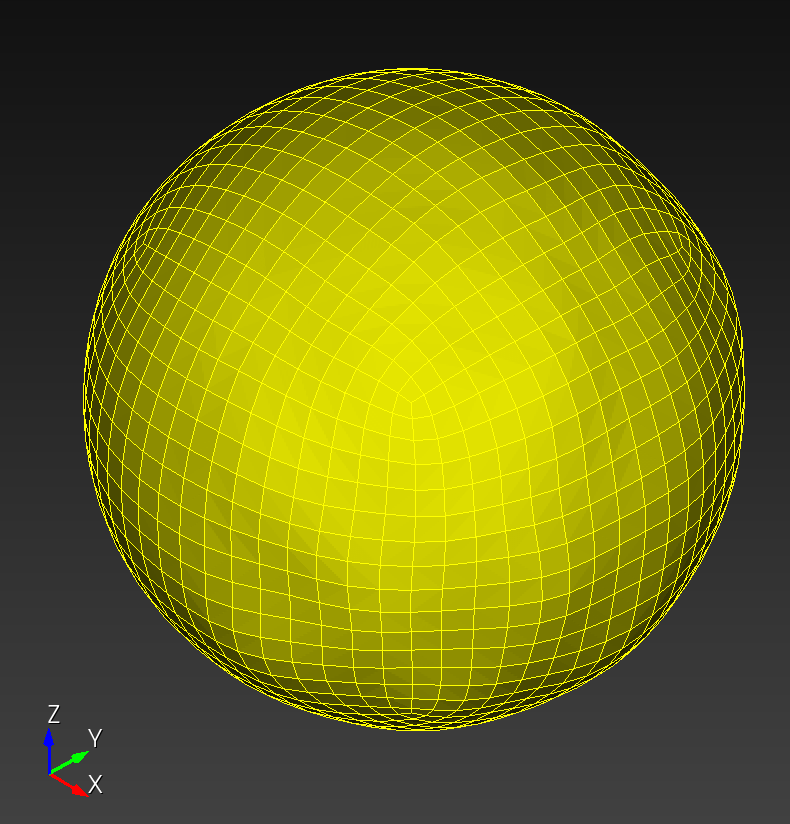

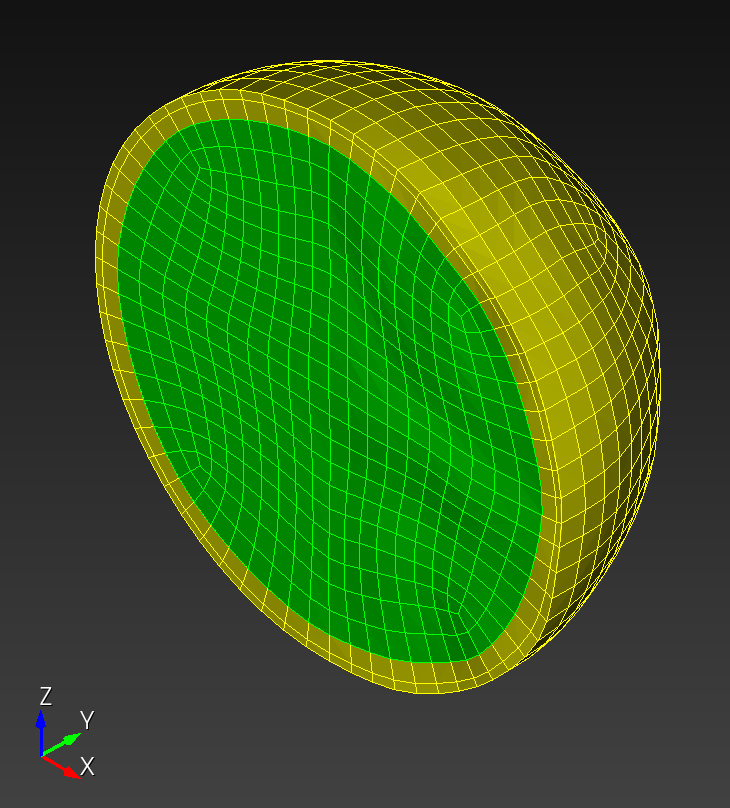

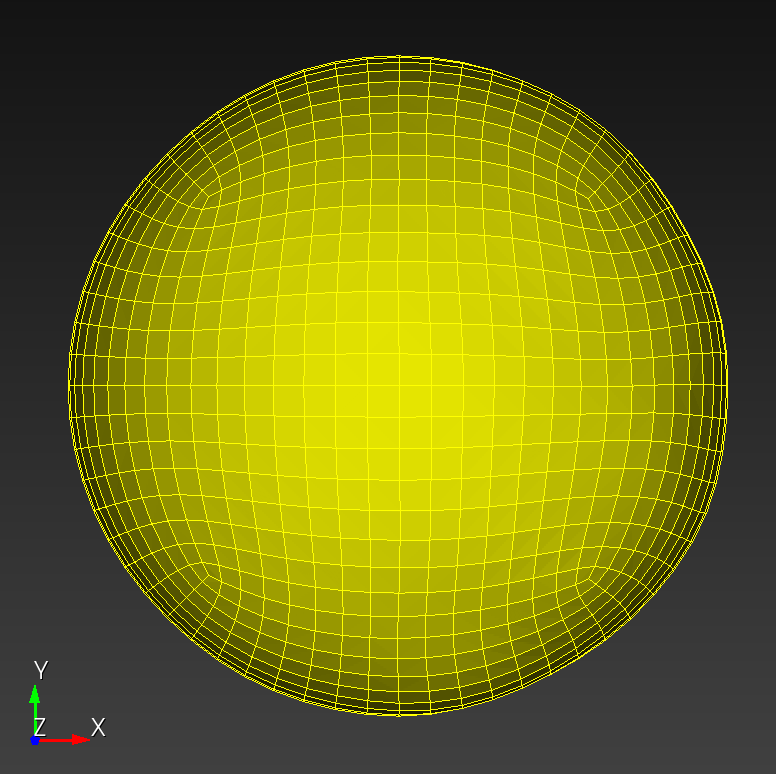

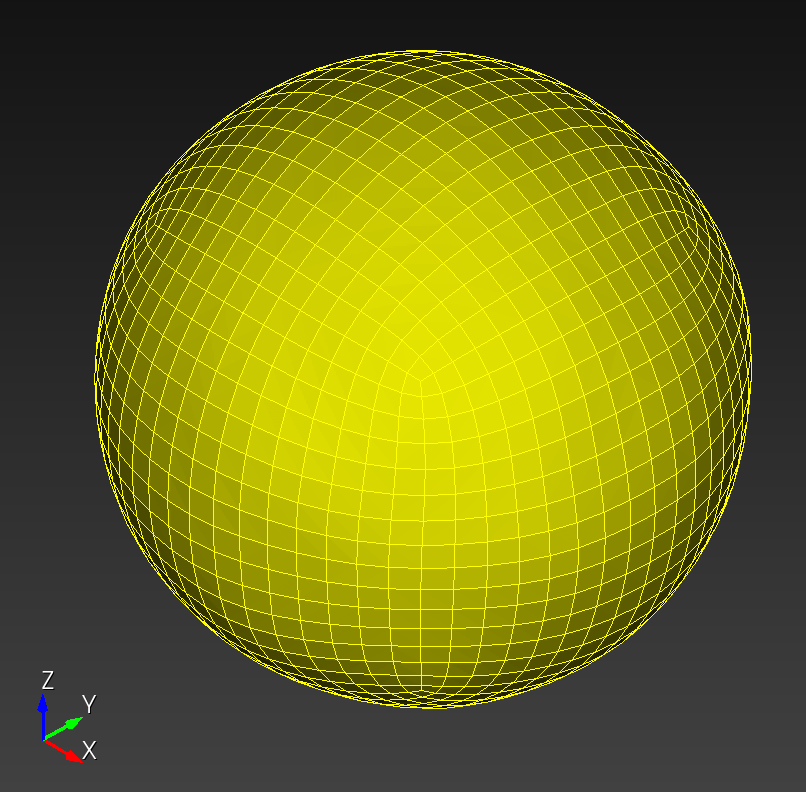

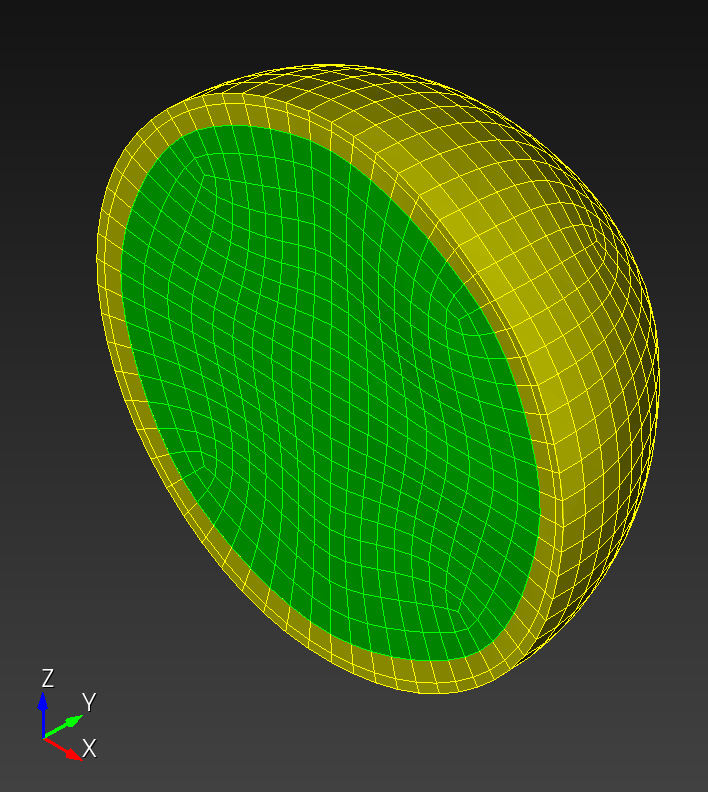

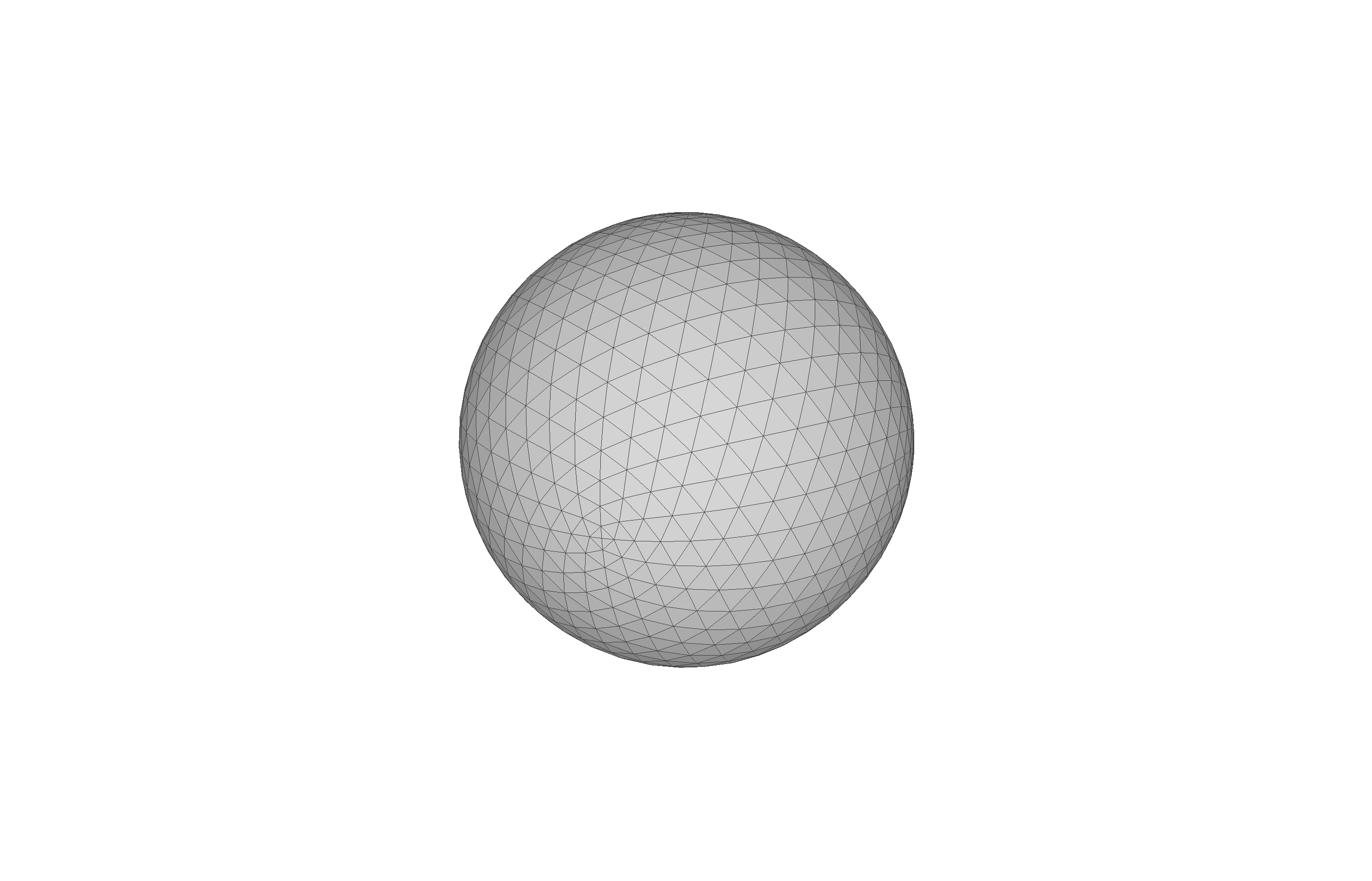

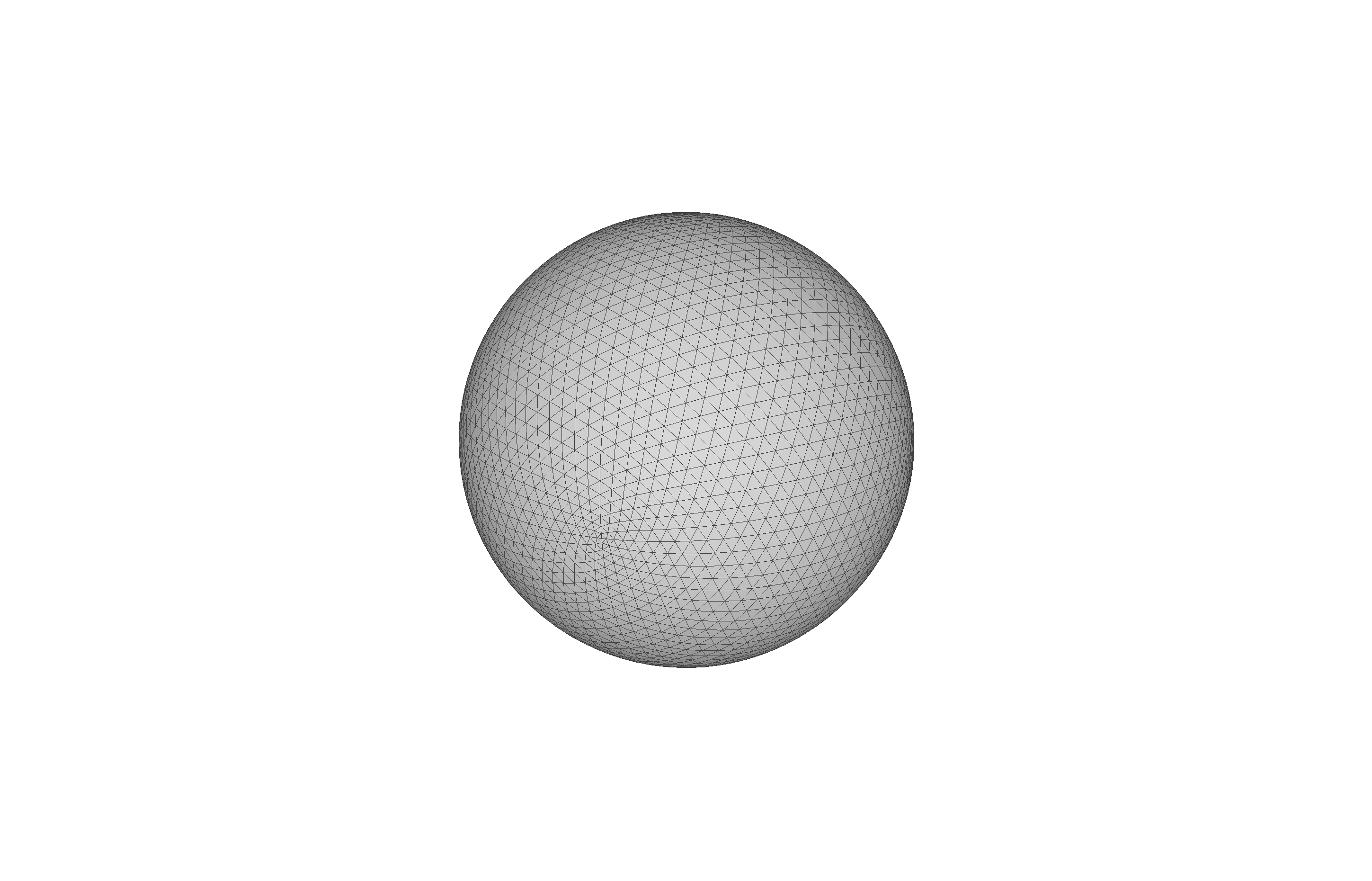

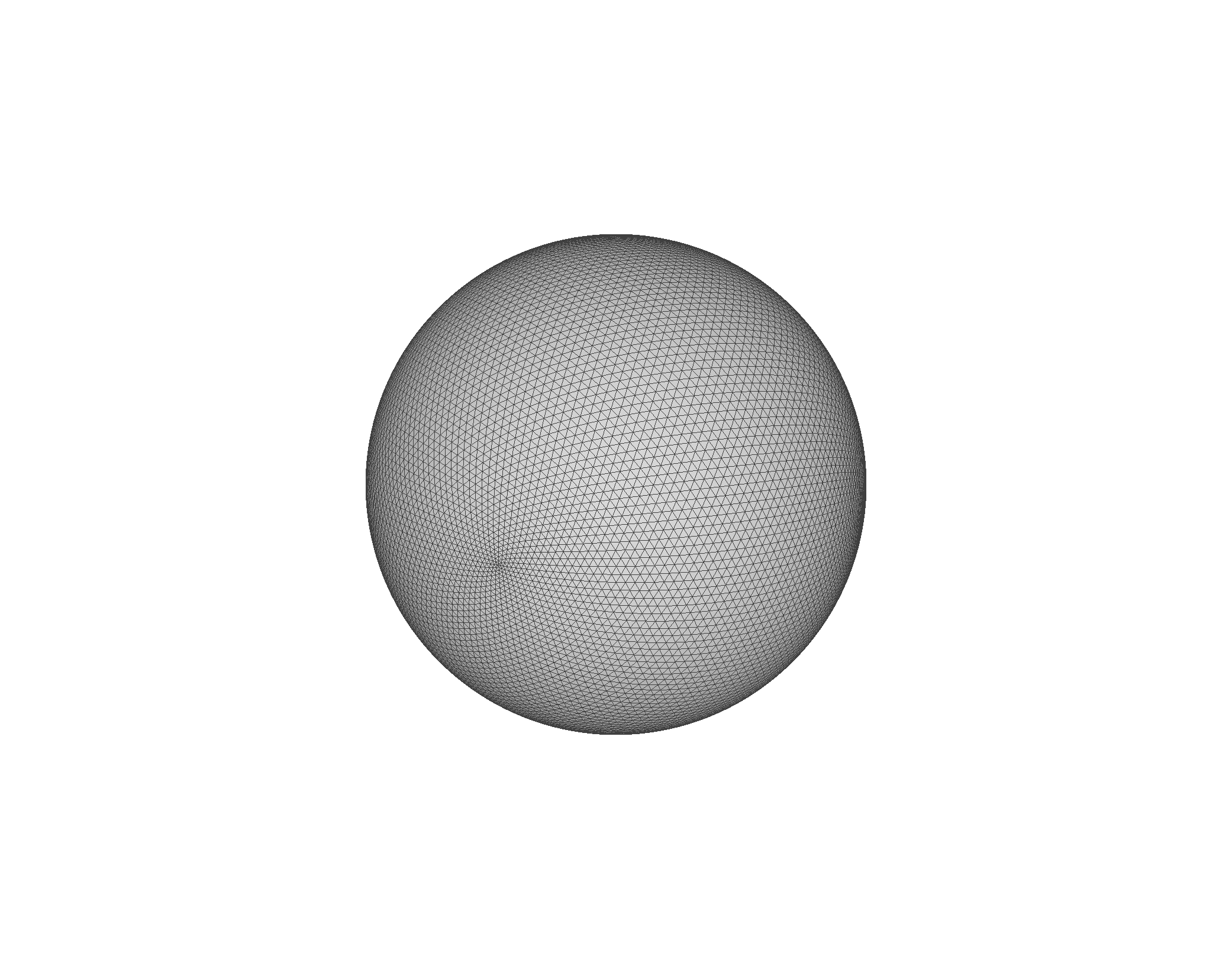

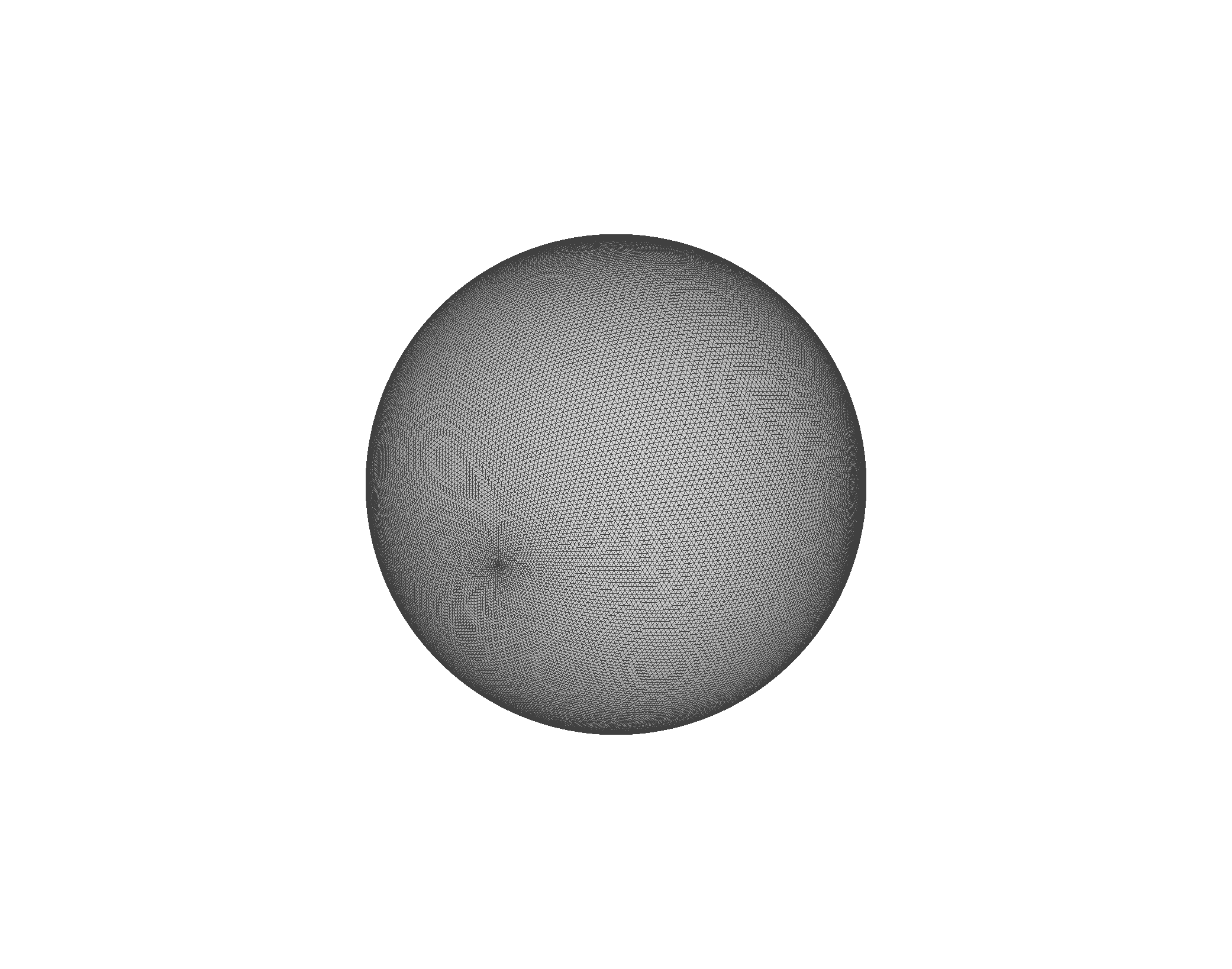

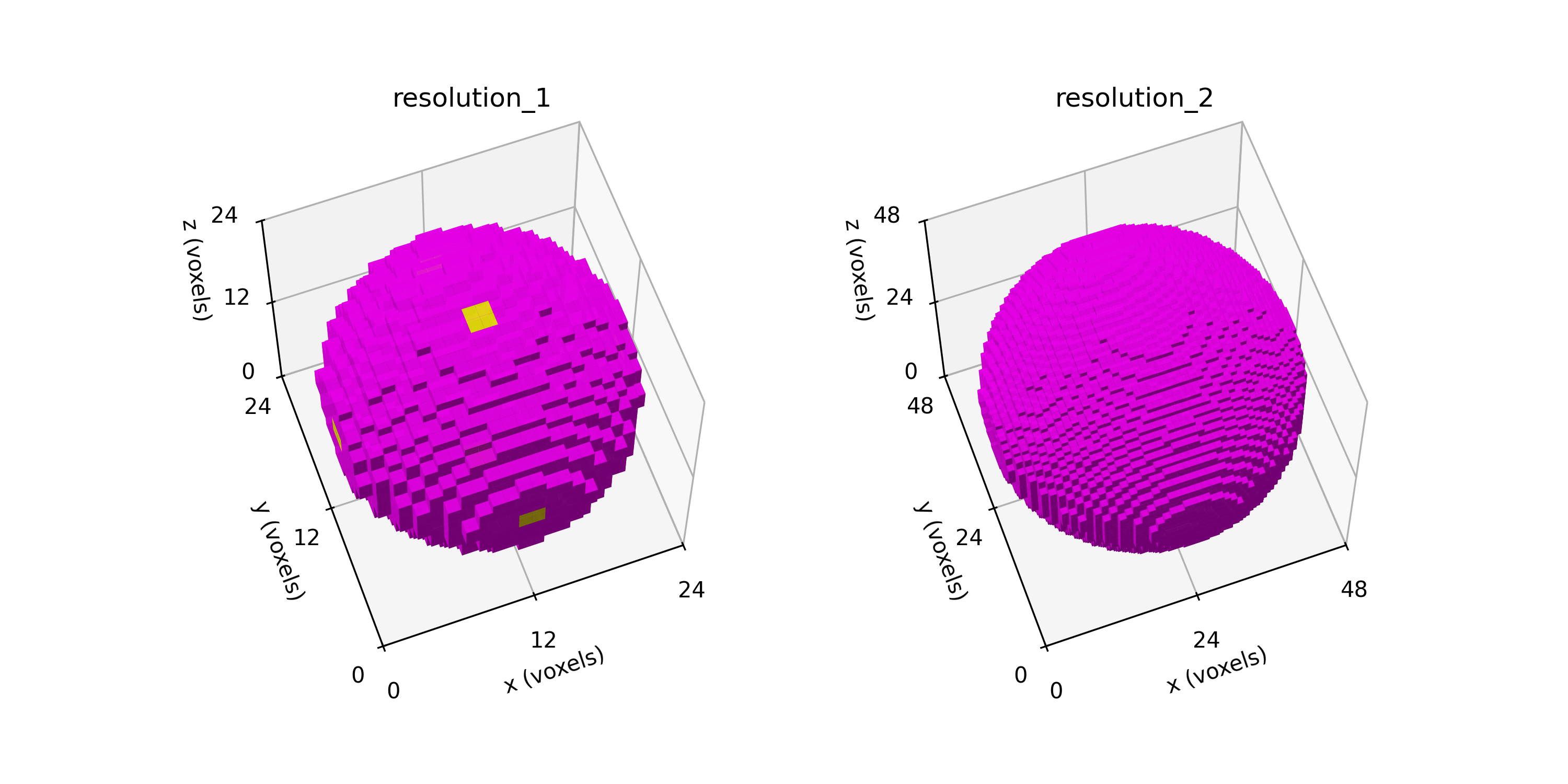

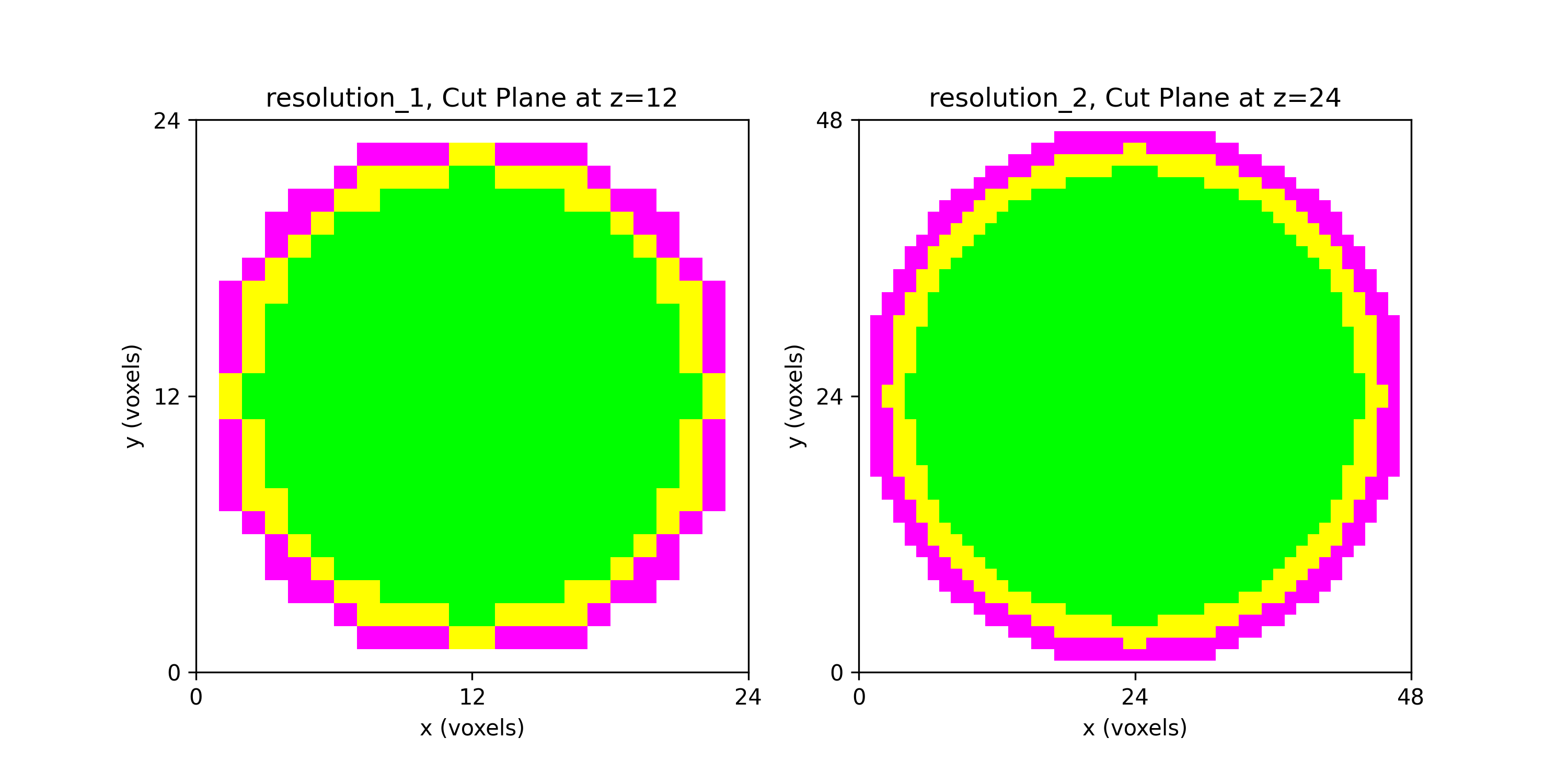

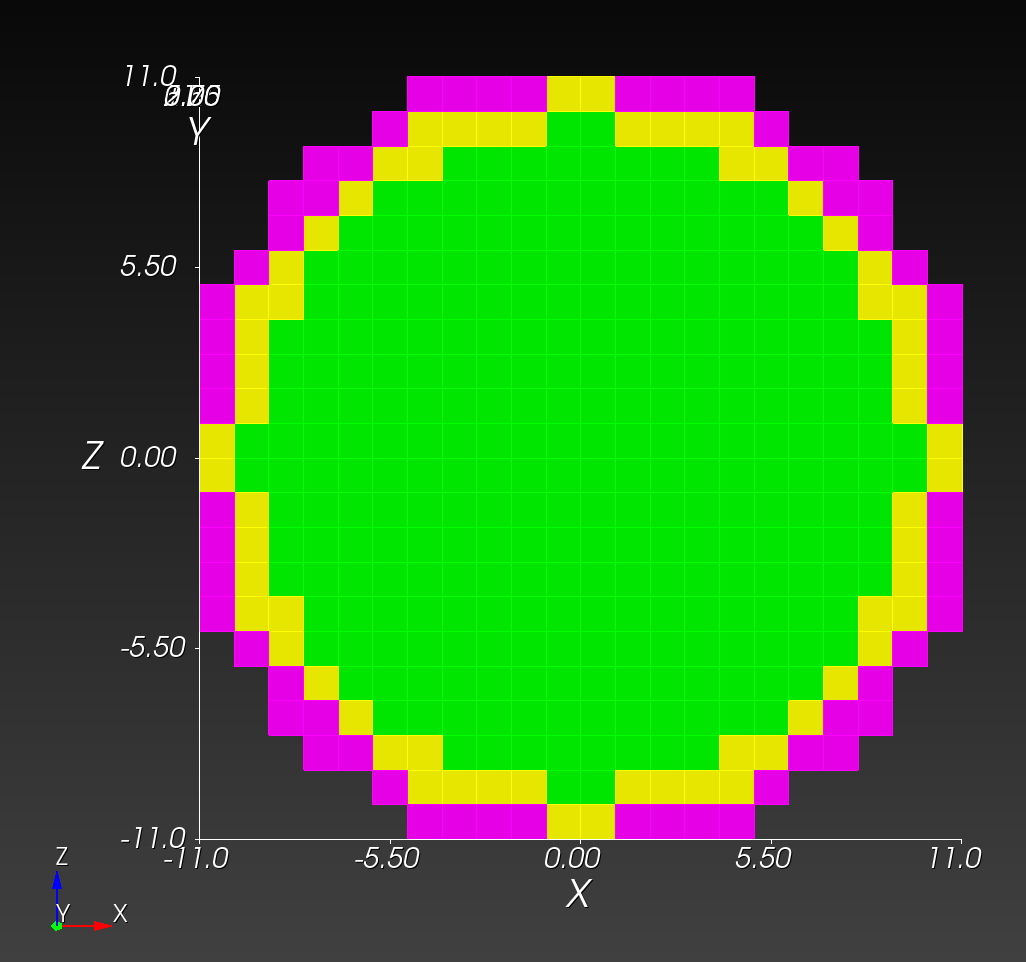

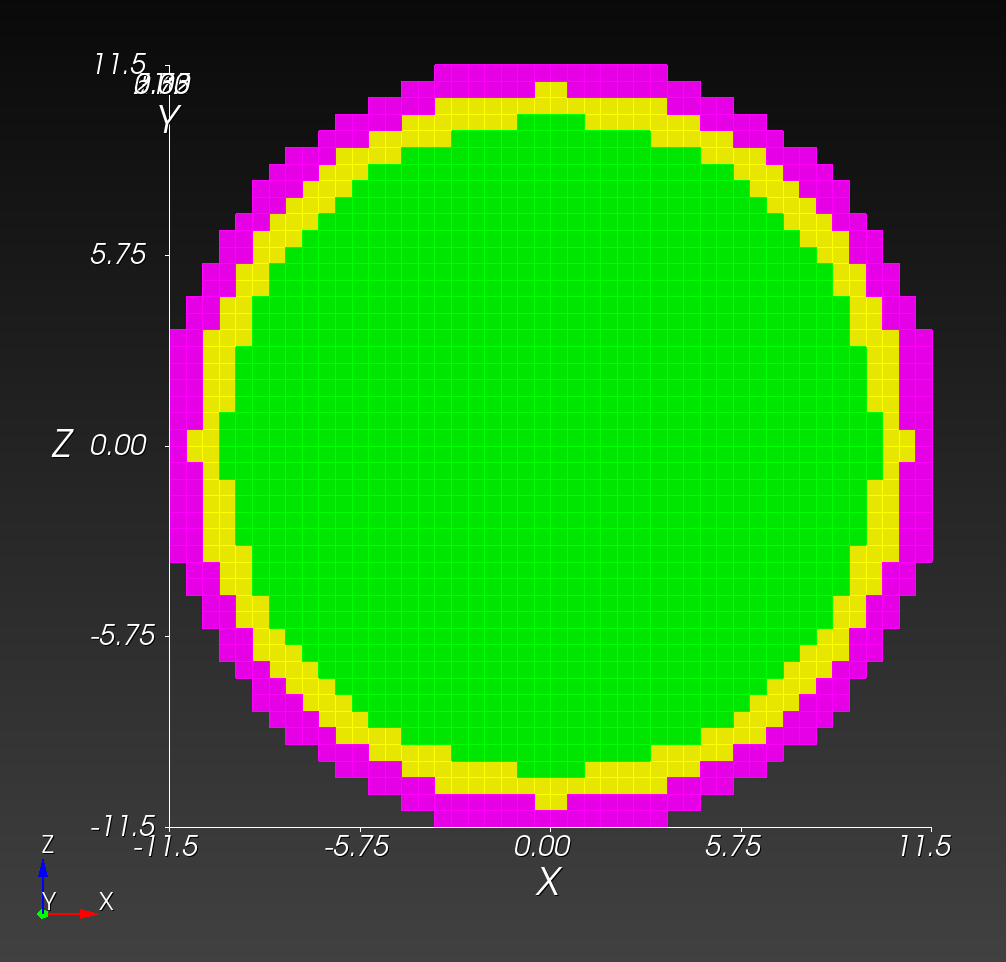

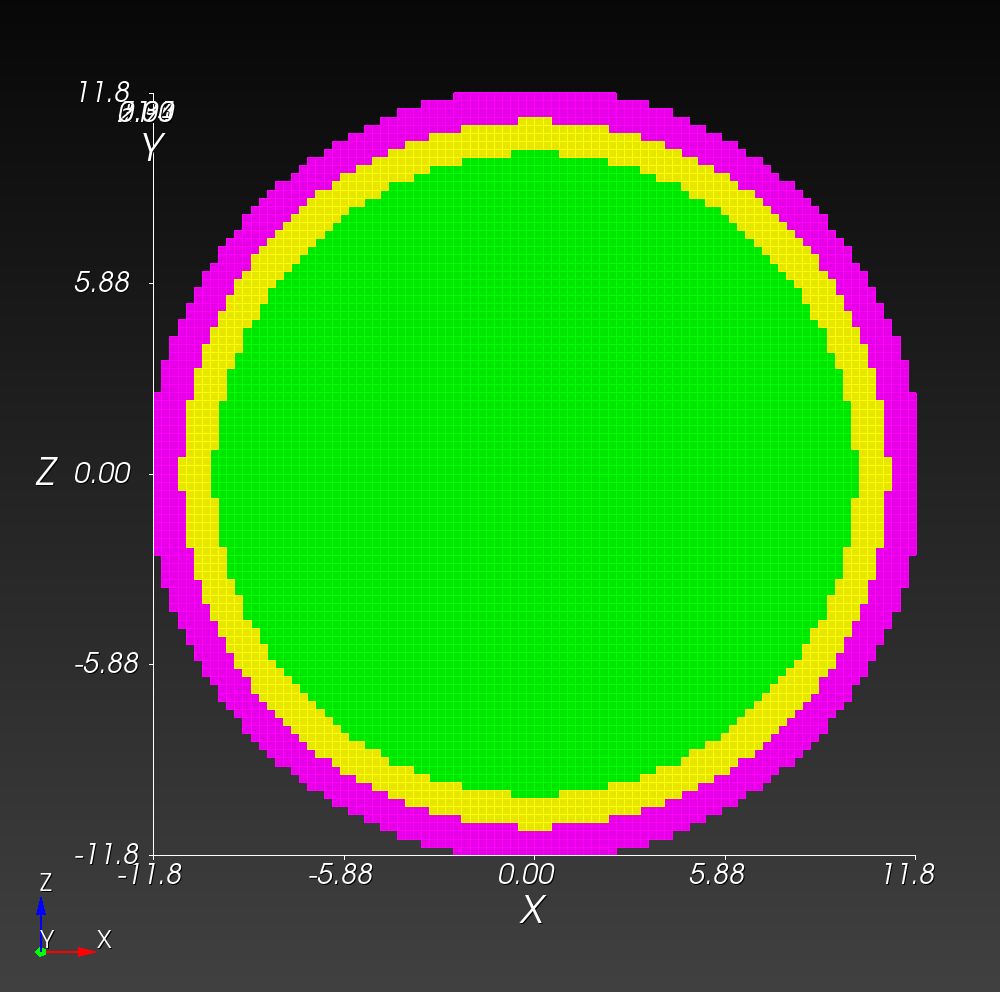

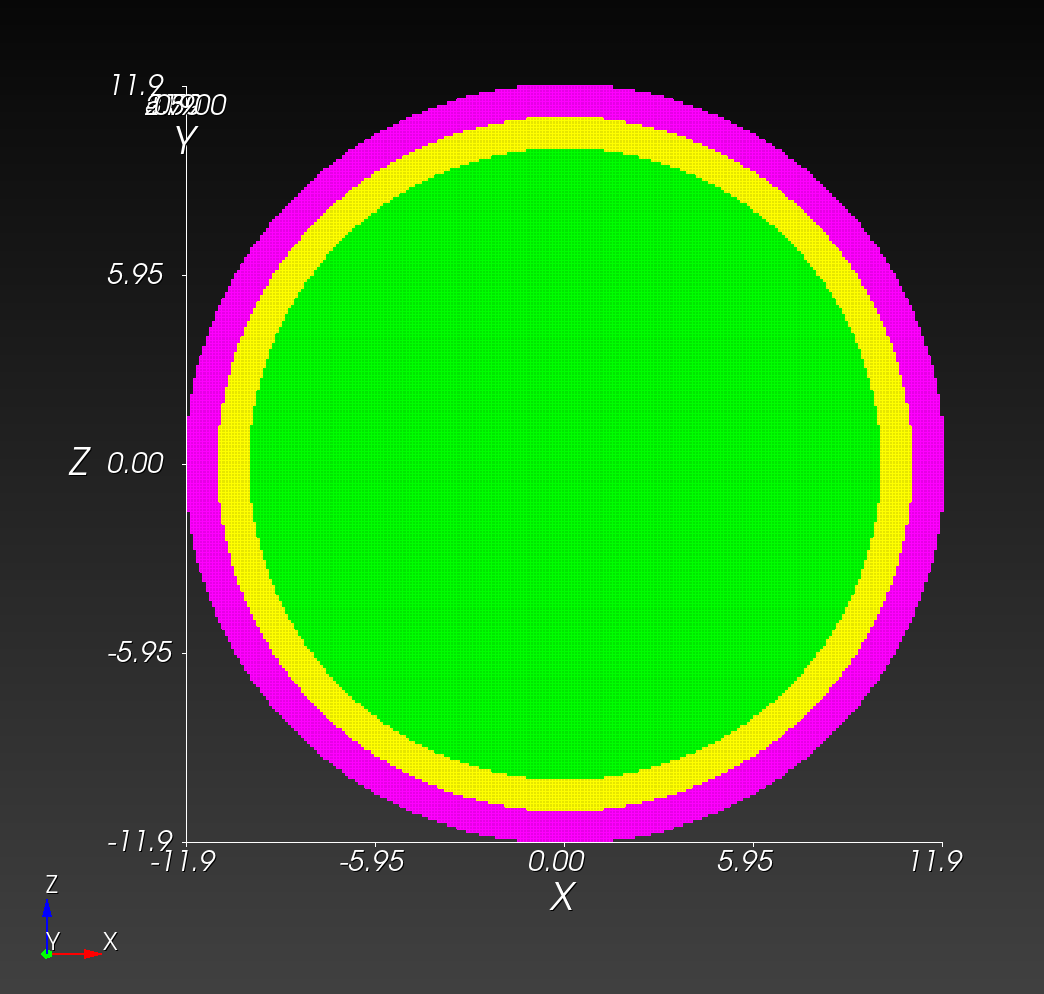

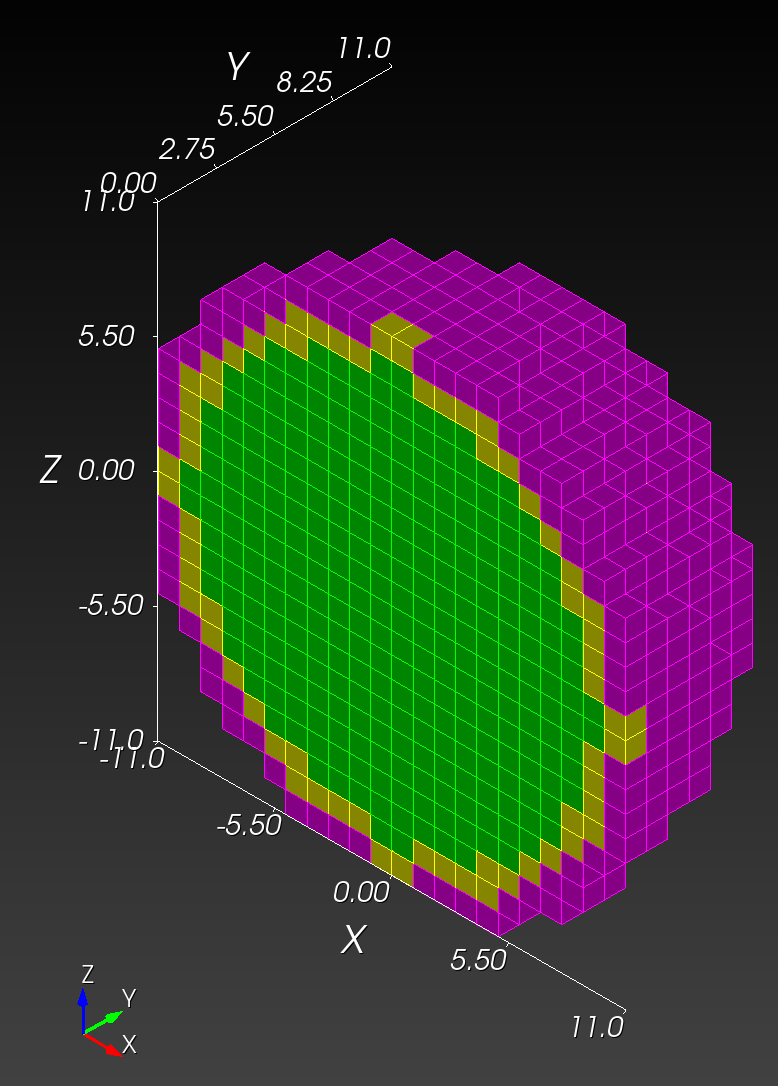

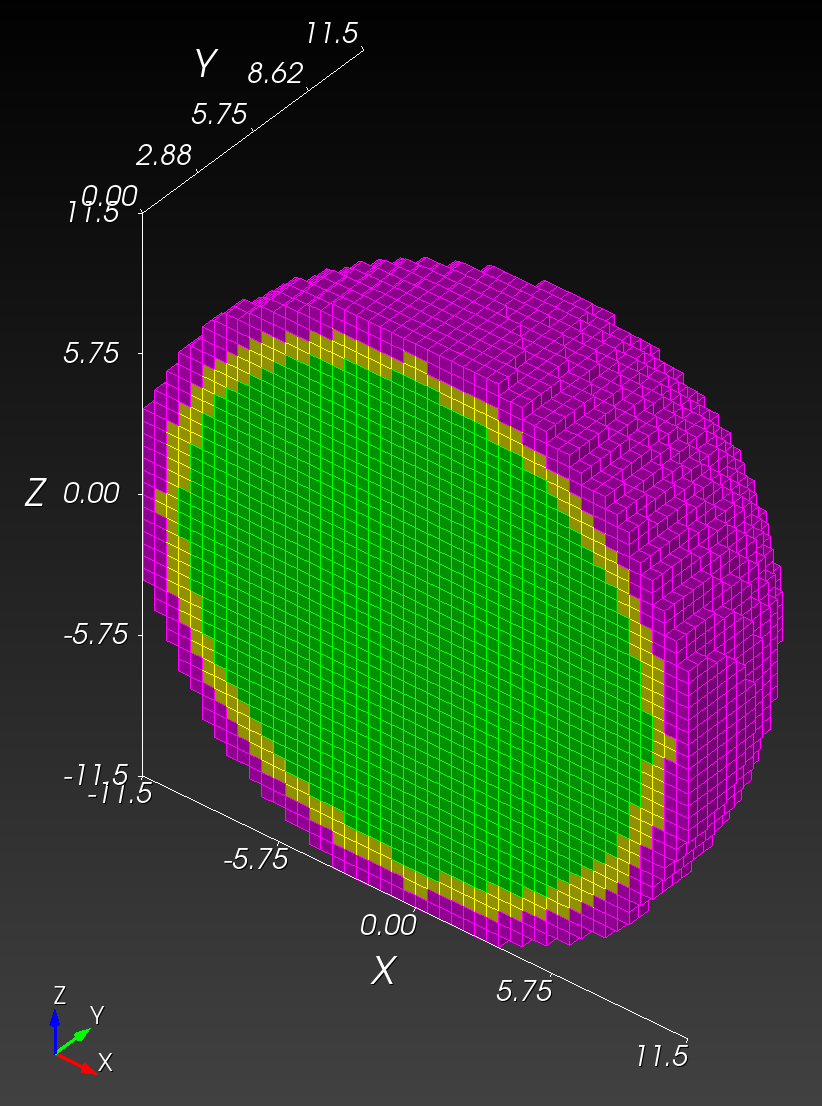

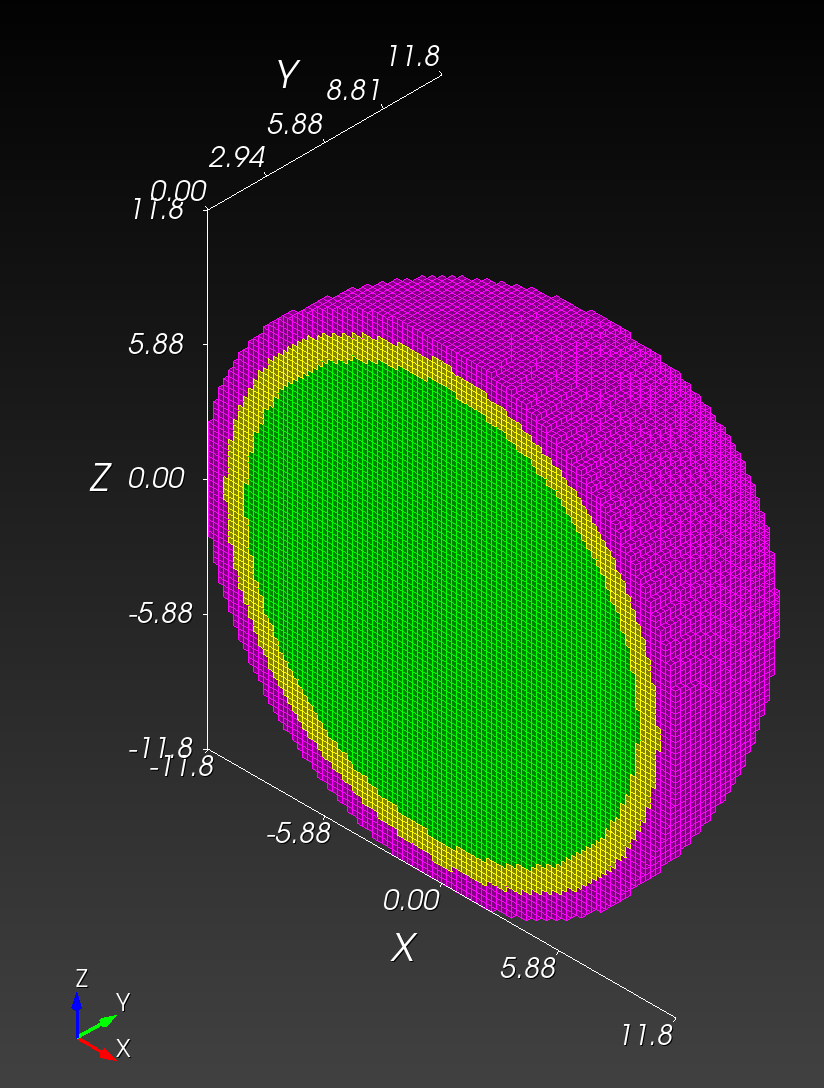

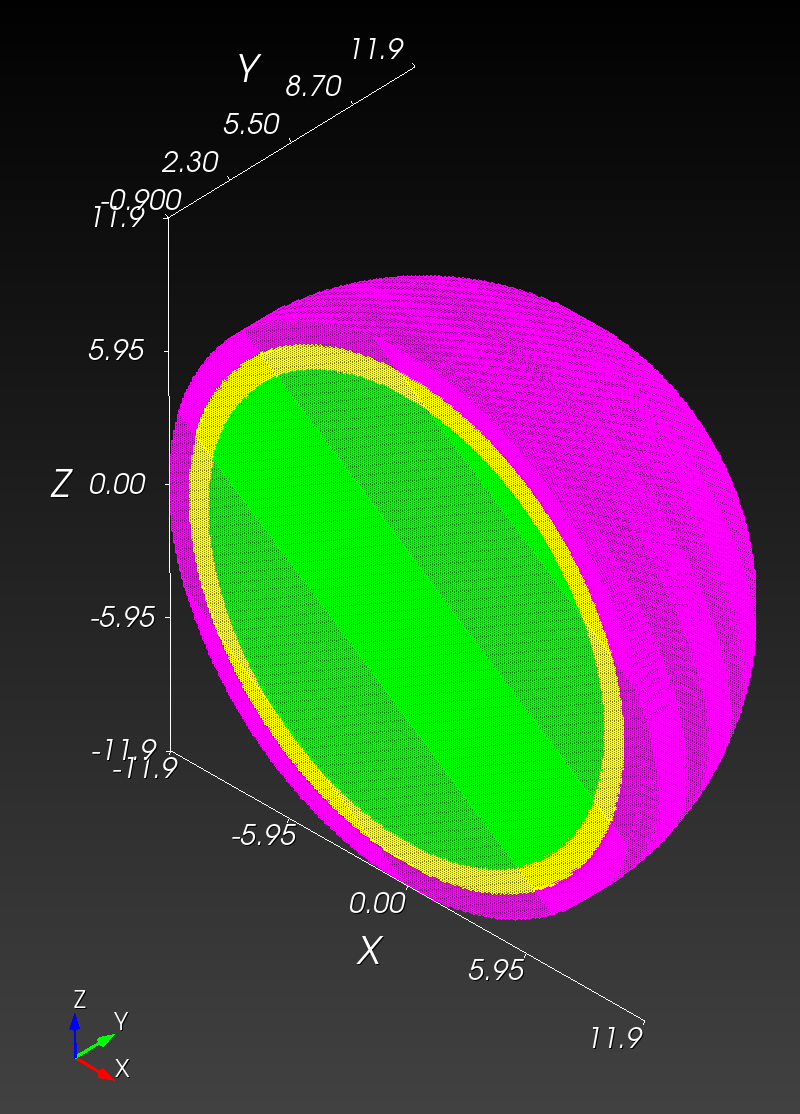

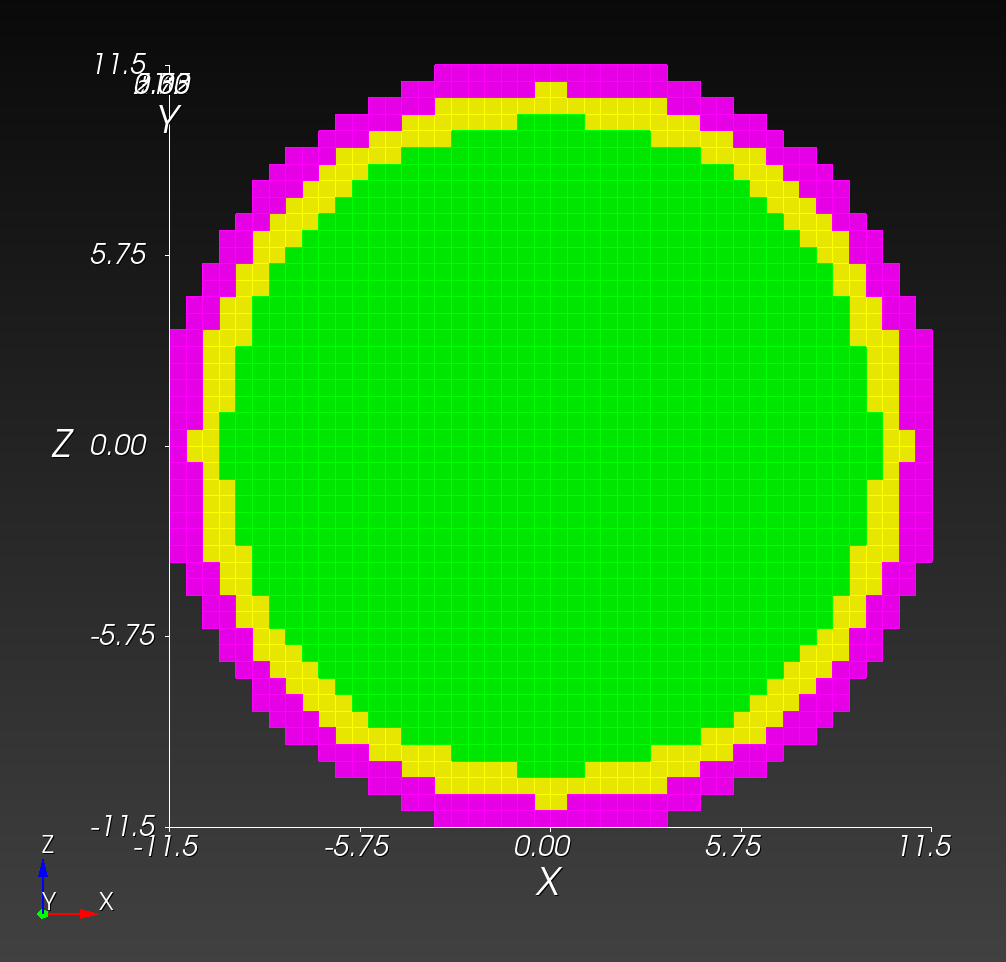

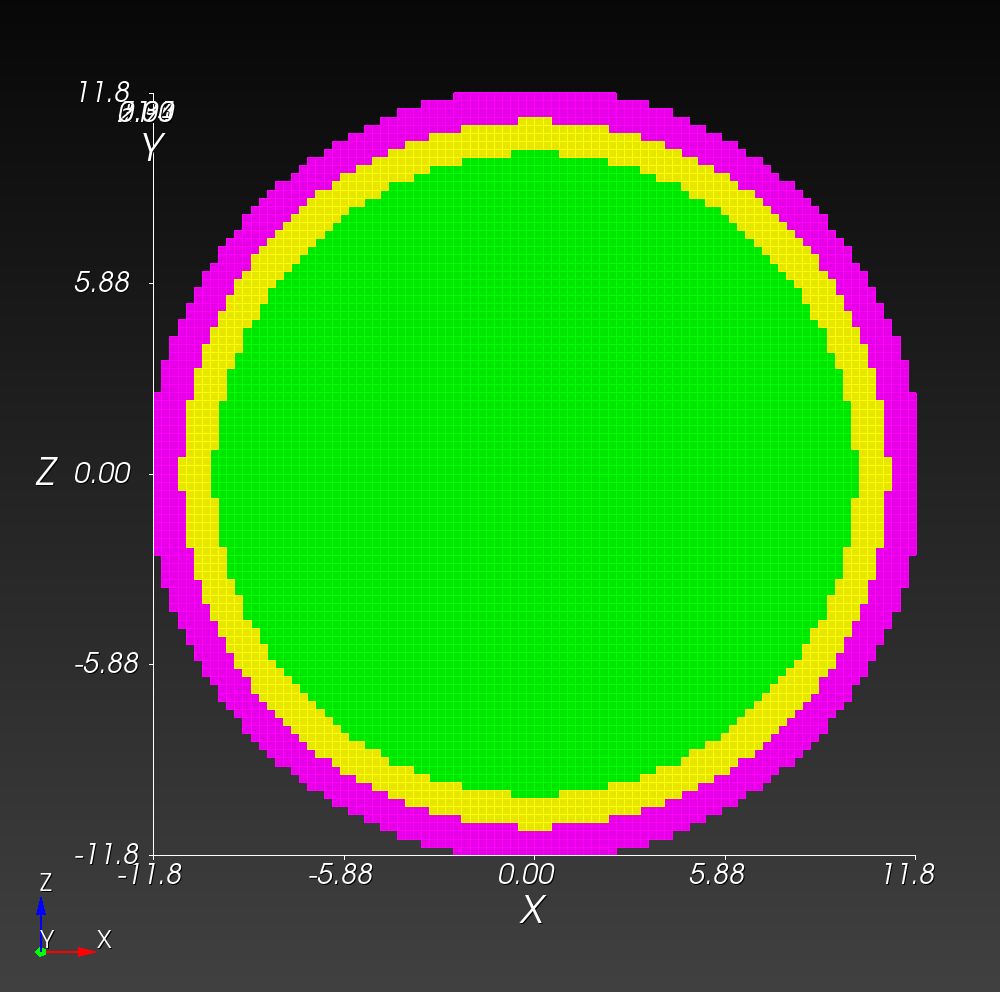

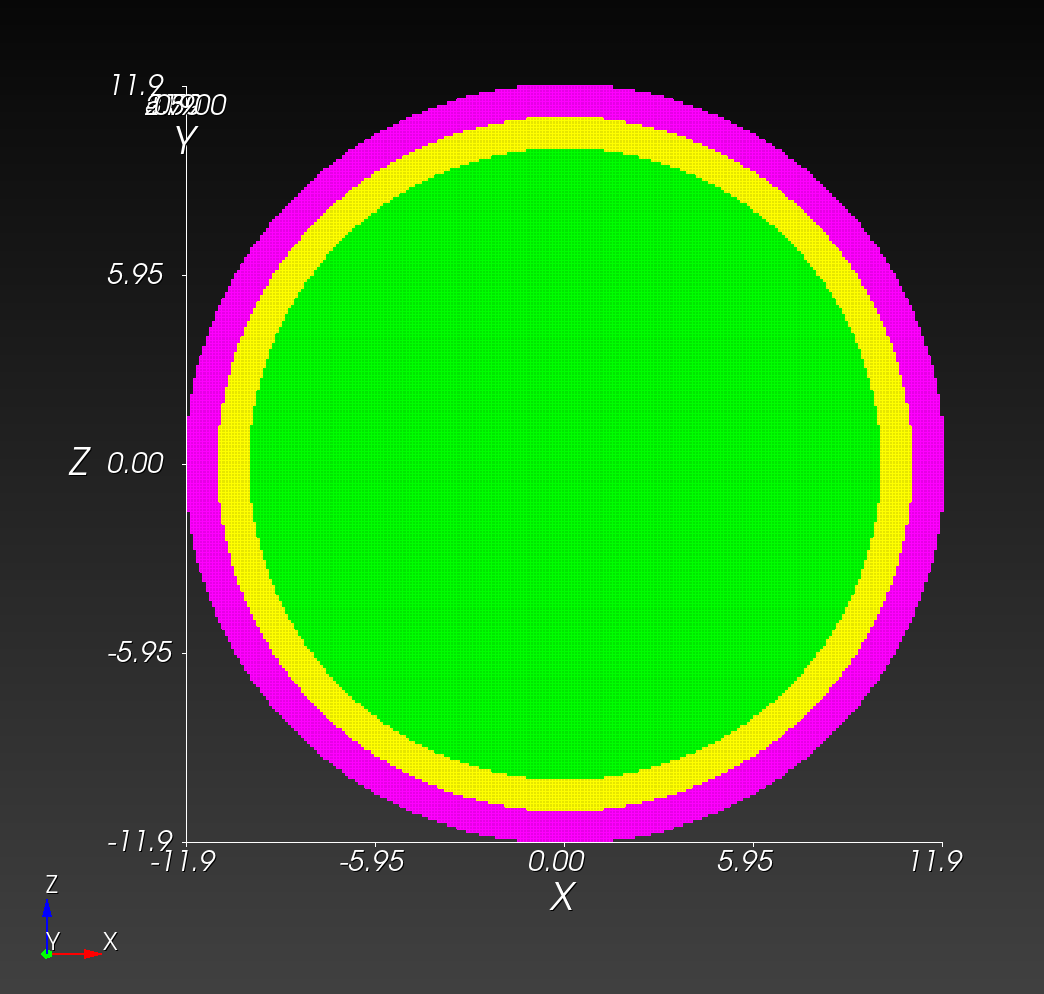

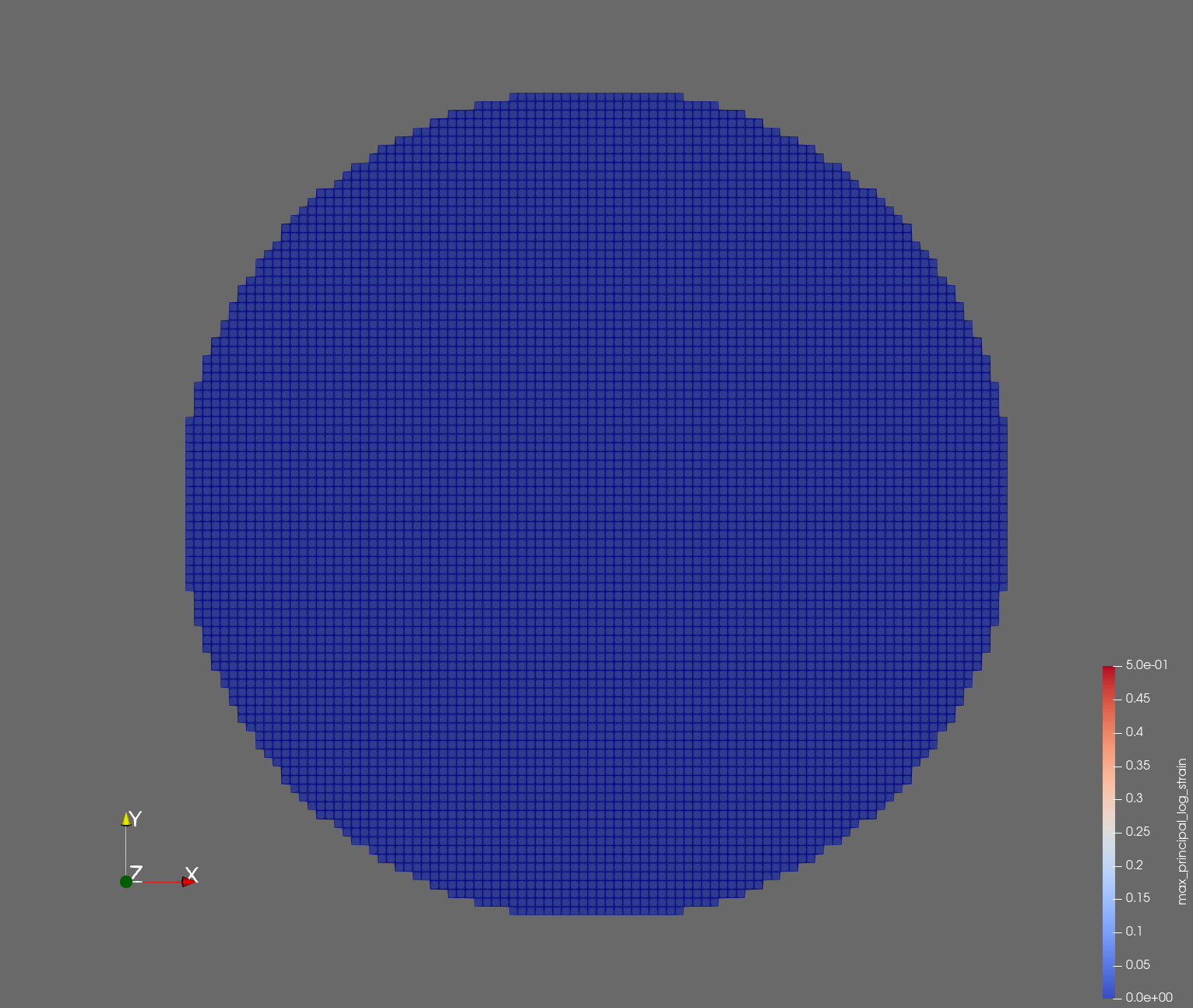

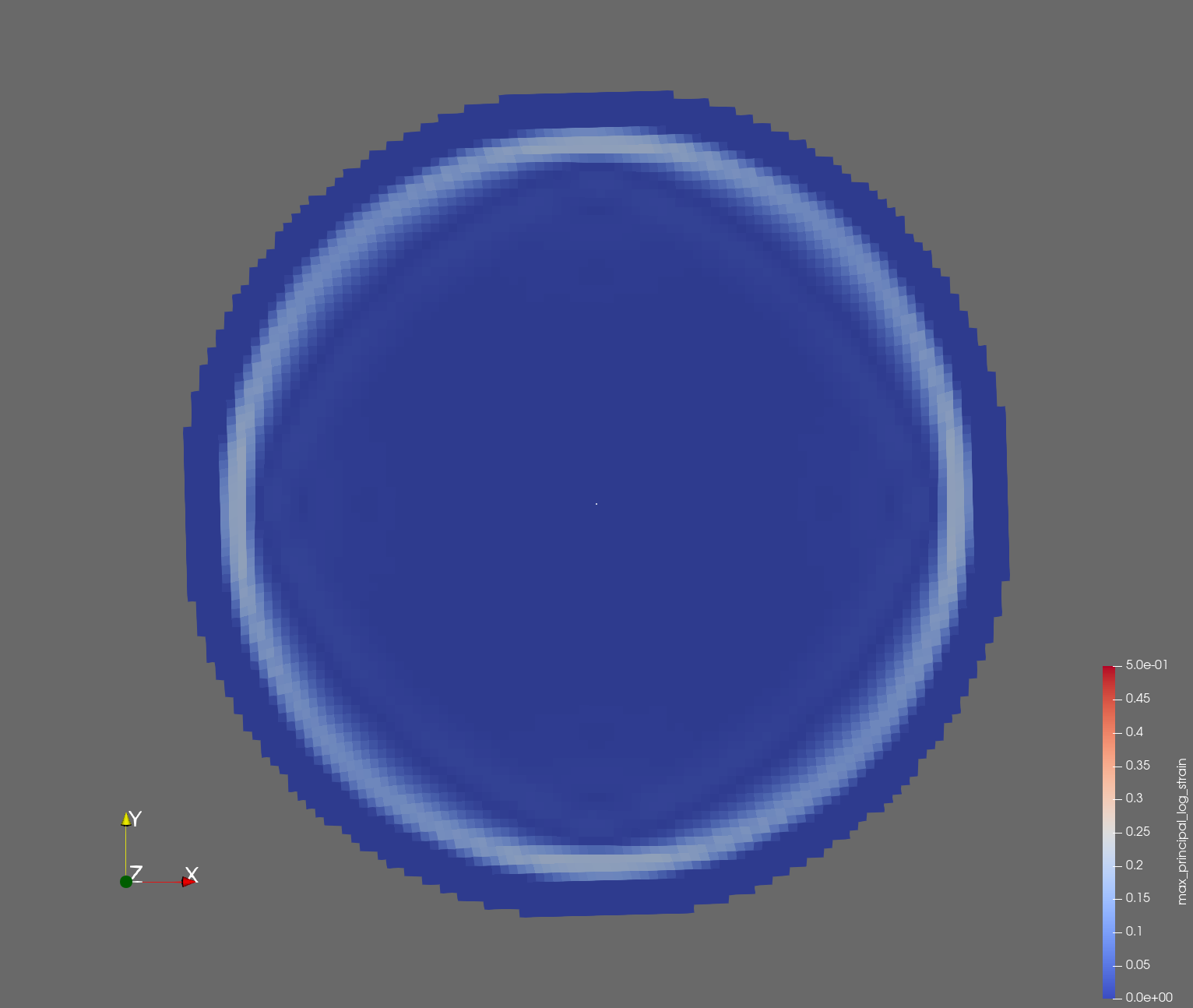

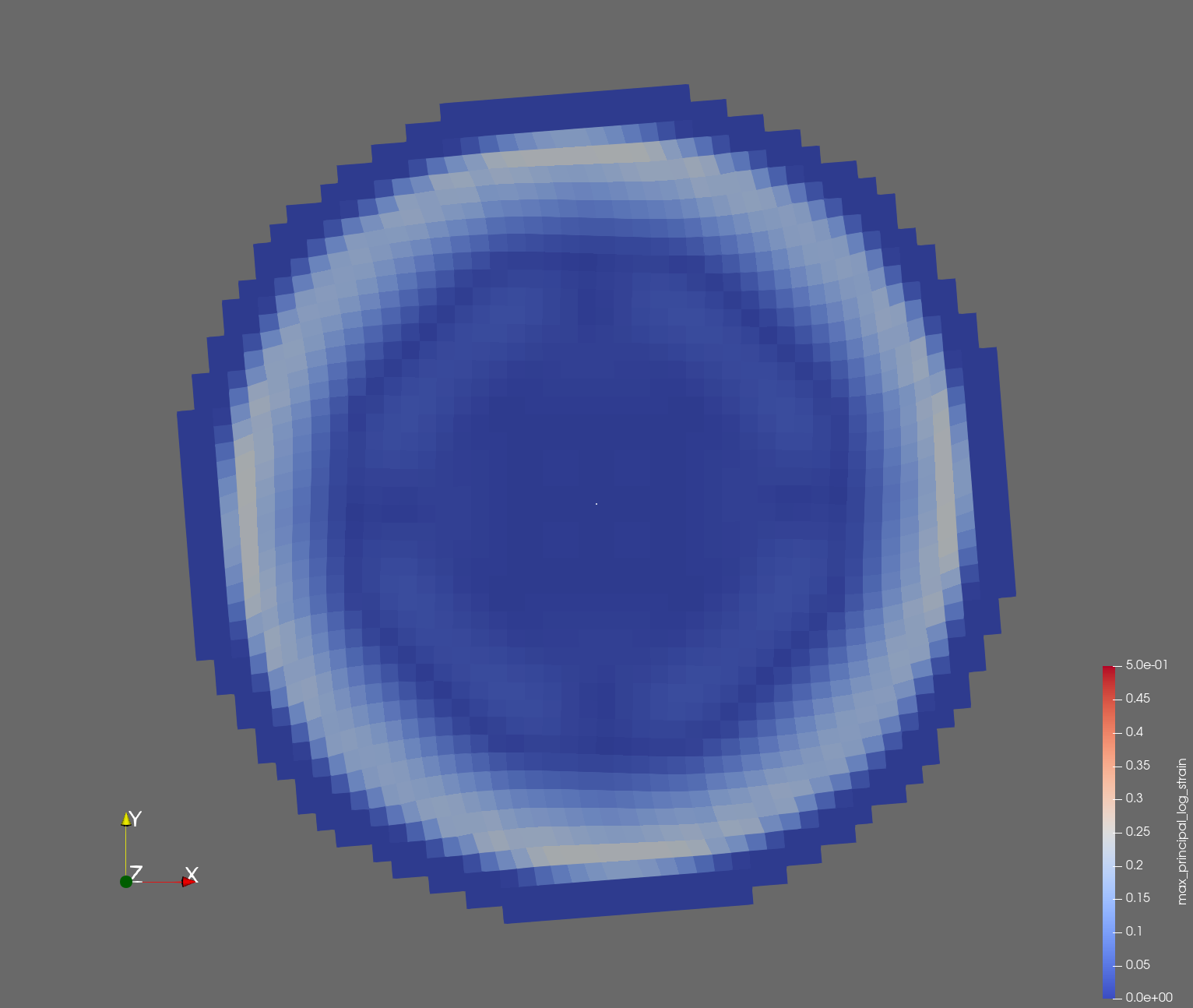

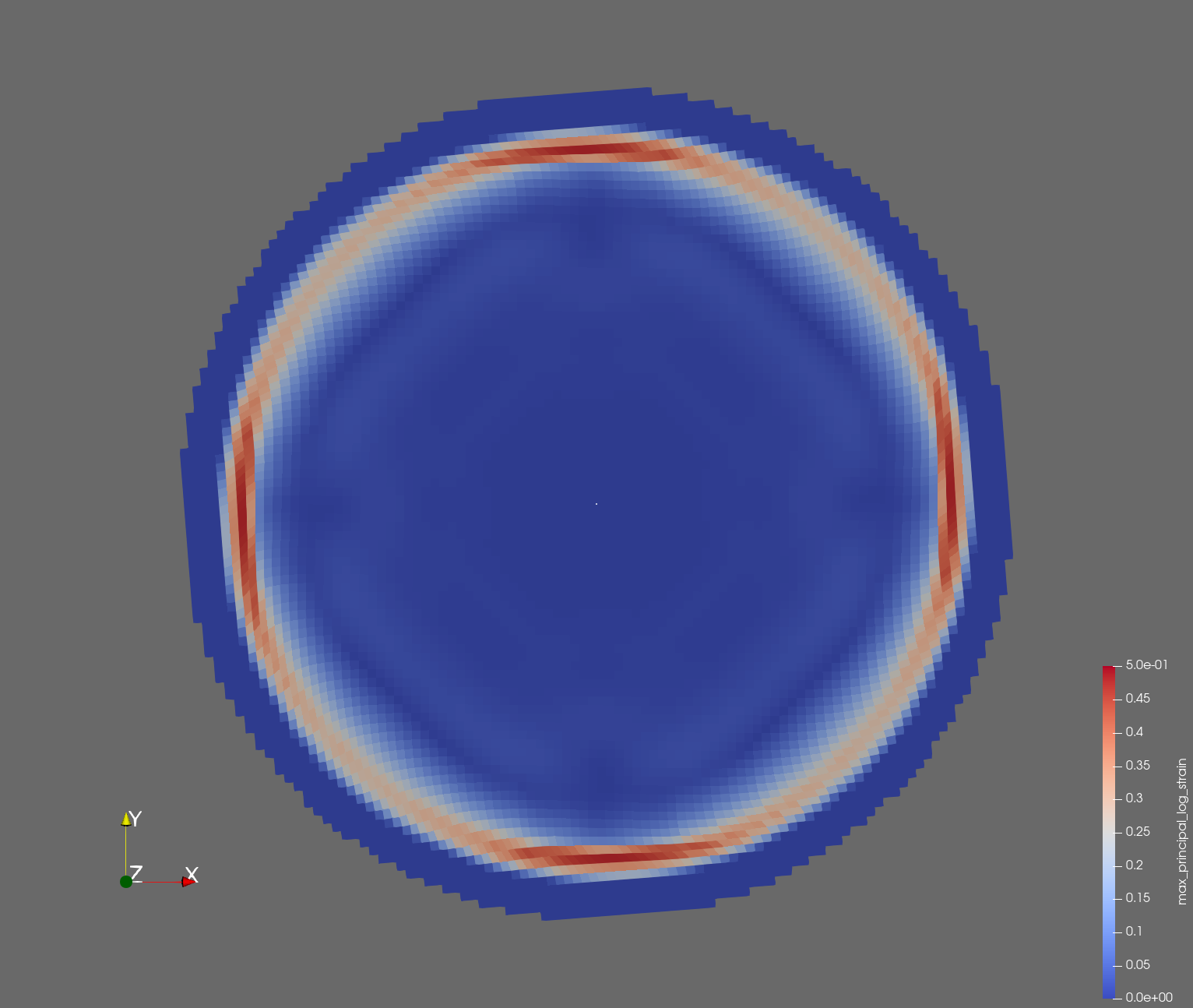

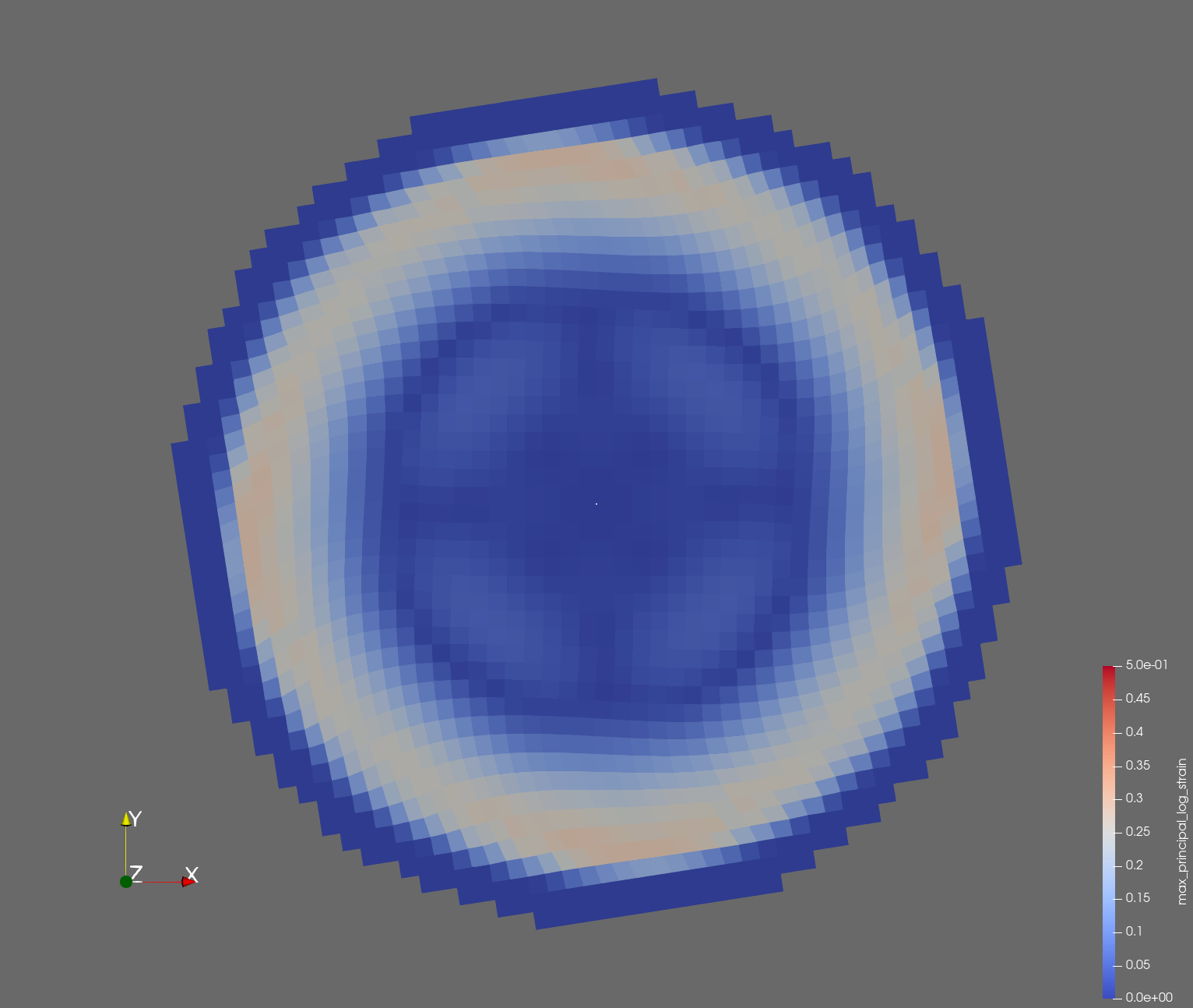

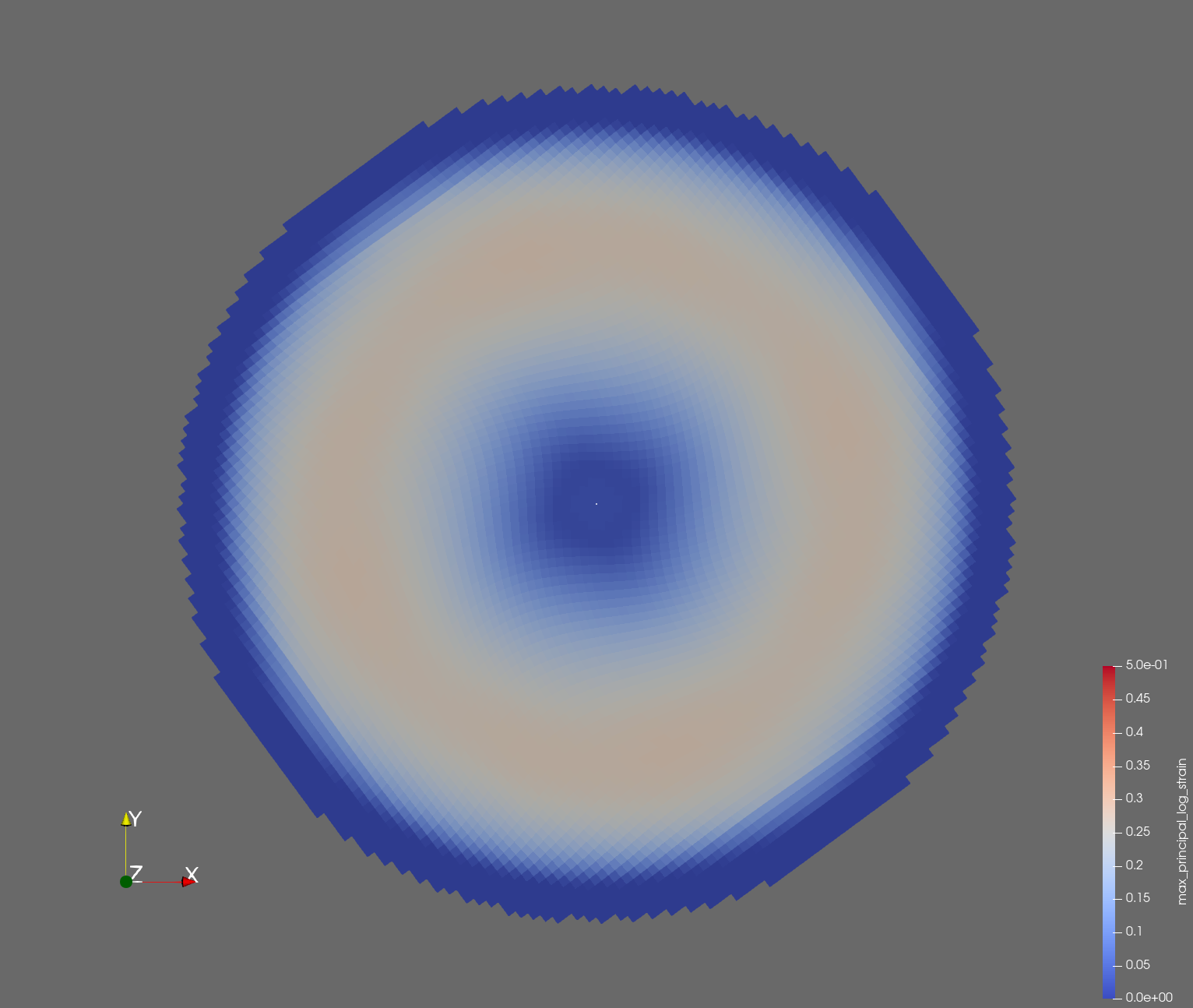

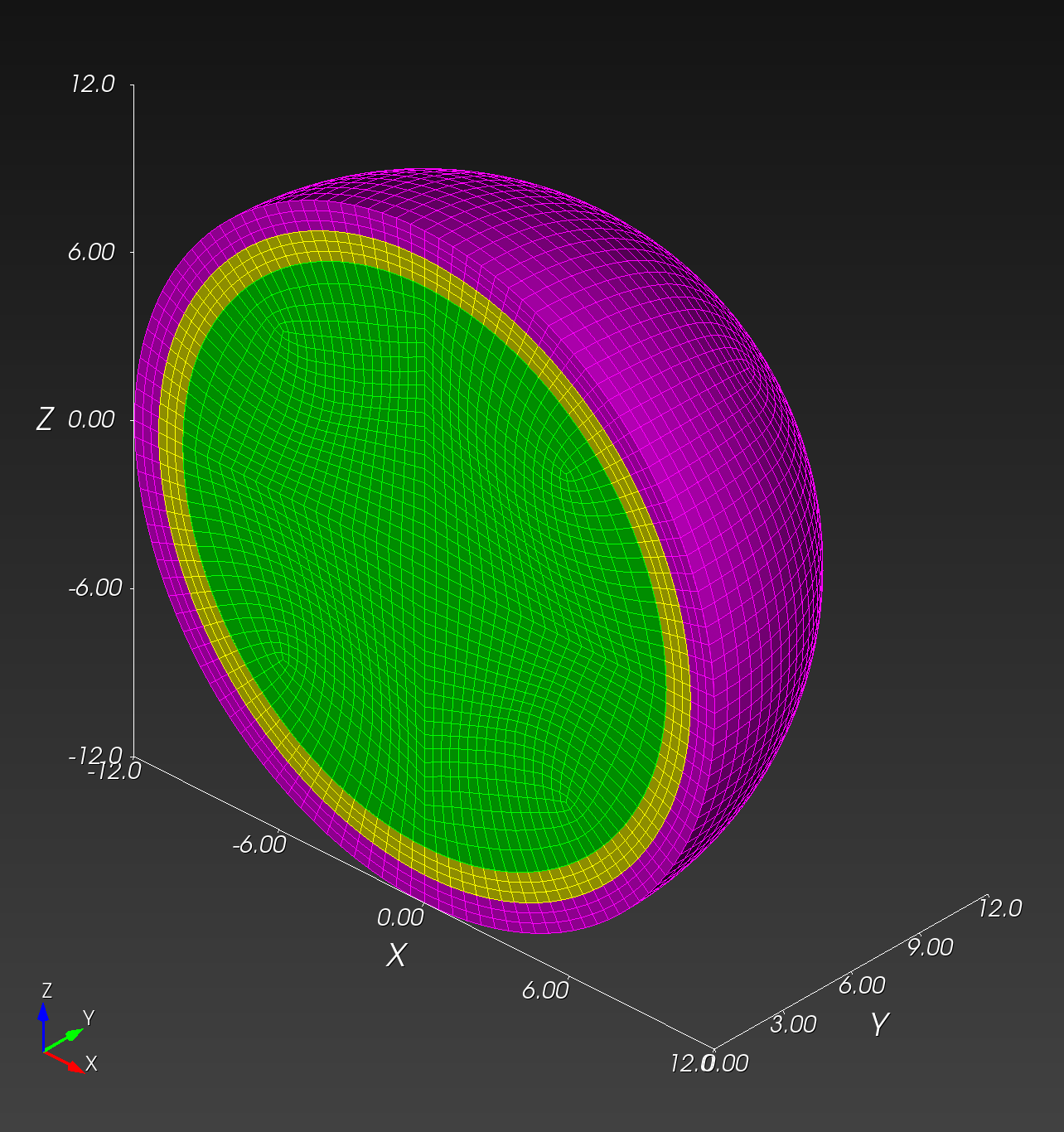

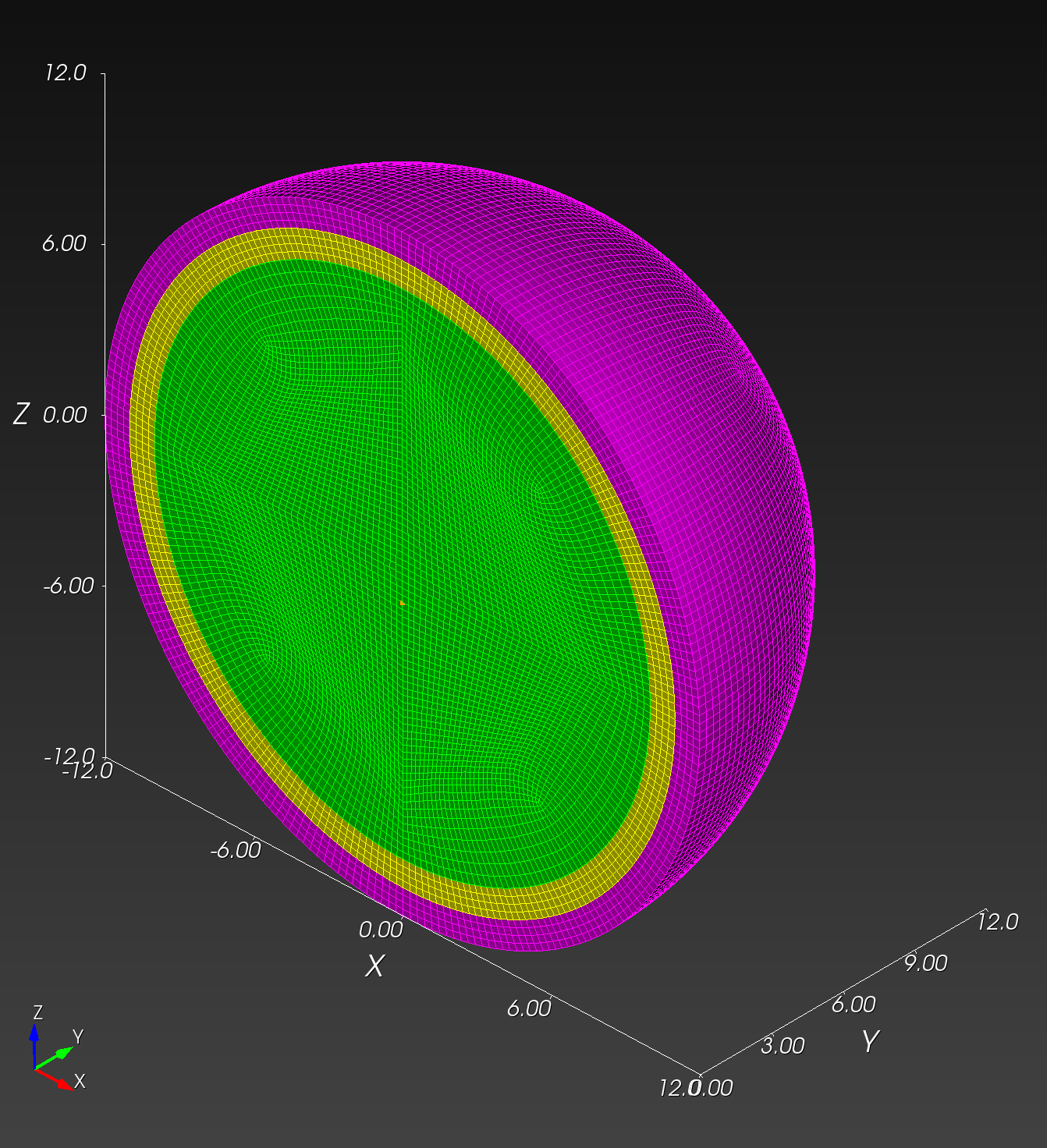

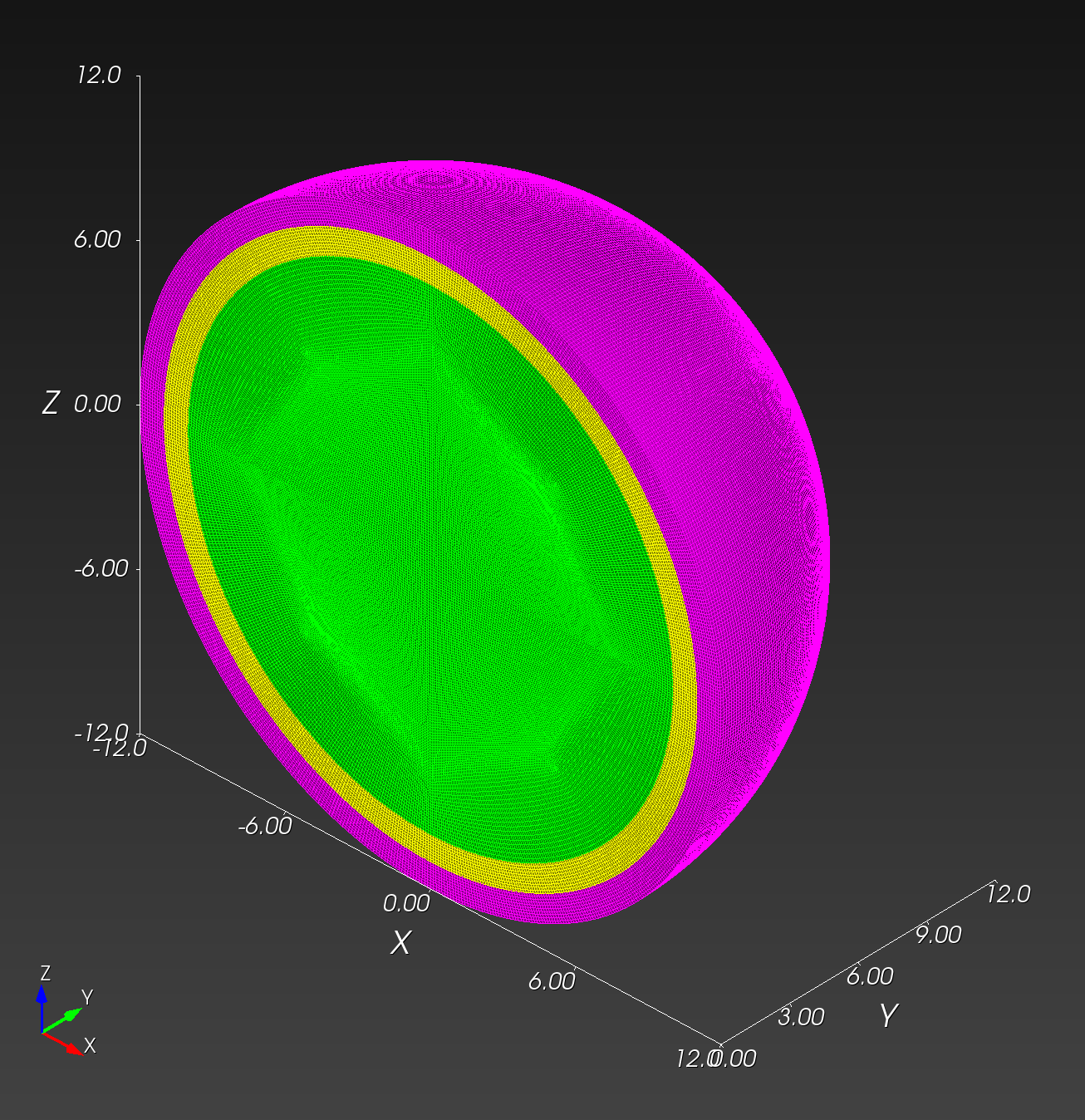

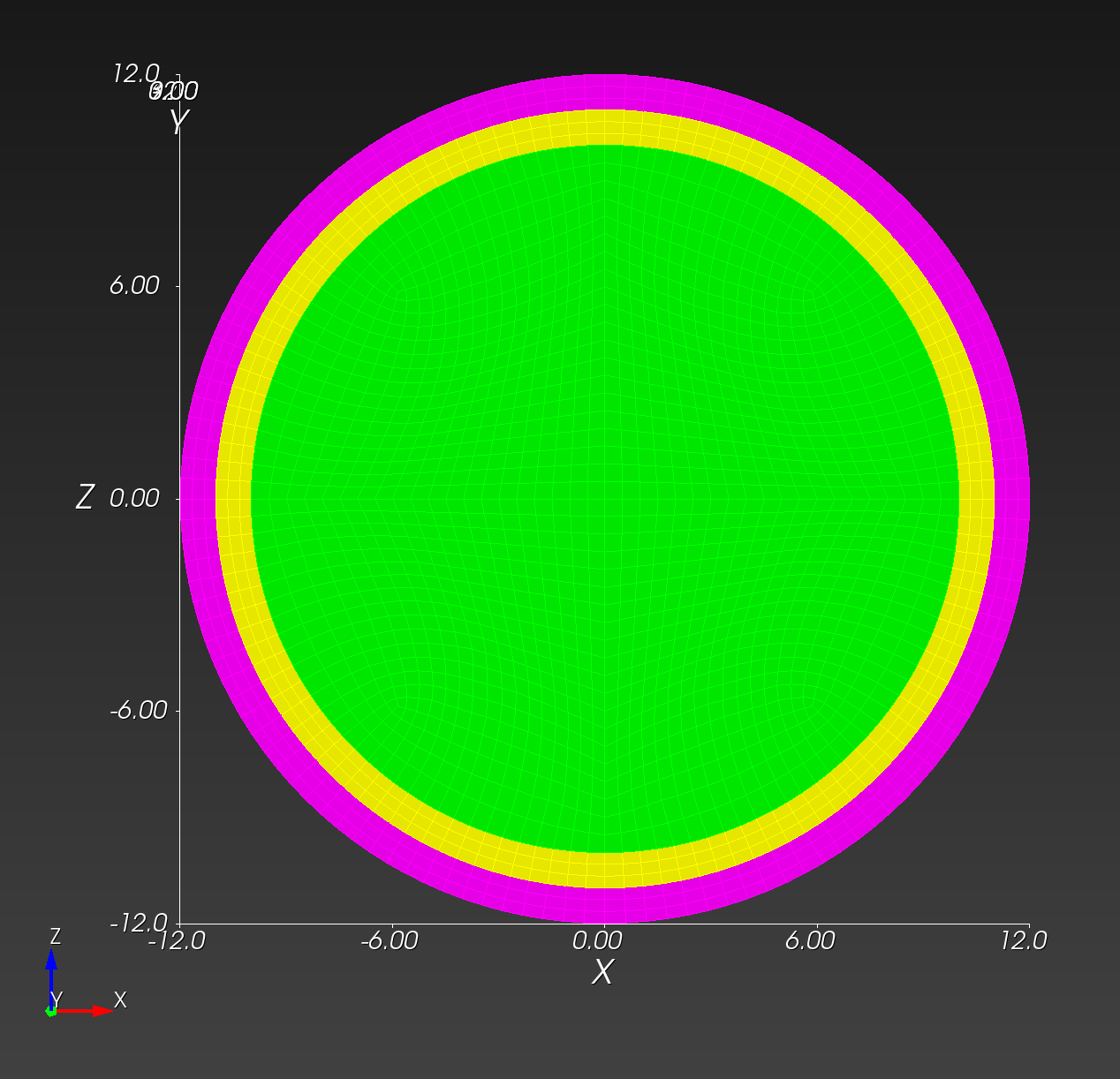

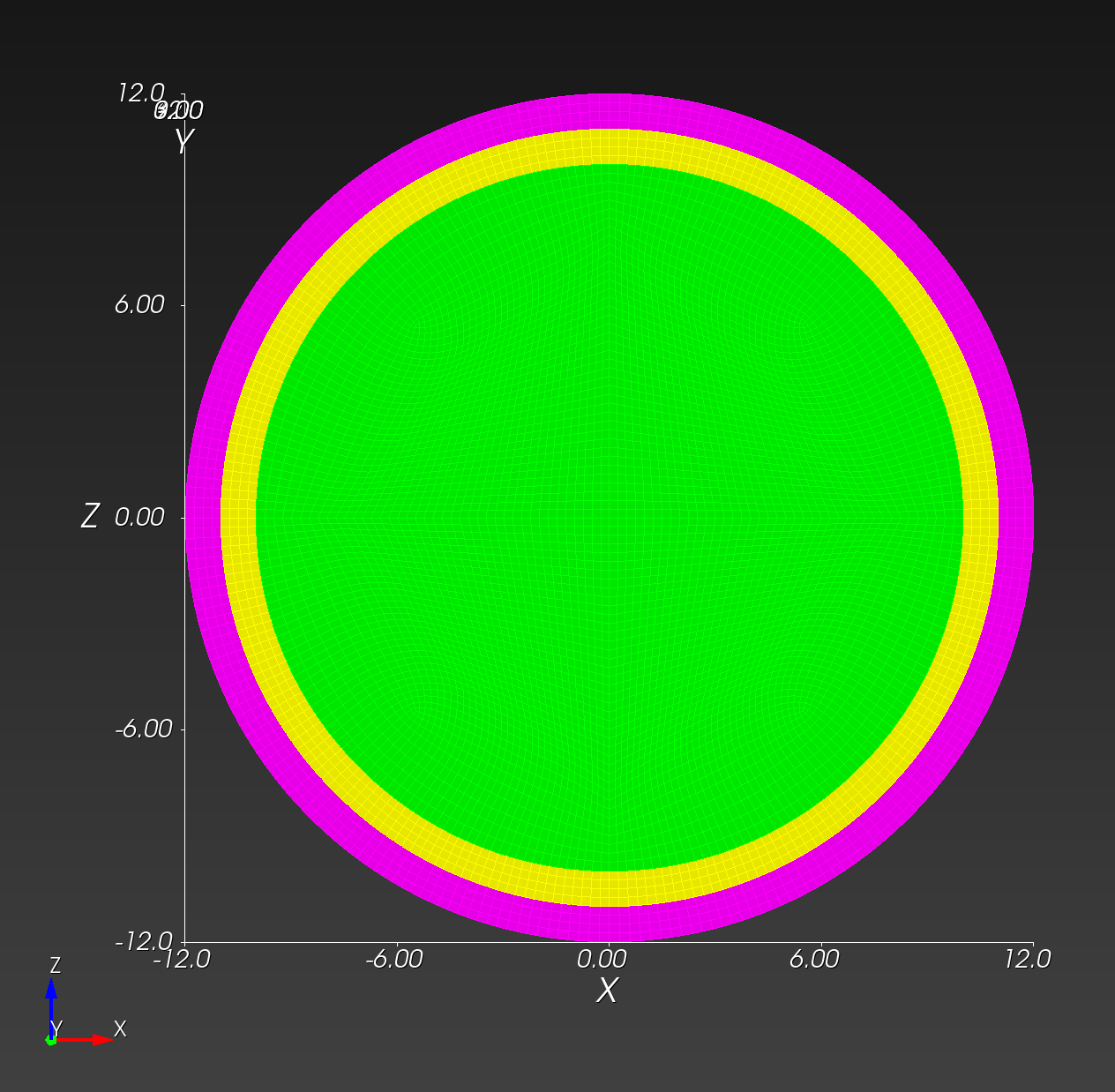

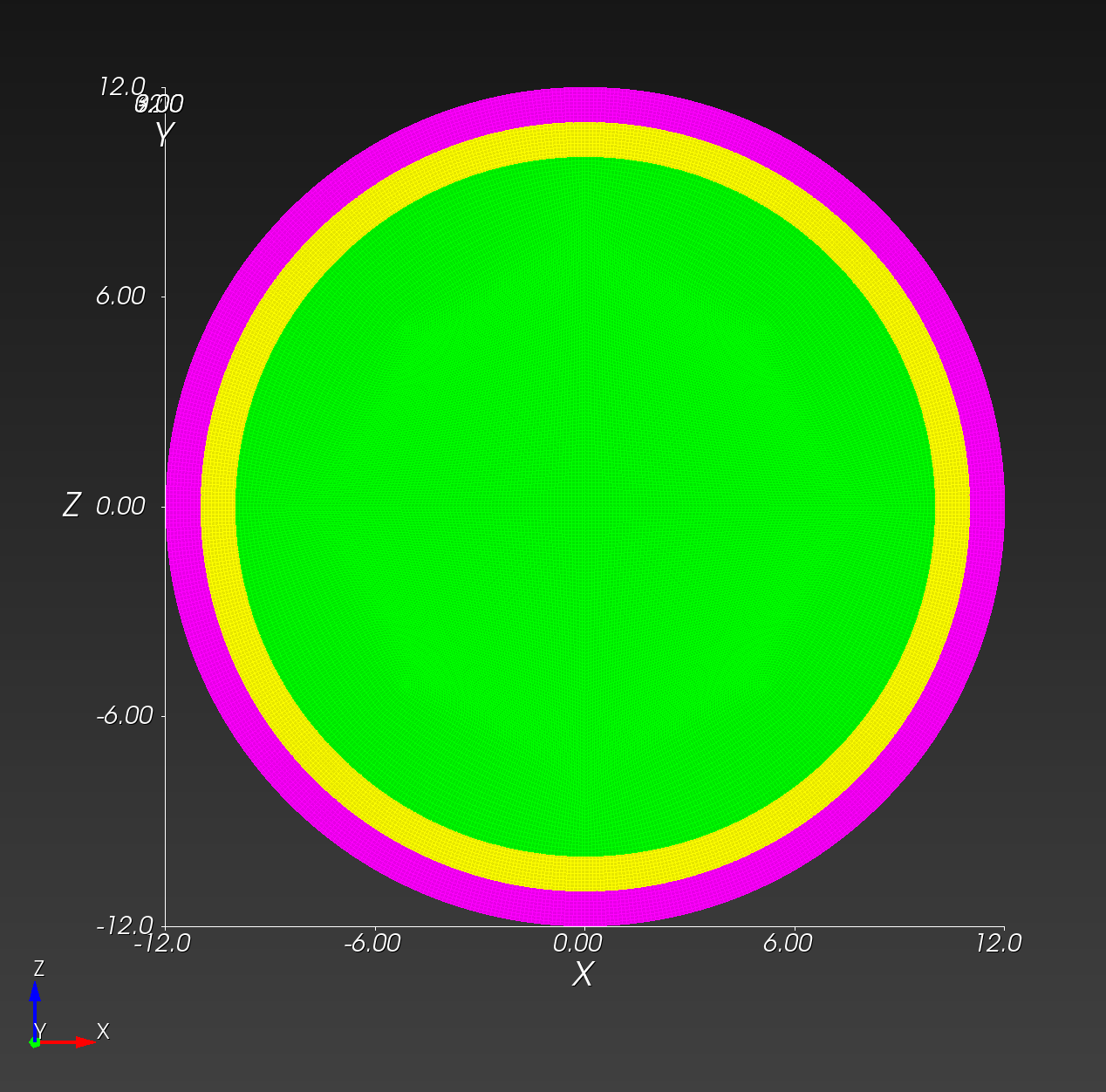

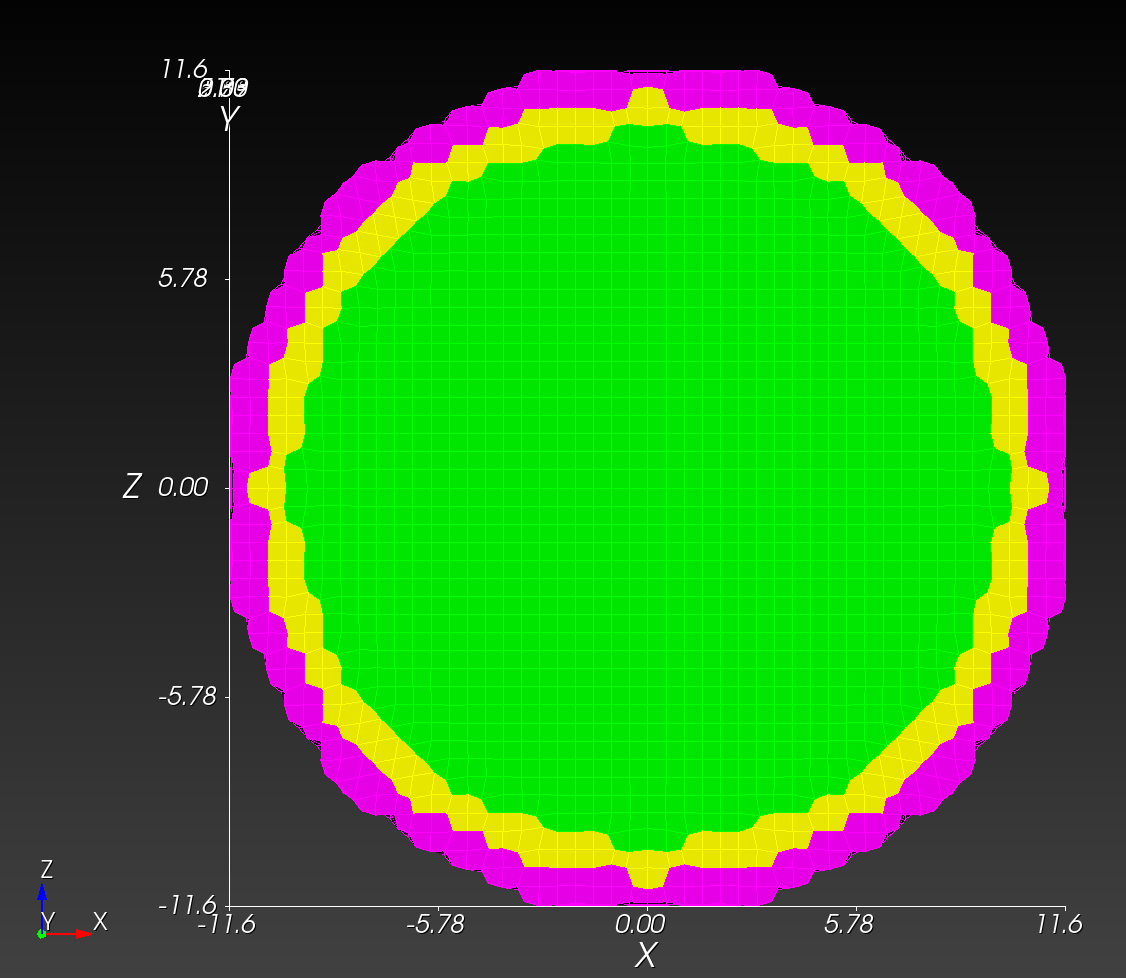

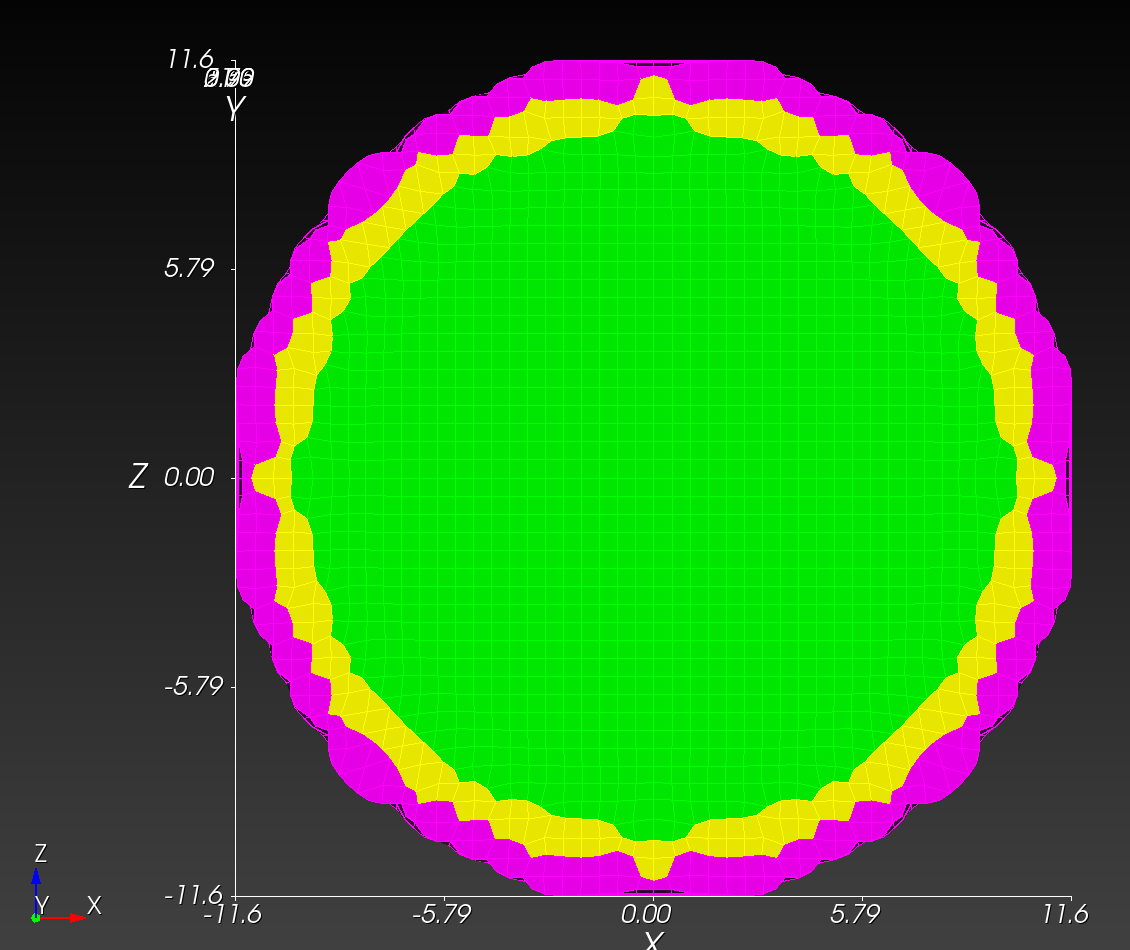

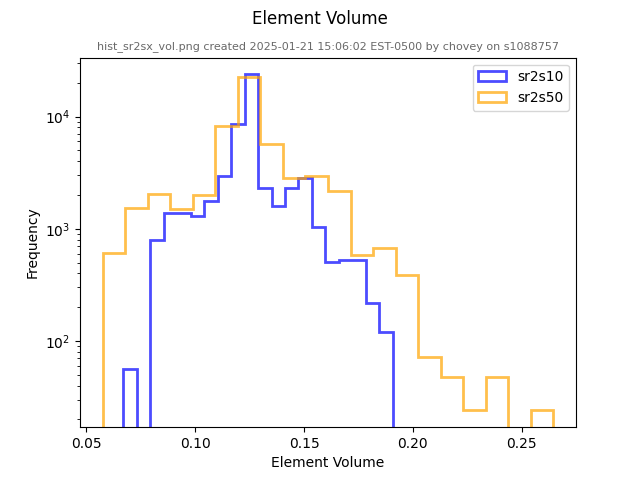

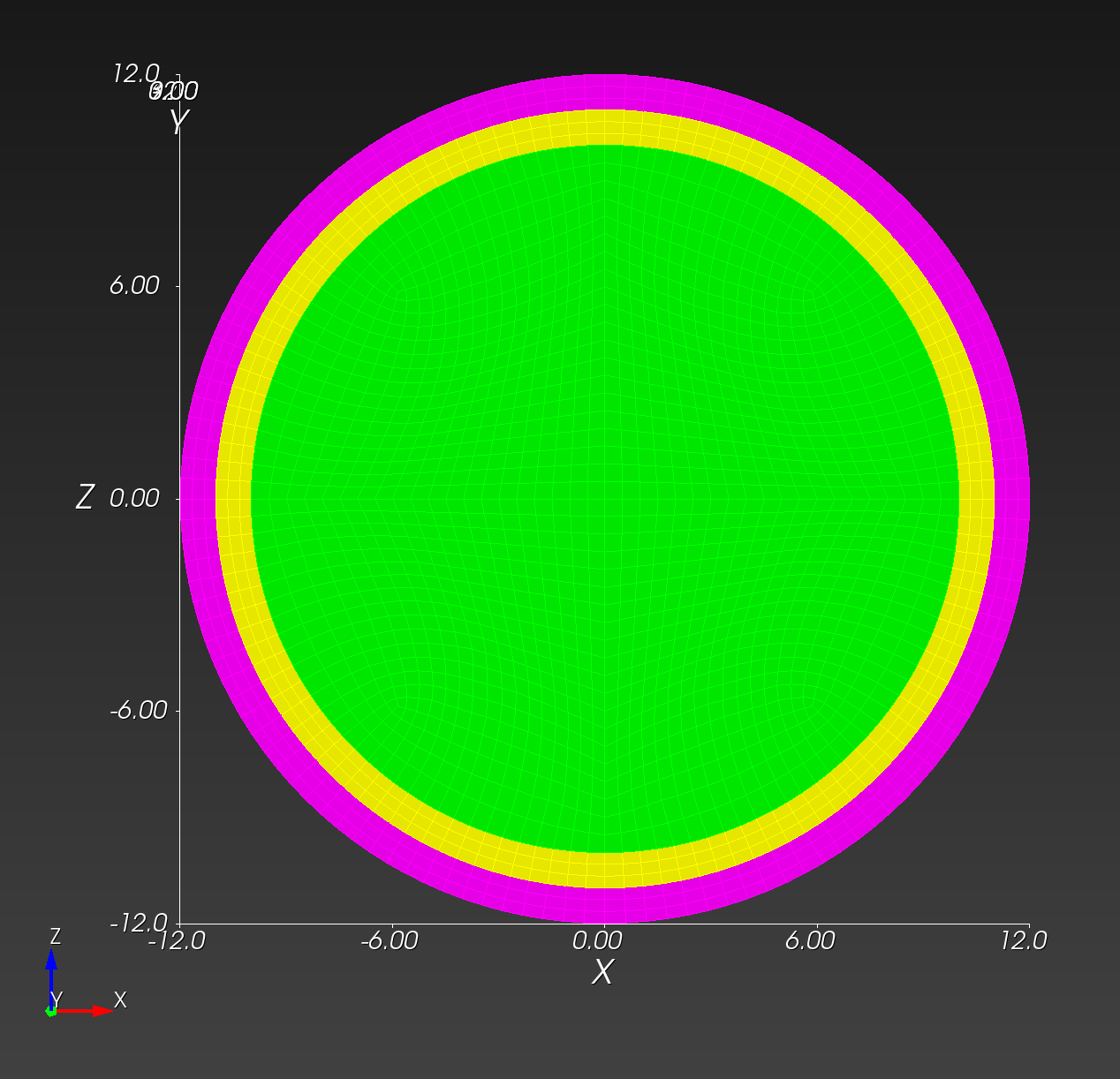

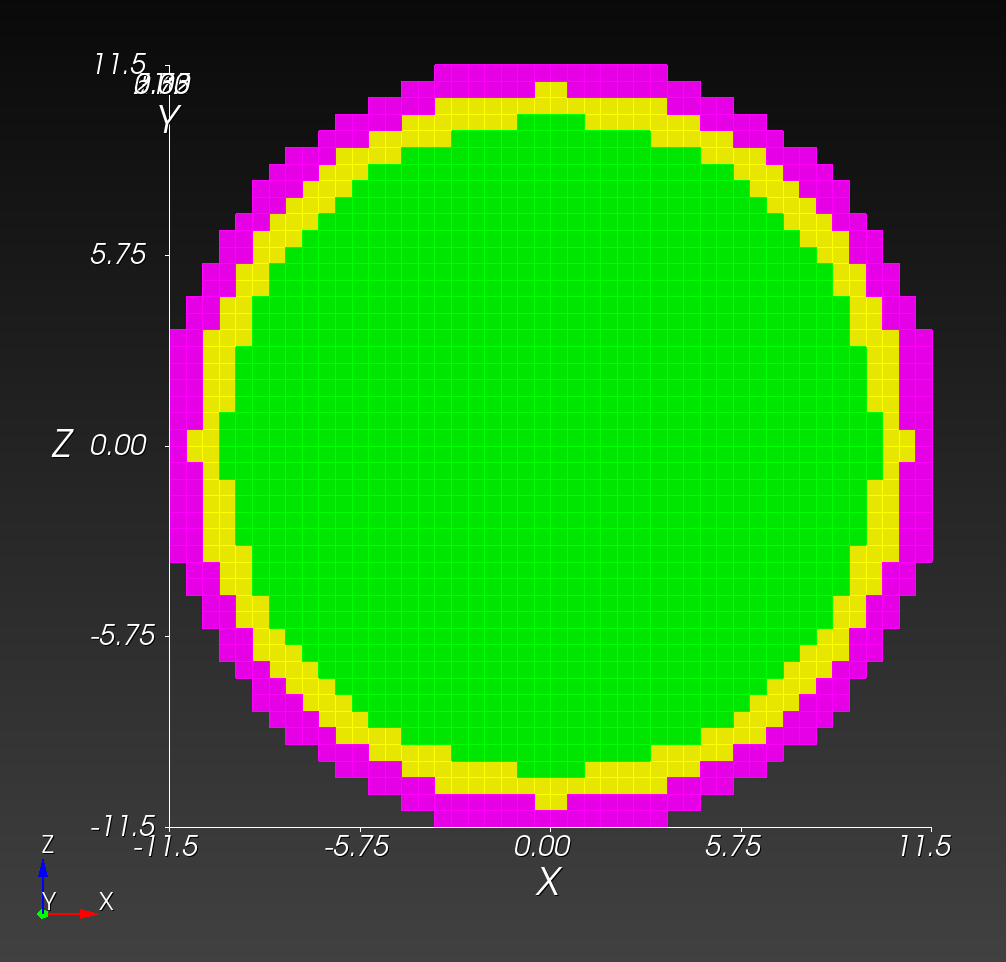

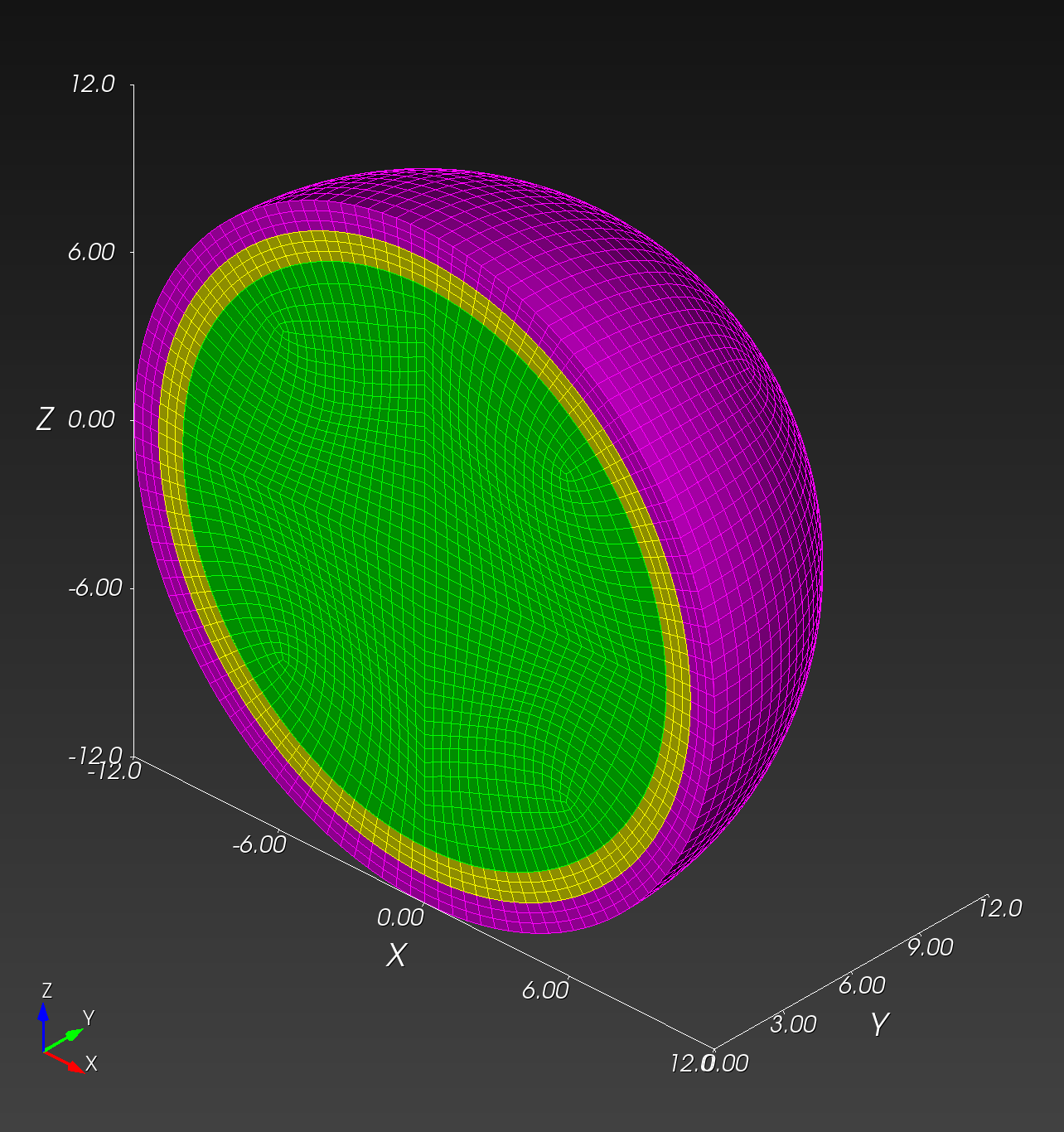

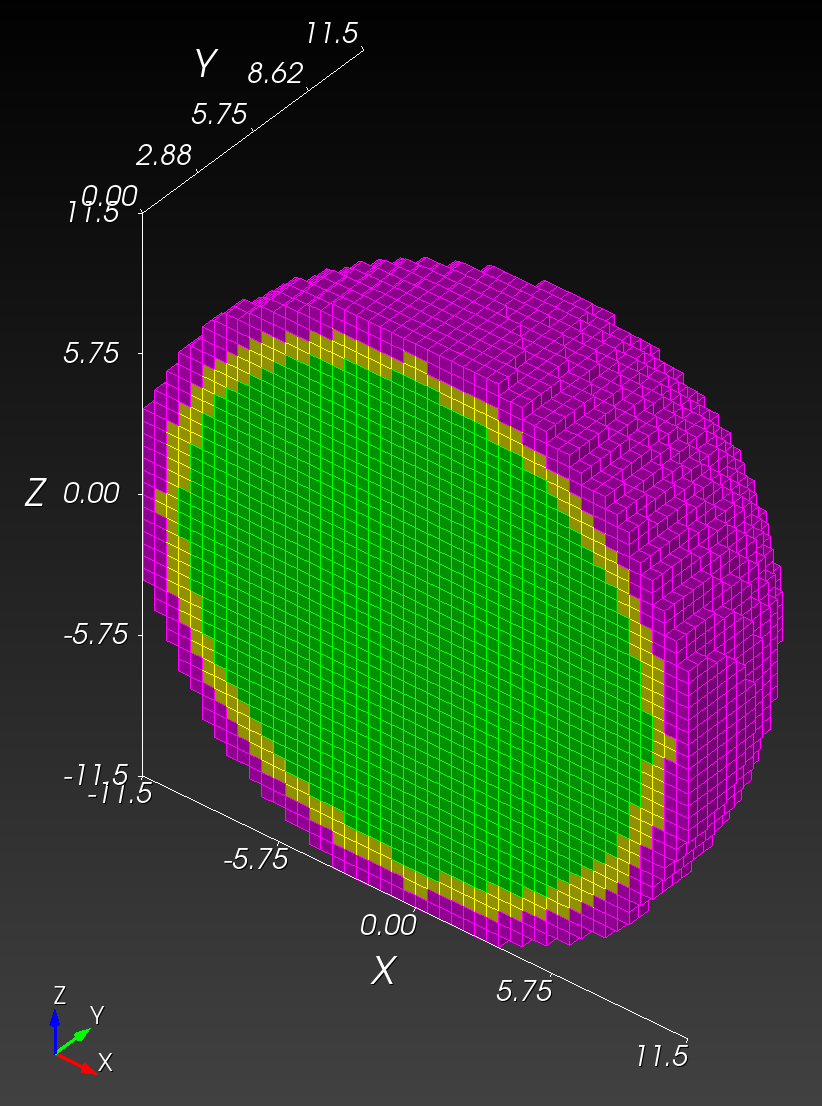

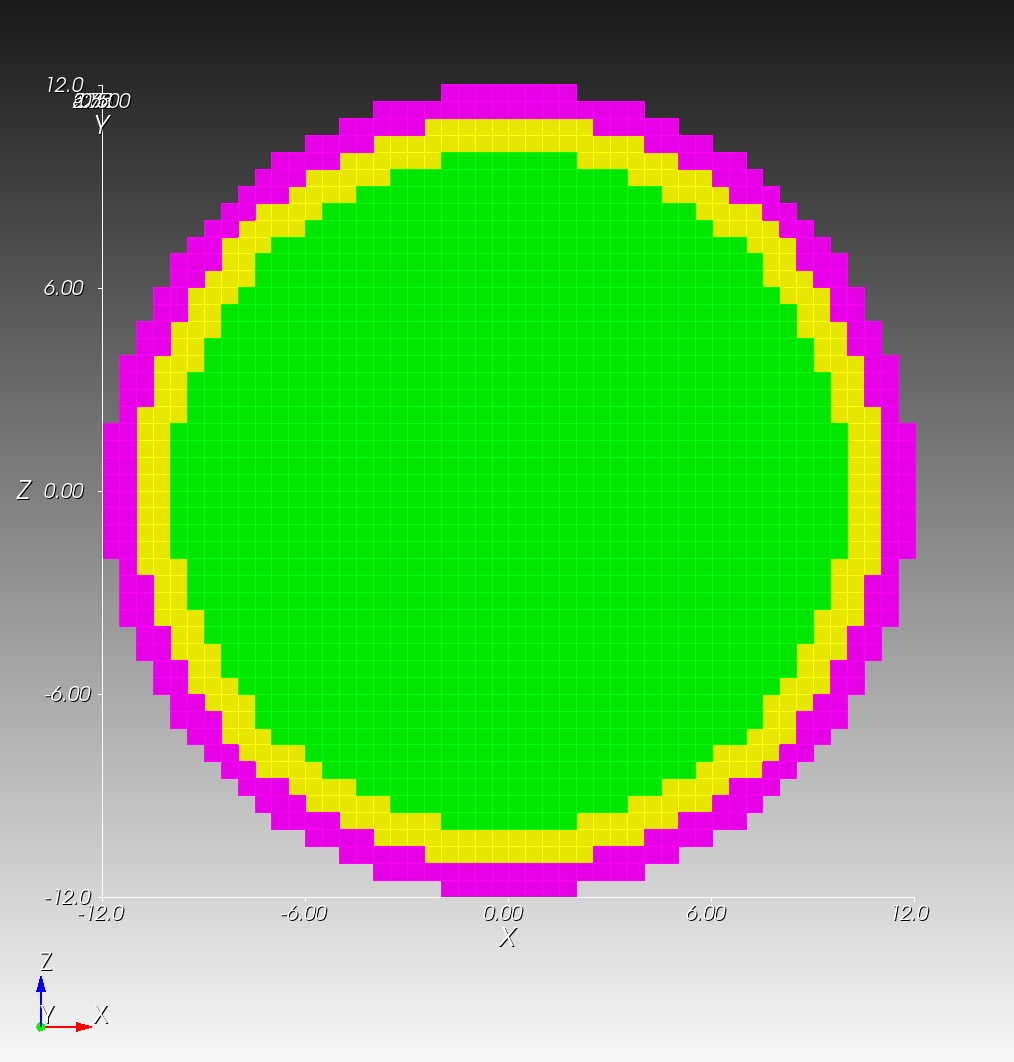

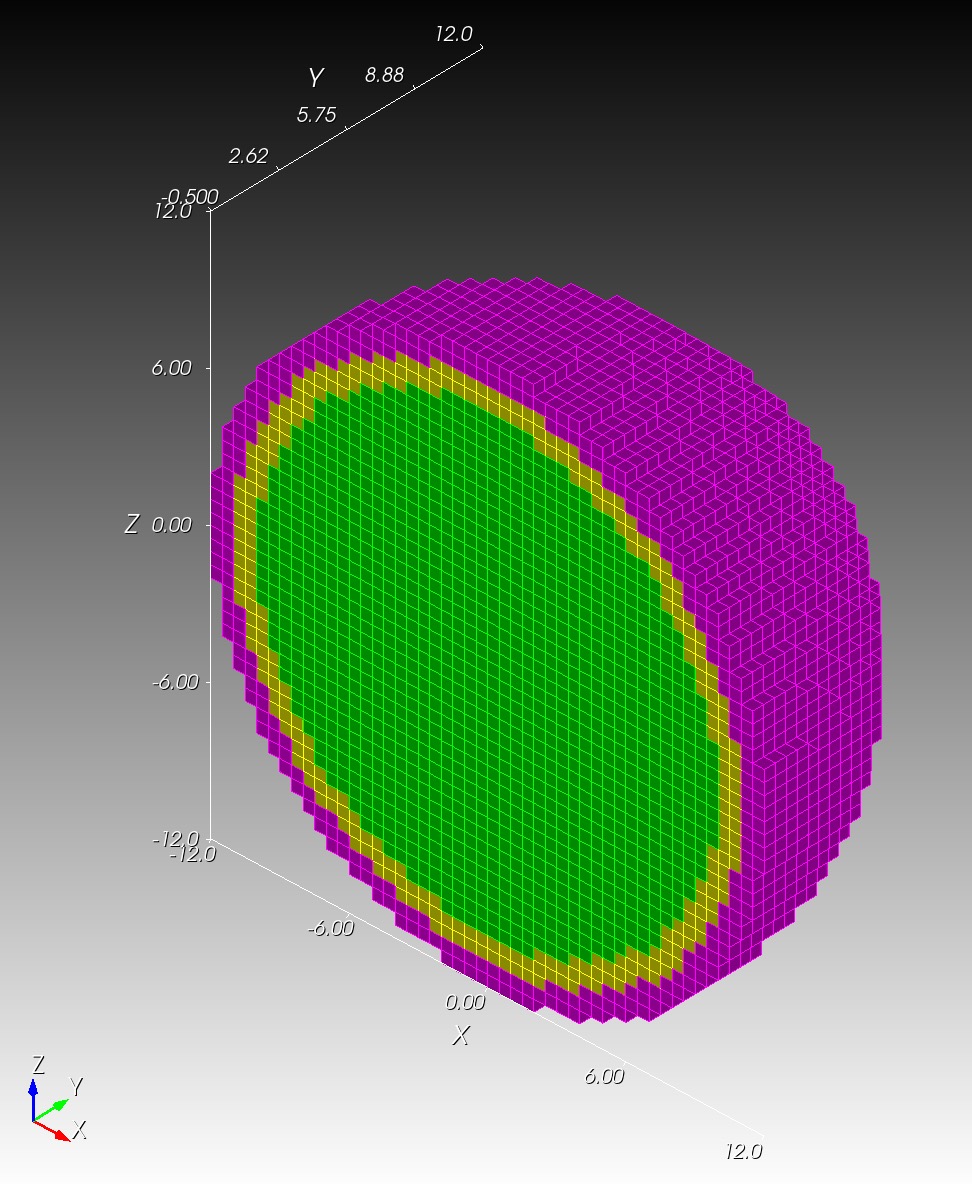

7 x 7 x 7) segmentation can be thought of as a conceptual start point for a process called Loop subdivision, used to produce spherical shapes at higher resolutions. See Octa Loop for additional information. A sphere in resolutions of (24 x 24 x 24) and (48 x 48 x 48), used in the Sphere with Shells section, is shown below:

Segmentation File Types

Two segmentation file types are supported: .spn and .npy.

The .spn file can be thought of as the most elementary segmentation file type because it is

saved as an ASCII text file and is therefore readily human-readable.

Below is an abbreviated and commented .spn segmentation of the (7 x 7 x 7) octahedron

discussed above.

0 # slice 1, row 1

0

0

0

0

0

0

0 # slice 1, row 2

0

0

0

0

0

0

0 # slice 1, row 3

0

0

0

0

0

0

0 # slice 1, row 4

0

0

3

0

0

0

0 # slice 1, row 5

0

0

0

0

0

0

0 # slice 1, row 6

0

0

0

0

0

0

0 # slice 1, row 7

0

0

0

0

0

0

# ... and so on for the remaining six slices

A disadvantage of .spn is that it can become difficult to keep track of data

slice-by-slice. Because it is a text file rather than a binary file, the .spn typically has a

larger file size than the equivalent .npy.
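The size difference can be checked with a small experiment. The sketch below writes a hypothetical (7 x 7 x 7) array both as a binary .npy file and as a text file with one integer per line; the file names are illustrative, and the flattening order used for the text file is arbitrary because it does not affect the file size.

```python
import os
import tempfile

import numpy as np

# A hypothetical 7 x 7 x 7 segmentation with single-digit labels.
segmentation = np.zeros((7, 7, 7), dtype=np.uint8)
segmentation[3, 3, 3] = 1

with tempfile.TemporaryDirectory() as folder:
    npy_path = os.path.join(folder, "example.npy")
    txt_path = os.path.join(folder, "example.spn")

    # Binary NumPy file: a short header plus one byte per voxel.
    np.save(npy_path, segmentation)

    # Text file: one integer per line (a digit plus a line ending per voxel).
    np.savetxt(txt_path, segmentation.ravel(), fmt="%d")

    npy_size = os.path.getsize(npy_path)
    txt_size = os.path.getsize(txt_path)

print(f"npy: {npy_size} bytes, text: {txt_size} bytes")
```

For very small arrays the fixed .npy header can outweigh the savings, so the comparison favors .npy mainly as the voxel count grows.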

The .npy segmentation file format is an alternative to the .spn

format. The .npy format can be advantageous because it can be generated easily

from Python. This approach can be useful because Python can be used to

algorithmically create a segmentation and serialize it to a

binary file in .npy format.

We illustrate creating the octahedron segmentation in Python:

"""This module creates a 7x7x7 octahedron segmentation."""

import numpy as np

segmentation = np.array(

[

[ # slice 1

[0, 0, 0, 0, 0, 0, 0], # row 1

[0, 0, 0, 0, 0, 0, 0], # row 2

[0, 0, 0, 0, 0, 0, 0], # row 3

[0, 0, 0, 3, 0, 0, 0], # row 4

[0, 0, 0, 0, 0, 0, 0], # row 5

[0, 0, 0, 0, 0, 0, 0], # row 6

[0, 0, 0, 0, 0, 0, 0], # row 7

],

[ # slice 2

[0, 0, 0, 0, 0, 0, 0], # row 1

[0, 0, 0, 0, 0, 0, 0], # row 2

[0, 0, 0, 3, 0, 0, 0], # row 3

[0, 0, 3, 2, 3, 0, 0], # row 4

[0, 0, 0, 3, 0, 0, 0], # row 5

[0, 0, 0, 0, 0, 0, 0], # row 6

[0, 0, 0, 0, 0, 0, 0], # row 7

],

[ # slice 3

[0, 0, 0, 0, 0, 0, 0], # row 1

[0, 0, 0, 3, 0, 0, 0], # row 2

[0, 0, 3, 2, 3, 0, 0], # row 3

[0, 3, 2, 1, 2, 3, 0], # row 4

[0, 0, 3, 2, 3, 0, 0], # row 5

[0, 0, 0, 3, 0, 0, 0], # row 6

[0, 0, 0, 0, 0, 0, 0], # row 7

],

[ # slice 4

[0, 0, 0, 3, 0, 0, 0], # row 1

[0, 0, 3, 2, 3, 0, 0], # row 2

[0, 3, 2, 1, 2, 3, 0], # row 3

[3, 2, 1, 1, 1, 2, 3], # row 4

[0, 3, 2, 1, 2, 3, 0], # row 5

[0, 0, 3, 2, 3, 0, 0], # row 6

[0, 0, 0, 3, 0, 0, 0], # row 7

],

[ # slice 5

[0, 0, 0, 0, 0, 0, 0], # row 1

[0, 0, 0, 3, 0, 0, 0], # row 2

[0, 0, 3, 2, 3, 0, 0], # row 3

[0, 3, 2, 1, 2, 3, 0], # row 4

[0, 0, 3, 2, 3, 0, 0], # row 5

[0, 0, 0, 3, 0, 0, 0], # row 6

[0, 0, 0, 0, 0, 0, 0], # row 7

],

[ # slice 6

[0, 0, 0, 0, 0, 0, 0], # row 1

[0, 0, 0, 0, 0, 0, 0], # row 2

[0, 0, 0, 3, 0, 0, 0], # row 3

[0, 0, 3, 2, 3, 0, 0], # row 4

[0, 0, 0, 3, 0, 0, 0], # row 5

[0, 0, 0, 0, 0, 0, 0], # row 6

[0, 0, 0, 0, 0, 0, 0], # row 7

],

[ # slice 7

[0, 0, 0, 0, 0, 0, 0], # row 1

[0, 0, 0, 0, 0, 0, 0], # row 2

[0, 0, 0, 0, 0, 0, 0], # row 3

[0, 0, 0, 3, 0, 0, 0], # row 4

[0, 0, 0, 0, 0, 0, 0], # row 5

[0, 0, 0, 0, 0, 0, 0], # row 6

[0, 0, 0, 0, 0, 0, 0], # row 7

],

],

dtype=np.uint8,

)

FILE_NAME = "octahedron.npy"

np.save(FILE_NAME, segmentation)

print(f"Saved {FILE_NAME} with shape {segmentation.shape}.")
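The hand-typed array above can also be generated algorithmically. The sketch below assumes that the layers follow the Manhattan distance from the center voxel, which is consistent with the slices listed above; the variable names are illustrative.

```python
import numpy as np

# Absolute voxel index offsets from the center of a 7 x 7 x 7 grid.
offsets = np.abs(np.arange(7) - 3)
dz, dy, dx = np.meshgrid(offsets, offsets, offsets, indexing="ij")
distance = dx + dy + dz  # Manhattan distance from the center voxel

# Label by distance: inner domain (1), intermediate layer (2), outer layer (3).
generated = np.zeros((7, 7, 7), dtype=np.uint8)
generated[distance <= 1] = 1
generated[distance == 2] = 2
generated[distance == 3] = 3

print(generated[3, 3].tolist())  # [3, 2, 1, 1, 1, 2, 3], slice 4, row 4
print(int(np.count_nonzero(generated)))  # 63 non-void voxels
```

If the hand-typed array is available as segmentation, np.array_equal(generated, segmentation) can be used to confirm that the two agree.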

The convert Command

automesh allows for interoperability between the .spn and .npy file types.

Use the automesh help to discover the command syntax:

automesh convert --help

Converts between mesh or segmentation file types

Usage: automesh convert <COMMAND>

Commands:

mesh Converts mesh file types (exo | inp | stl) -> (exo | mesh | stl | vtk)

segmentation Converts segmentation file types (npy | spn) -> (npy | spn)

help Print this message or the help of the given subcommand(s)

Options:

-h, --help Print help

For example, to convert the octahedron.npy to octahedron2.spn:

automesh convert segmentation -i octahedron.npy -o octahedron2.spn

automesh 0.3.8

Reading octahedron.npy

Done 75.845µs [4 materials, 343 voxels]

Writing octahedron2.spn

Done 45.431µs

Total 446.1µs

To convert from octahedron2.spn to octahedron3.npy:

automesh convert segmentation -i octahedron2.spn -x 7 -y 7 -z 7 -o octahedron3.npy

automesh 0.3.8

Reading octahedron2.spn

Done 36.585µs [4 materials, 343 voxels]

Writing octahedron3.npy

Done 32.926µs

Total 345.014µs

Remark: Notice that the .spn requires the number of voxels in each of the x, y, and z dimensions to be specified using the --nelx, --nely, and --nelz (or, equivalently, -x, -y, and -z) flags.

We can verify the two .npy files encode the same segmentation:

"""The purpose of this module is to show that the

segmentation data encoded in two .npy files is the same.

"""

import numpy as np

aa = np.load("octahedron.npy")

print(aa)

bb = np.load("octahedron3.npy")

print(bb)

comparison = aa == bb

print(comparison)

result = np.all(comparison)

print(f"Element-by-element equality is {result}.")

Mesh Generation

automesh creates several finite element mesh file types from

a segmentation.

Use the automesh help to discover the command syntax:

automesh mesh --help

Creates a finite element mesh from a tessellation or segmentation

Usage: automesh mesh <COMMAND>

Commands:

hex Creates an all-hexahedral mesh from a tessellation or segmentation

tet Creates an all-tetrahedral mesh from a tessellation or segmentation

tri Creates all-triangular isosurface(s) from a tessellation or segmentation

help Print this message or the help of the given subcommand(s)

Options:

-h, --help Print help

To convert the octahedron.npy into an ABAQUS finite element mesh, while removing

segmentation 0 from the mesh:

automesh mesh hex -r 0 -i octahedron.npy -o octahedron.inp

automesh 0.3.8

Reading octahedron.npy

Done 62.609µs [4 materials, 343 voxels]

Meshing voxels into hexahedra

Done 272.245µs [3 blocks, 63 elements, 160 nodes]

Writing octahedron.inp

Done 194.175µs

Total 842.835µs
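The block, element, and node counts reported above can be cross-checked independently. The sketch below rebuilds the octahedron from Manhattan distance (an assumption consistent with the slices shown earlier) and counts one hexahedron per non-void voxel and one node per distinct voxel corner. It is a counting exercise only, not a description of how automesh builds the mesh.

```python
import numpy as np

# Rebuild the 7 x 7 x 7 octahedron segmentation from Manhattan distance.
offsets = np.abs(np.arange(7) - 3)
dz, dy, dx = np.meshgrid(offsets, offsets, offsets, indexing="ij")
distance = dx + dy + dz
segmentation = np.zeros((7, 7, 7), dtype=np.uint8)
segmentation[distance <= 1] = 1
segmentation[distance == 2] = 2
segmentation[distance == 3] = 3

# One hexahedron per voxel that is not material 0.
voxels = np.argwhere(segmentation != 0)
num_elements = len(voxels)

# Each voxel (z, y, x) touches the eight lattice points at its corners;
# nodes shared by neighboring voxels are counted once.
corners = [(a, b, c) for a in (0, 1) for b in (0, 1) for c in (0, 1)]
nodes = {
    (int(z) + a, int(y) + b, int(x) + c)
    for z, y, x in voxels
    for a, b, c in corners
}

# One block per remaining material.
num_blocks = len(np.unique(segmentation[segmentation != 0]))

print(num_blocks, num_elements, len(nodes))  # 3 63 160
```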

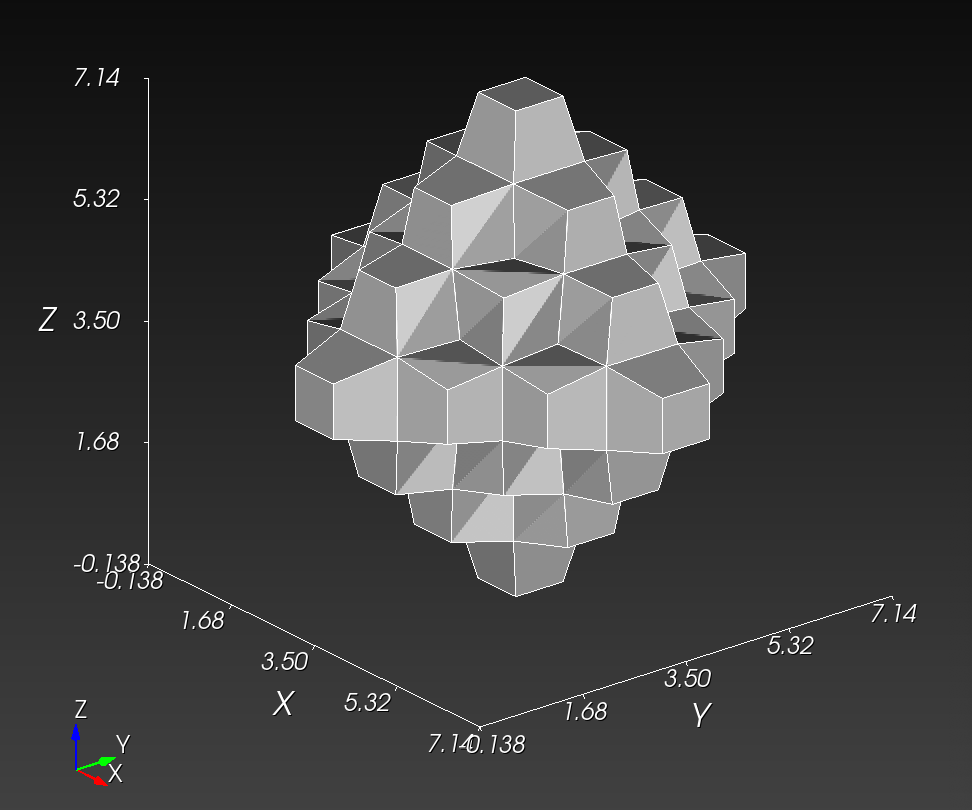

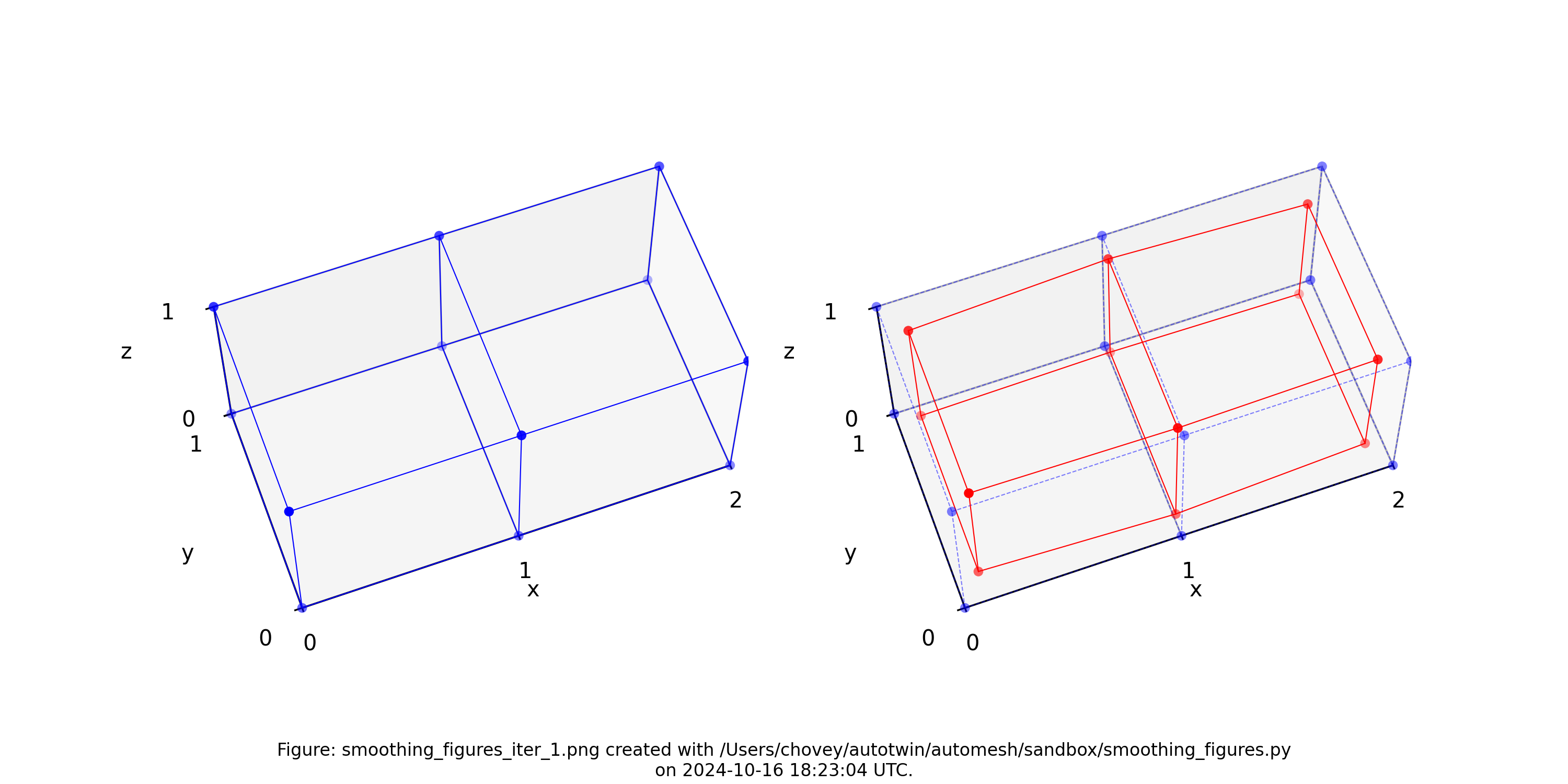

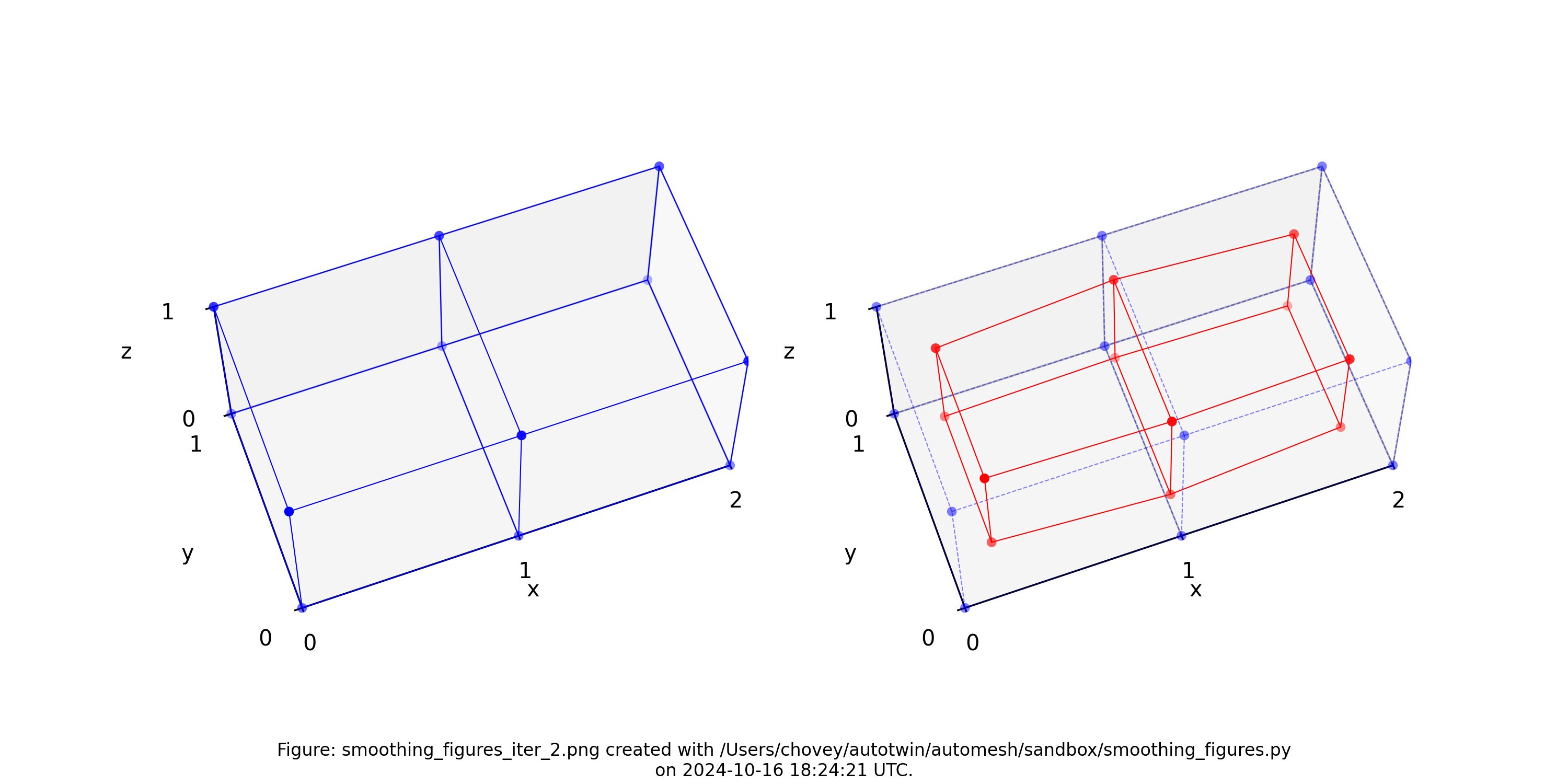

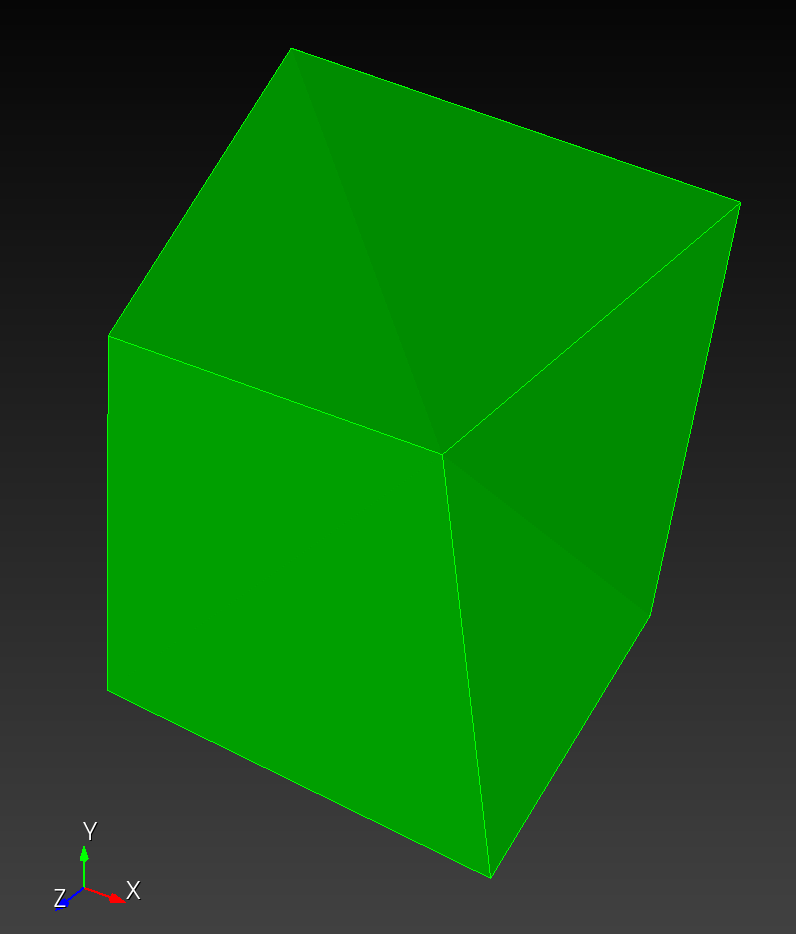

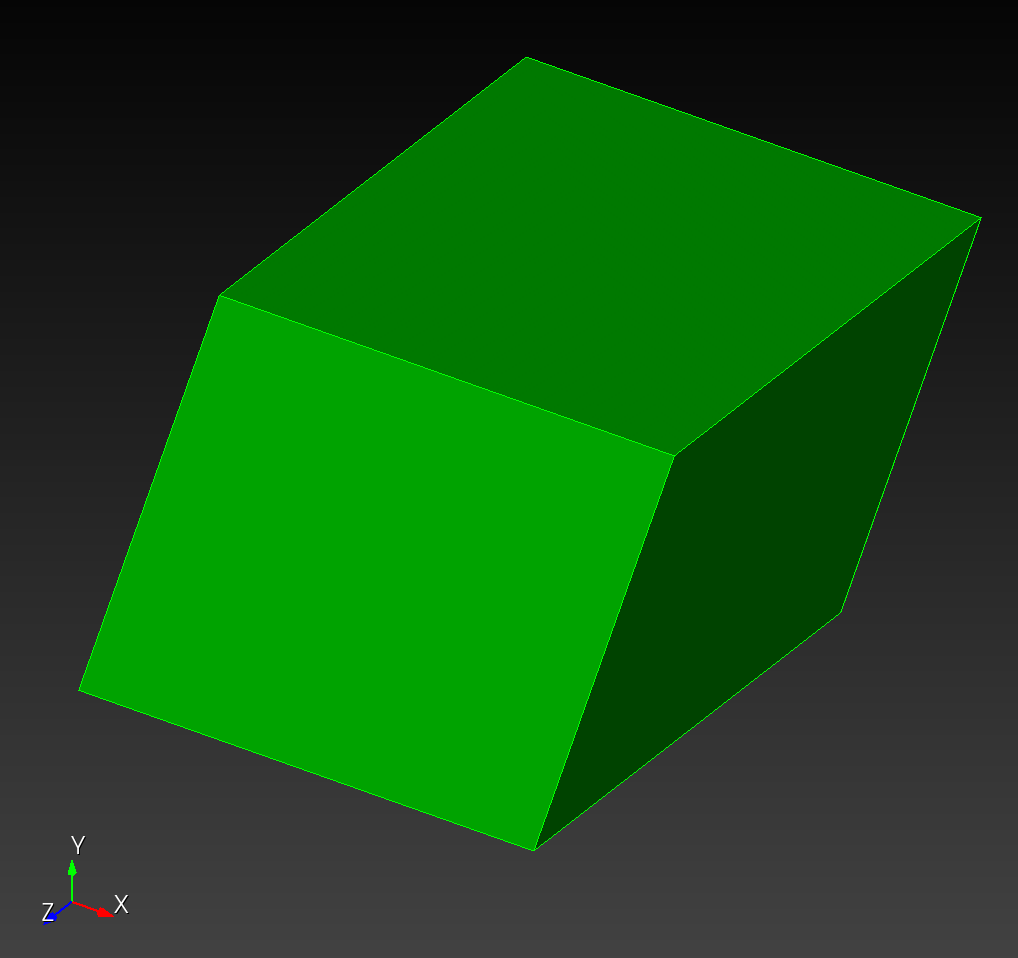

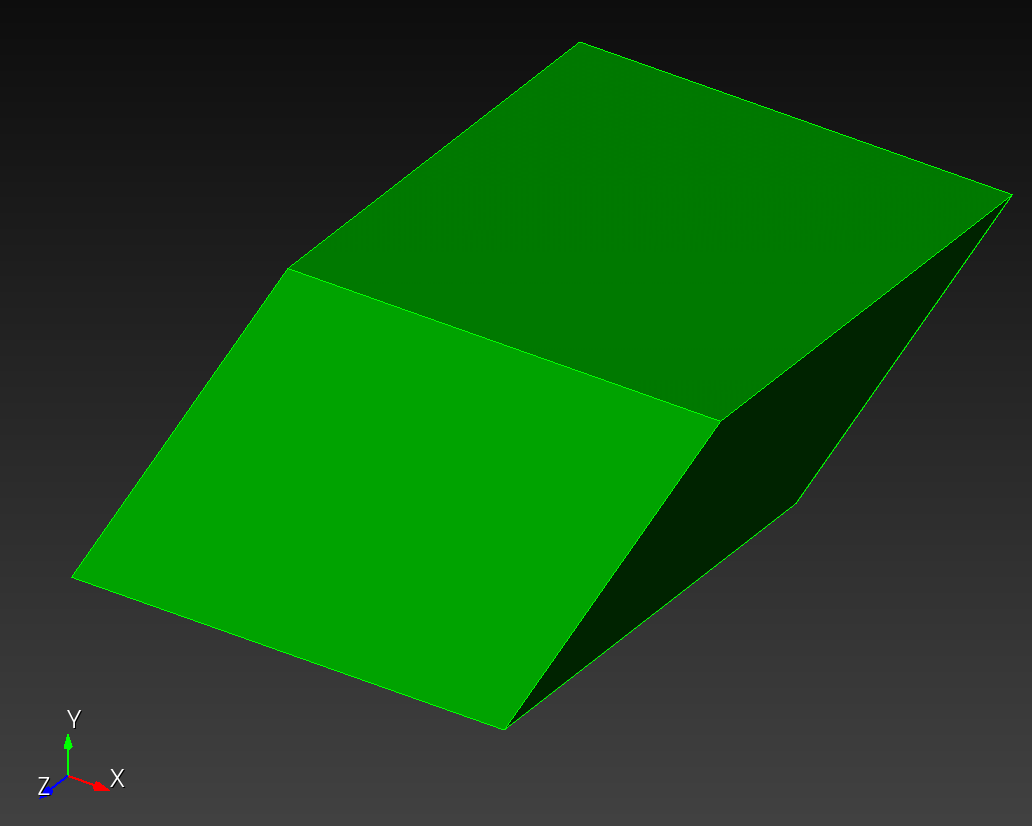

Smoothing

Use the automesh help to discover the command syntax:

automesh smooth --help

Applies smoothing to an existing mesh

Usage: automesh smooth <COMMAND>

Commands:

hex Smooths an all-hexahedral mesh

tet Smooths an all-tetrahedral mesh

tri Smooths an all-triangular mesh

help Print this message or the help of the given subcommand(s)

Options:

-h, --help Print help

To smooth the octahedron.inp mesh with Taubin smoothing parameters for five

iterations:

automesh smooth hex -n 5 -i octahedron.inp -o octahedron_s05.inp

automesh 0.3.8

Reading octahedron.inp

Done 133.775µs

Smoothing with 5 iterations of Taubin

Done 63.685µs

Writing octahedron_s05.inp

Done 153.503µs

Total 631.939µs
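To give a feel for what Taubin smoothing does, the sketch below applies it to a jagged closed polyline in 2D rather than to a hexahedral mesh. Each iteration alternates a shrinking Laplacian step (positive factor) with an inflating step (negative factor of slightly larger magnitude). The function names and the factor values are illustrative assumptions and are not claimed to match the automesh implementation or its defaults.

```python
import numpy as np


def laplacian(points):
    """Offset from each point of a closed polyline to the midpoint of its two neighbors."""
    neighbors = 0.5 * (np.roll(points, 1, axis=0) + np.roll(points, -1, axis=0))
    return neighbors - points


def taubin_smooth(points, iterations, lam=0.6307, mu=-0.6732):
    """Alternate a shrinking step (lam > 0) with an inflating step (mu < 0)."""
    smoothed = points.copy()
    for _ in range(iterations):
        smoothed = smoothed + lam * laplacian(smoothed)
        smoothed = smoothed + mu * laplacian(smoothed)
    return smoothed


# A unit circle with a zigzag perturbation of the radius (jagged, like voxel steps).
count = 40
angles = 2.0 * np.pi * np.arange(count) / count
radii = 1.0 + 0.1 * (-1.0) ** np.arange(count)
jagged = np.column_stack((radii * np.cos(angles), radii * np.sin(angles)))

smooth = taubin_smooth(jagged, iterations=5)

# The standard deviation of the radius measures how jagged the outline is.
roughness_before = np.std(np.linalg.norm(jagged, axis=1))
roughness_after = np.std(np.linalg.norm(smooth, axis=1))
mean_radius_after = np.mean(np.linalg.norm(smooth, axis=1))
print(f"radius std before: {roughness_before:.4f}, after: {roughness_after:.4f}")
print(f"mean radius after: {mean_radius_after:.4f}")
```

The zigzag is strongly damped while the mean radius stays close to one, which is the motivation for pairing the two steps: plain Laplacian smoothing alone would also shrink the shape.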

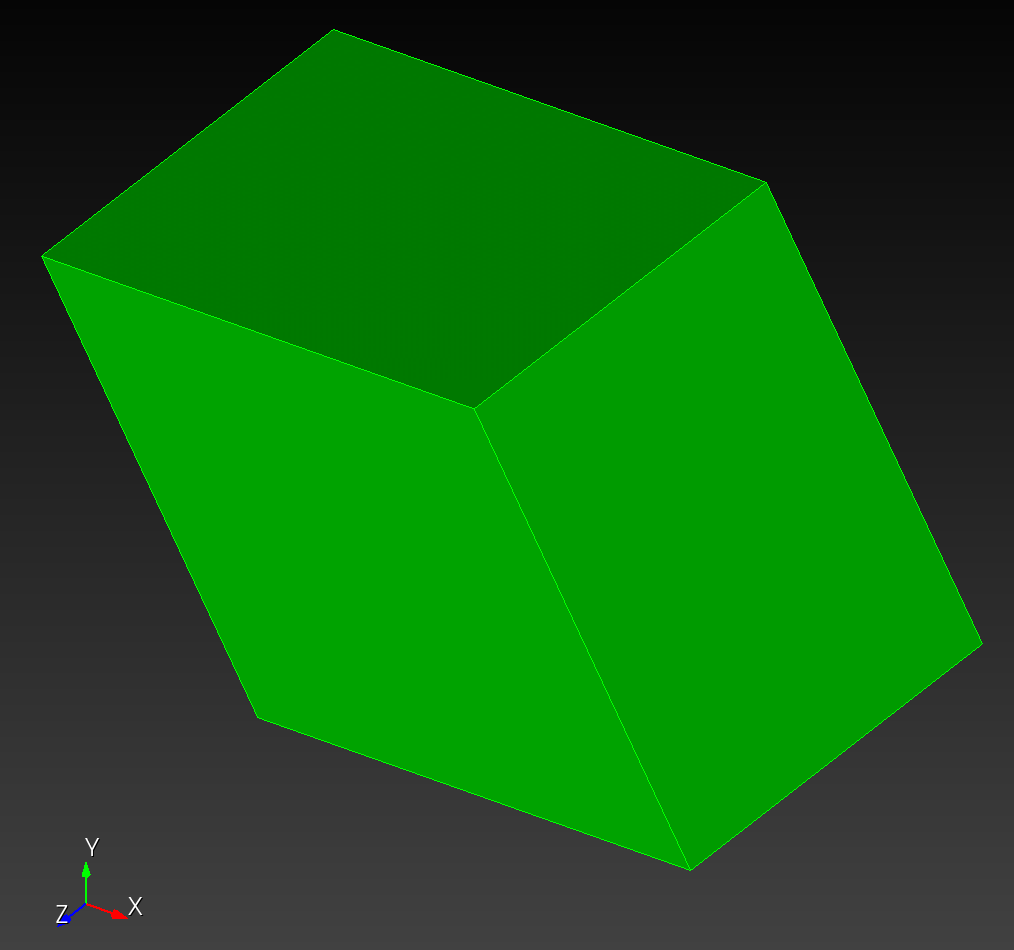

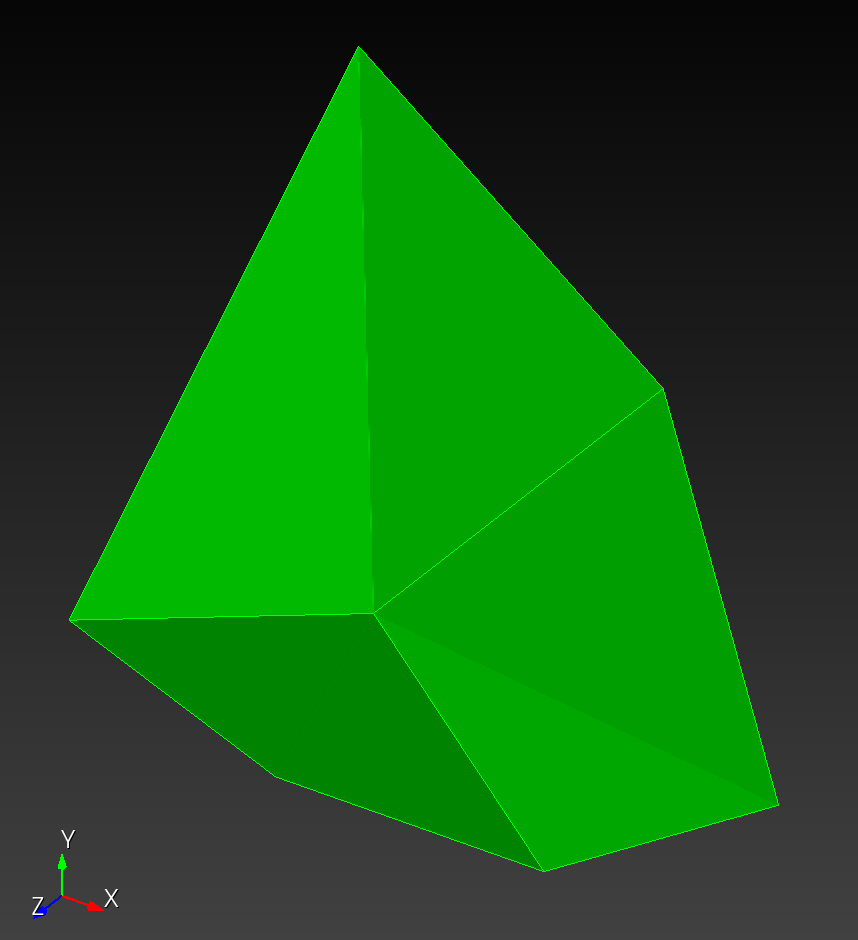

The original voxel mesh and the smoothed voxel mesh are shown below:

| octahedron.inp | octahedron_s05.inp |
|---|---|

See the Smoothing section for more information.

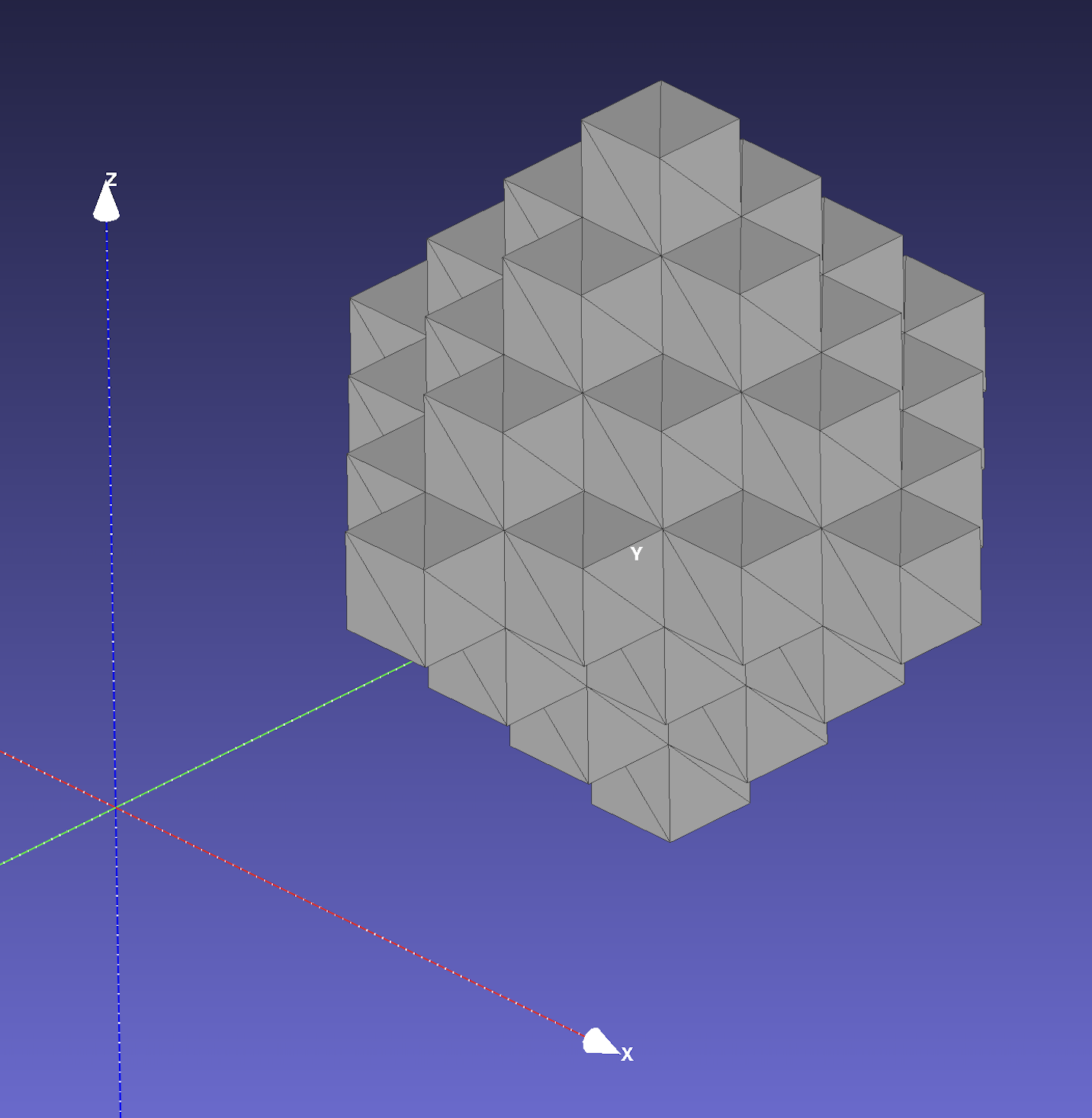

Isosurface

An isosurface can be generated from a segmentation using the tri command.

To create a mesh of the outer isosurfaces contained in the octahedron example:

automesh mesh tri -r 0 1 2 -i octahedron.npy -o octahedron.stl

automesh 0.3.8

Reading octahedron.npy

Done 62.328µs [4 materials, 343 voxels]

Meshing internal surfaces

Done 557.514µs [38 blocks, 456 elements, 808 nodes]

Writing octahedron.stl

Done 70.314µs

Total 985.194µs

The surfaces are visualized below:

| octahedron.stl in MeshLab | octahedron.stl in Cubit with cut plane |
|---|---|

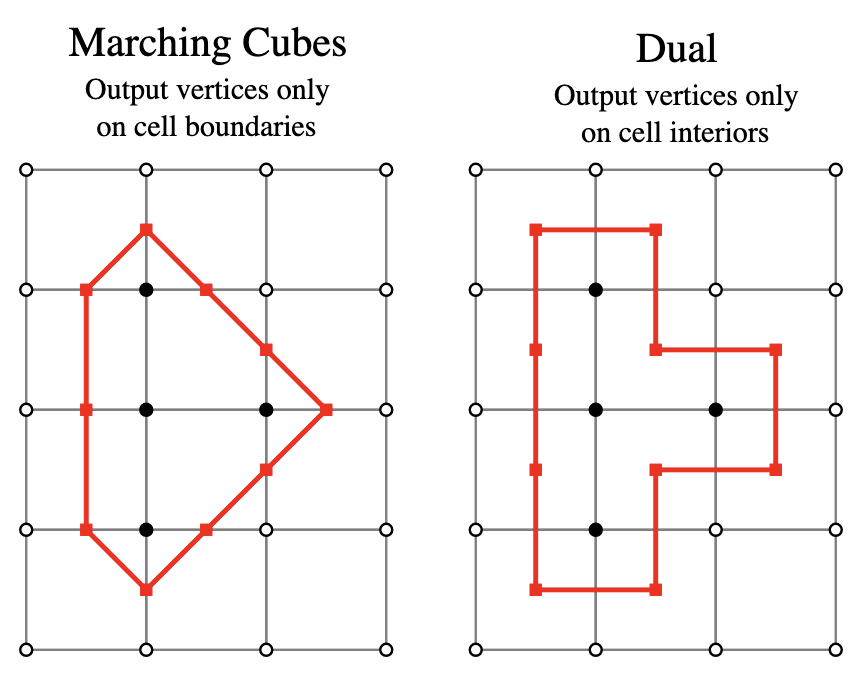

automesh creates an isosurface from the boundary faces of voxels. The

quadrilateral faces are divided into two triangles. The Isosurface section contains more details about alternative methods used to create an isosurface.
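The face-splitting idea can be illustrated by counting. The sketch below rebuilds the outer layer (material 3) of the octahedron from Manhattan distance, counts the voxel faces that do not touch another material 3 voxel, and doubles that count to obtain triangles. It is a counting sketch under those assumptions, not the automesh implementation.

```python
import numpy as np

# Rebuild the outer layer (material 3) of the 7 x 7 x 7 octahedron.
offsets = np.abs(np.arange(7) - 3)
dz, dy, dx = np.meshgrid(offsets, offsets, offsets, indexing="ij")
outer_layer = (dx + dy + dz) == 3  # True where the voxel is material 3

# Pad with empty voxels so that every face has a neighbor to compare against.
padded = np.pad(outer_layer, 1, constant_values=False)

# A face is on the boundary when a kept voxel meets a voxel that is not kept.
boundary_faces = 0
for axis in range(3):
    for shift in (-1, 1):
        neighbor = np.roll(padded, shift, axis=axis)
        boundary_faces += int(np.count_nonzero(padded & ~neighbor))

# Each quadrilateral face is split into two triangles.
triangles = 2 * boundary_faces
print(boundary_faces, triangles)  # 228 456
```

The triangle count agrees with the 456 elements reported for octahedron.stl above.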

The Sphere with Shells section contains more examples of the command line interface.

Examples

Following are examples created with automesh.

Unit Tests

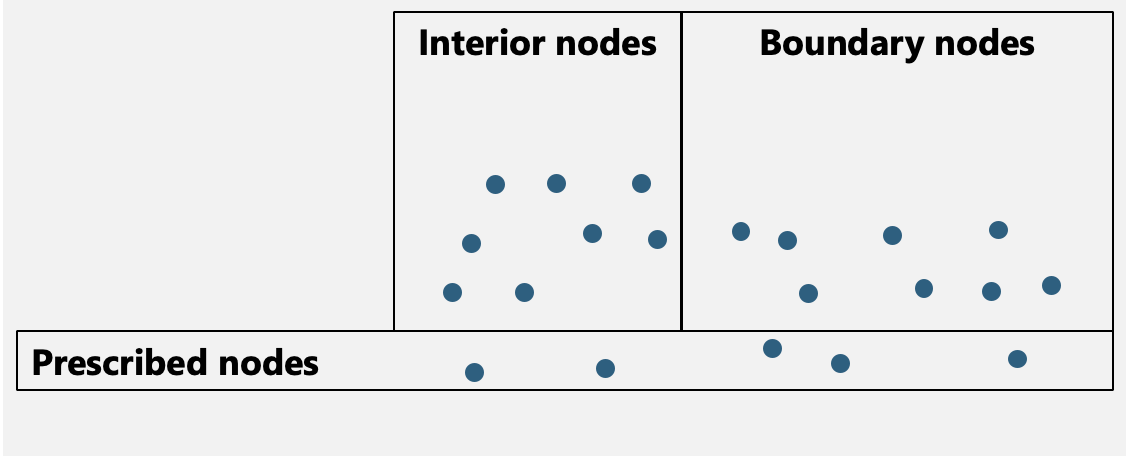

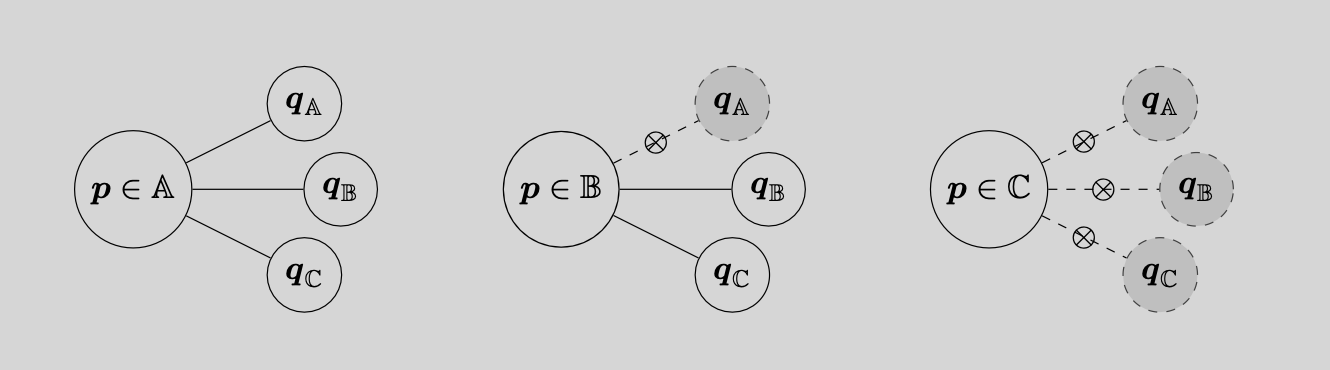

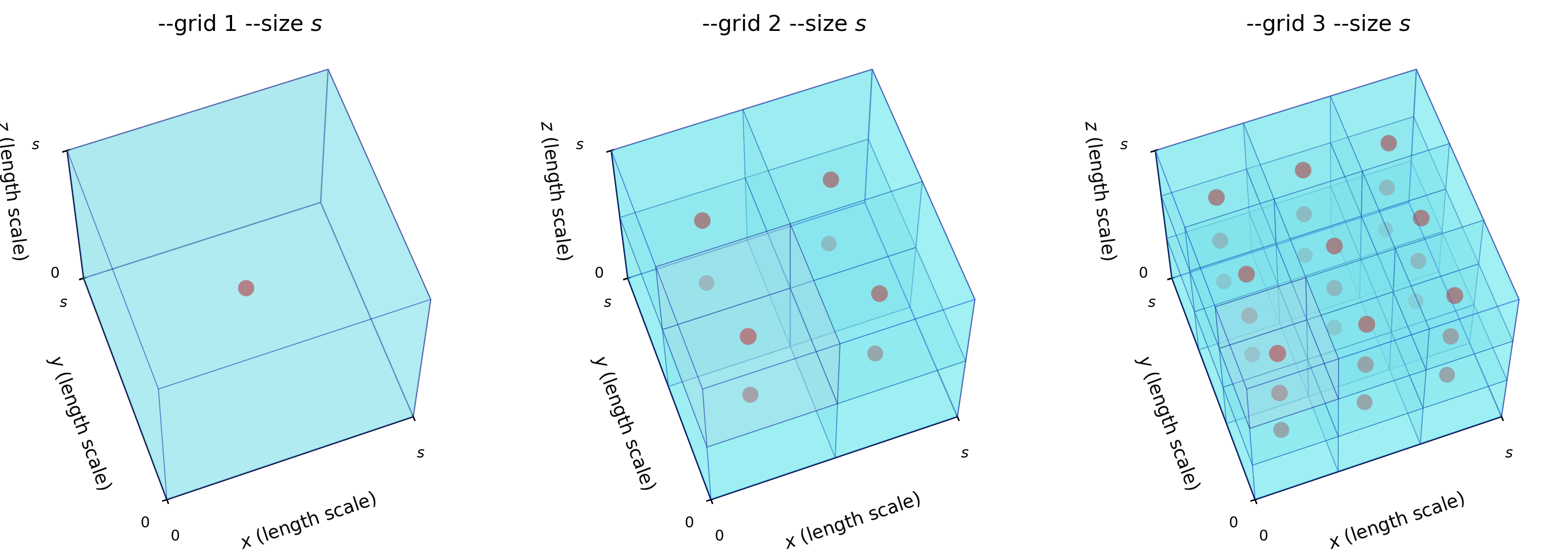

The following illustrates a subset of the unit tests used to validate the code implementation. For a complete listing of the unit tests, see voxels.rs and voxel.py.

The Python code used to generate the figures is included below.

Remark: We use the convention np when importing numpy as follows:

import numpy as np

Single

The minimum working example (MWE) is a single voxel, used to create a mesh of one block containing a single element. The NumPy input single.npy contains the following segmentation:

segmentation = np.array(

[

[

[ 11, ],

],

],

dtype=np.uint8,

)

where the segmentation 11 denotes block 11 in the finite element mesh.

Remark: Serialization (write and read)

| Write | Read |
|---|---|
| Use the np.save command to serialize the segmentation to a .npy file | Use the np.load command to deserialize the segmentation from a .npy file |
| Example: Write the data in segmentation to a file called seg.npy: `np.save("seg.npy", segmentation)` | Example: Read the data from the file seg.npy to a variable called loaded_array: `loaded_array = np.load("seg.npy")` |

Equivalently, the single.spn contains a single integer:

11 # x:1 y:1 z:1

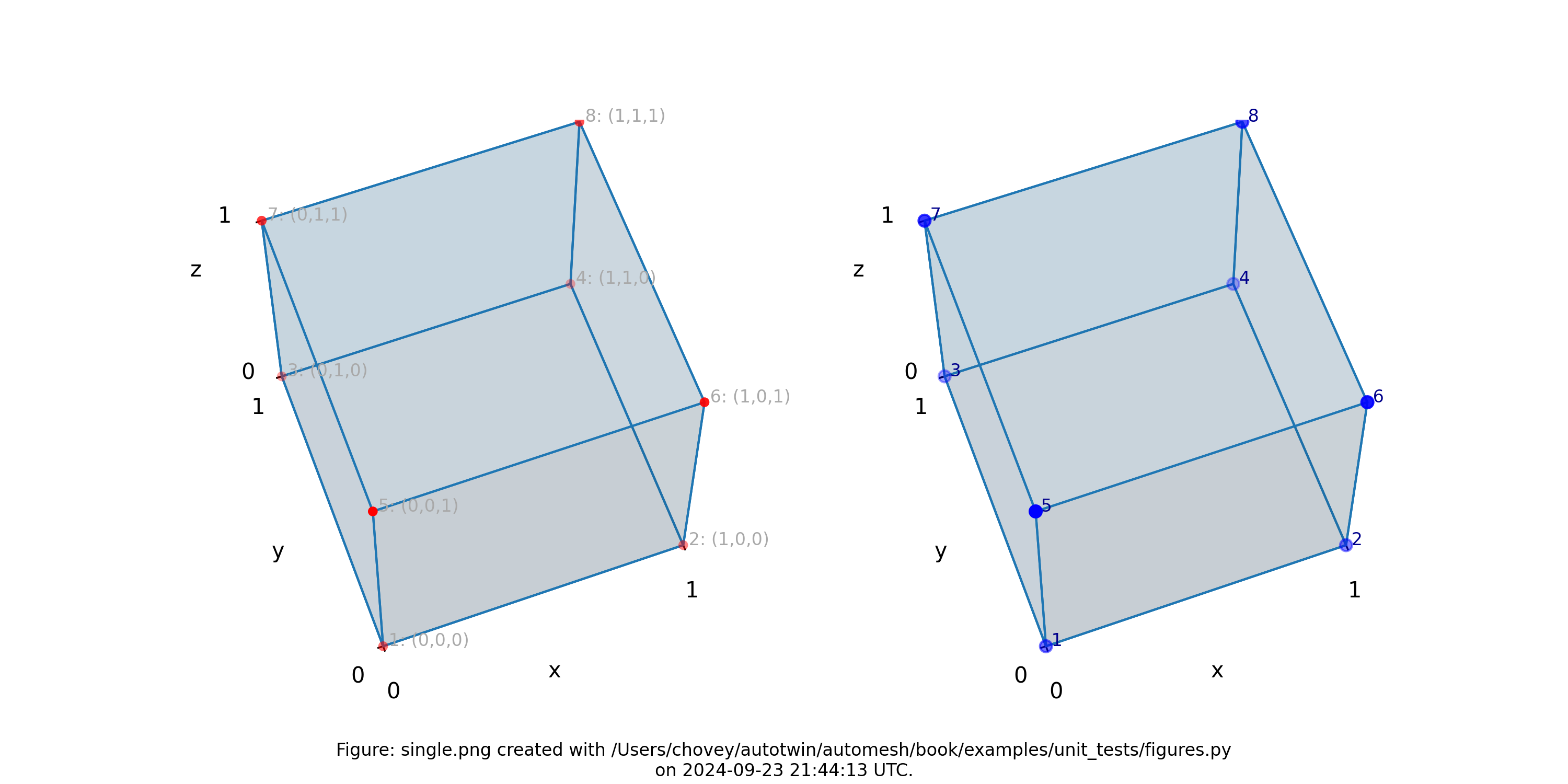

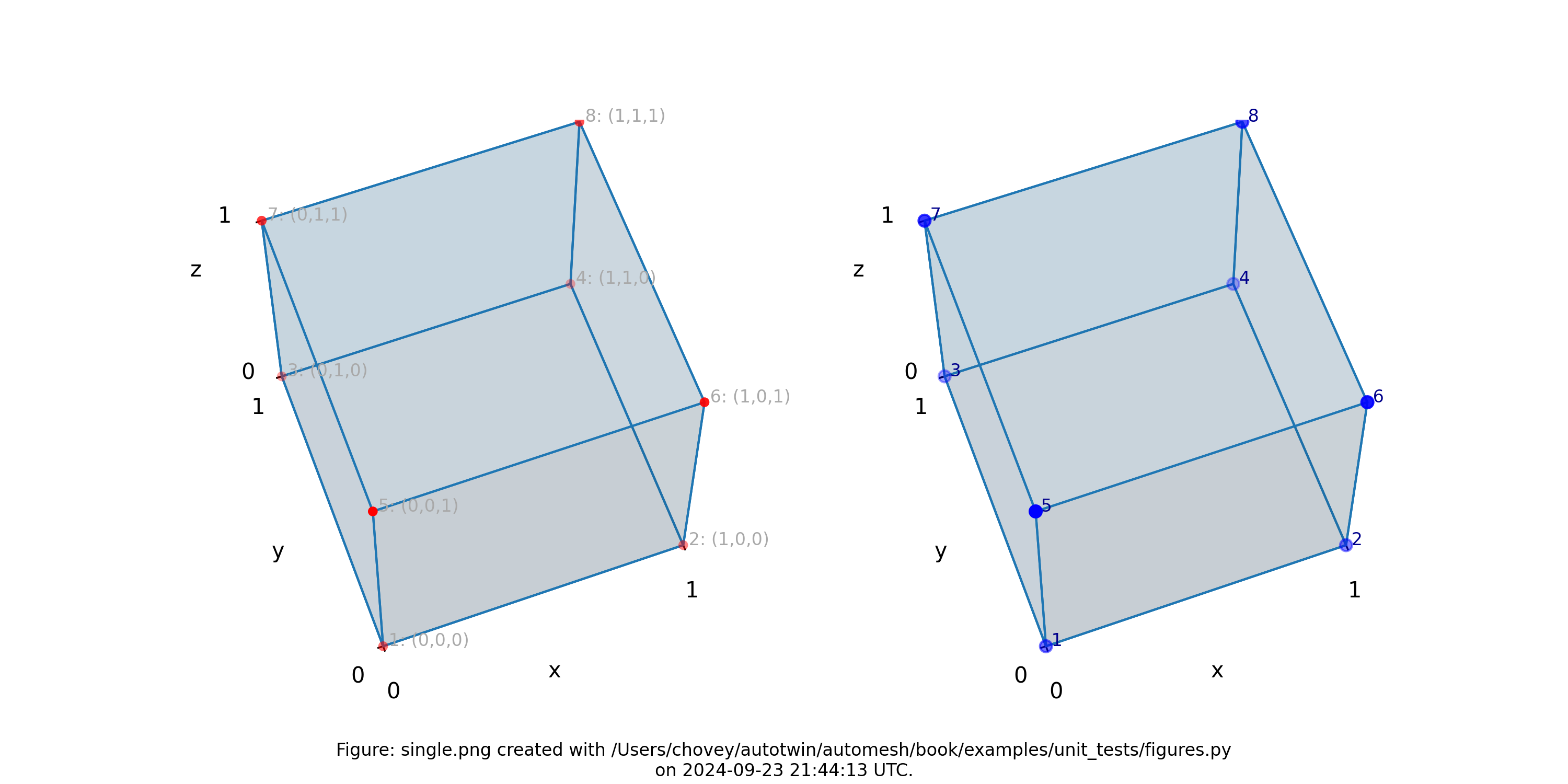

The resulting finite element mesh is shown in the following figure:

Figure: The single.png visualization, (left) lattice node numbers, (right)

mesh node numbers. Lattice node numbers appear in gray, with (x, y, z)

indices in parentheses. The right-hand rule is used. Lattice coordinates

start at (0, 0, 0), and proceed along the x-axis, then

the y-axis, and then the z-axis.

The finite element mesh local node numbers map identically to the following global node numbers:

[1, 2, 4, 3, 5, 6, 8, 7]

->

[1, 2, 4, 3, 5, 6, 8, 7]

which is a special case not typically observed, as shown in more complex examples below.
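The lattice node numbers of a voxel can be computed from its position. The sketch below assumes, as described in the figure caption, that lattice nodes are numbered from 1 along the x-axis first, then the y-axis, then the z-axis, and it lists the eight nodes in the hexahedral local node order. The function name and its 0-based voxel indices are illustrative assumptions.

```python
def lattice_connectivity(ix, iy, iz, nelx, nely):
    """Return the eight 1-based lattice node numbers of voxel (ix, iy, iz).

    Voxel indices are 0-based. Lattice nodes are numbered along x first,
    then y, then z, and listed in the hexahedral local node order.
    """
    nx, ny = nelx + 1, nely + 1  # lattice points along x and y

    def node(i, j, k):
        return 1 + i + j * nx + k * nx * ny

    return [
        node(ix, iy, iz),
        node(ix + 1, iy, iz),
        node(ix + 1, iy + 1, iz),
        node(ix, iy + 1, iz),
        node(ix, iy, iz + 1),
        node(ix + 1, iy, iz + 1),
        node(ix + 1, iy + 1, iz + 1),
        node(ix, iy + 1, iz + 1),
    ]


# Single voxel: nelx = nely = 1.
single = lattice_connectivity(0, 0, 0, nelx=1, nely=1)
print(single)  # [1, 2, 4, 3, 5, 6, 8, 7]

# First voxel of a two-voxel domain along x: nelx = 2, nely = 1.
double_x_first = lattice_connectivity(0, 0, 0, nelx=2, nely=1)
print(double_x_first)  # [1, 2, 5, 4, 7, 8, 11, 10]
```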

Remark: Input .npy and .spn files for the examples below can be found on the repository at automesh/tests/input.

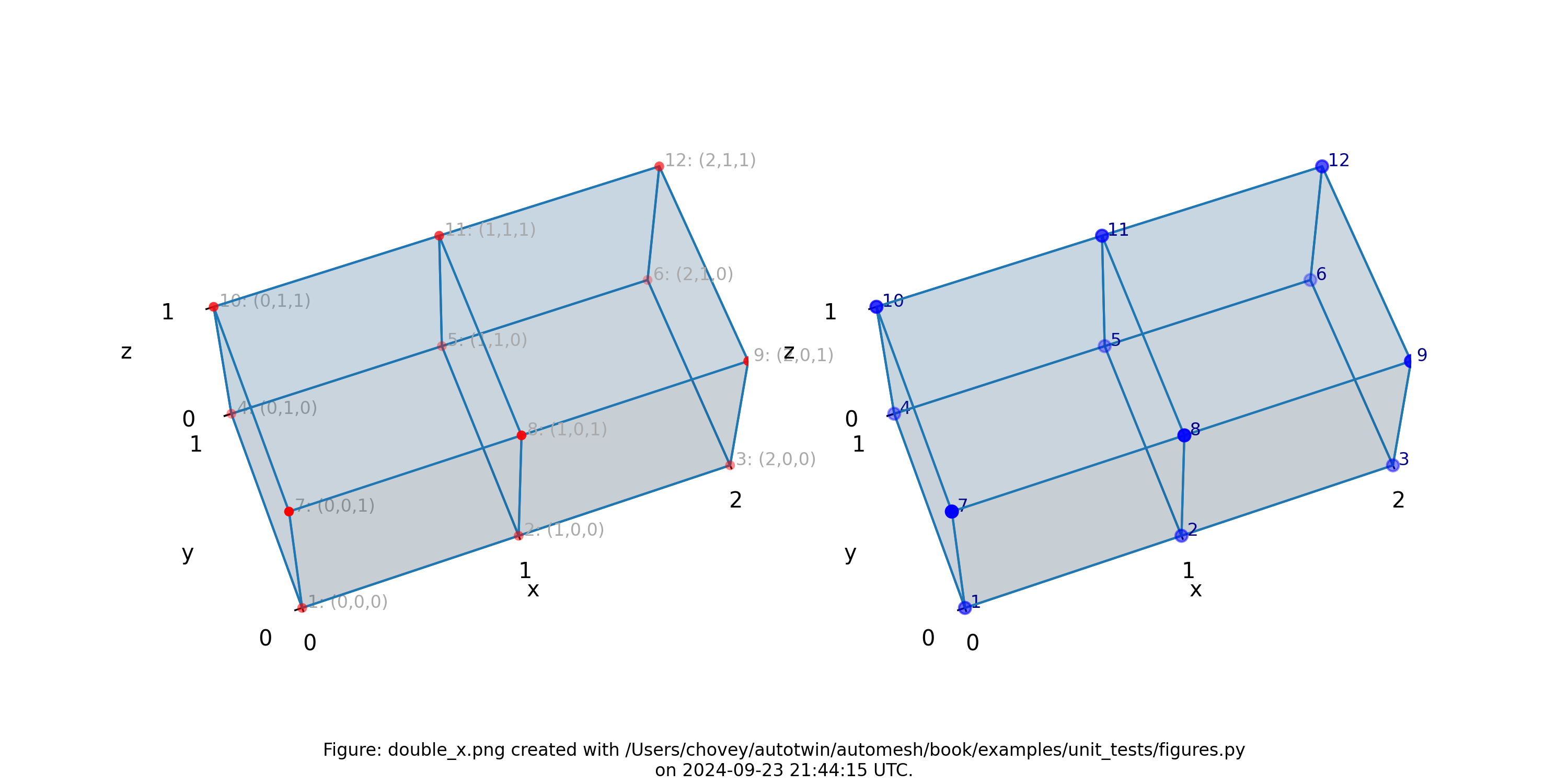

Double

The next example, in order of complexity, is a two-voxel domain, used to create

a single block composed of two finite elements. We test propagation in

both the x and y directions. The figures below show these two

meshes.

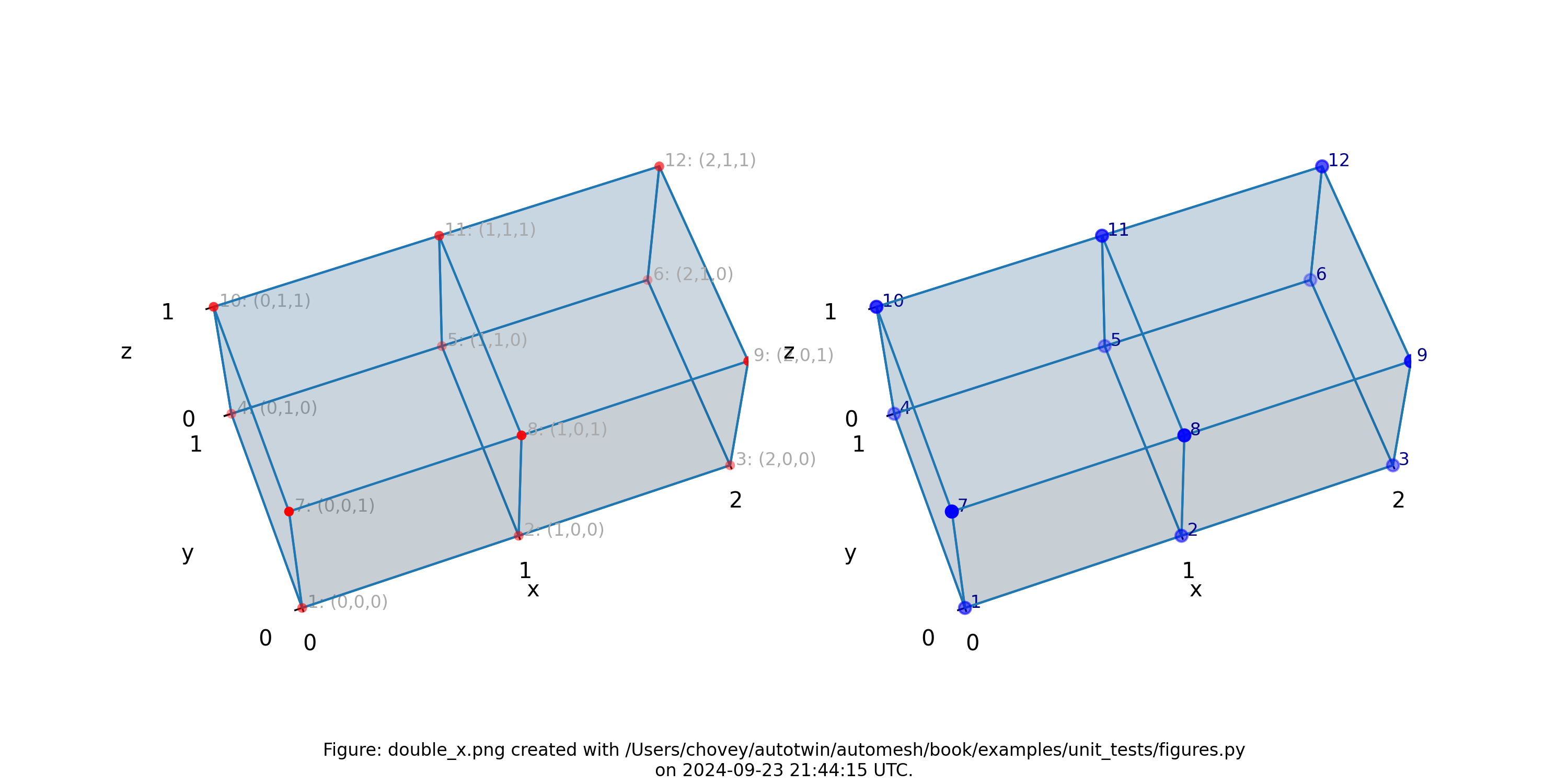

Double X

11 # x:1 y:1 z:1

11 # 2 1 1

where the segmentation 11 denotes block 11 in the finite element mesh.

Figure: Mesh composed of a single block with two elements, propagating along

the x-axis, (left) lattice node numbers, (right) mesh node numbers.

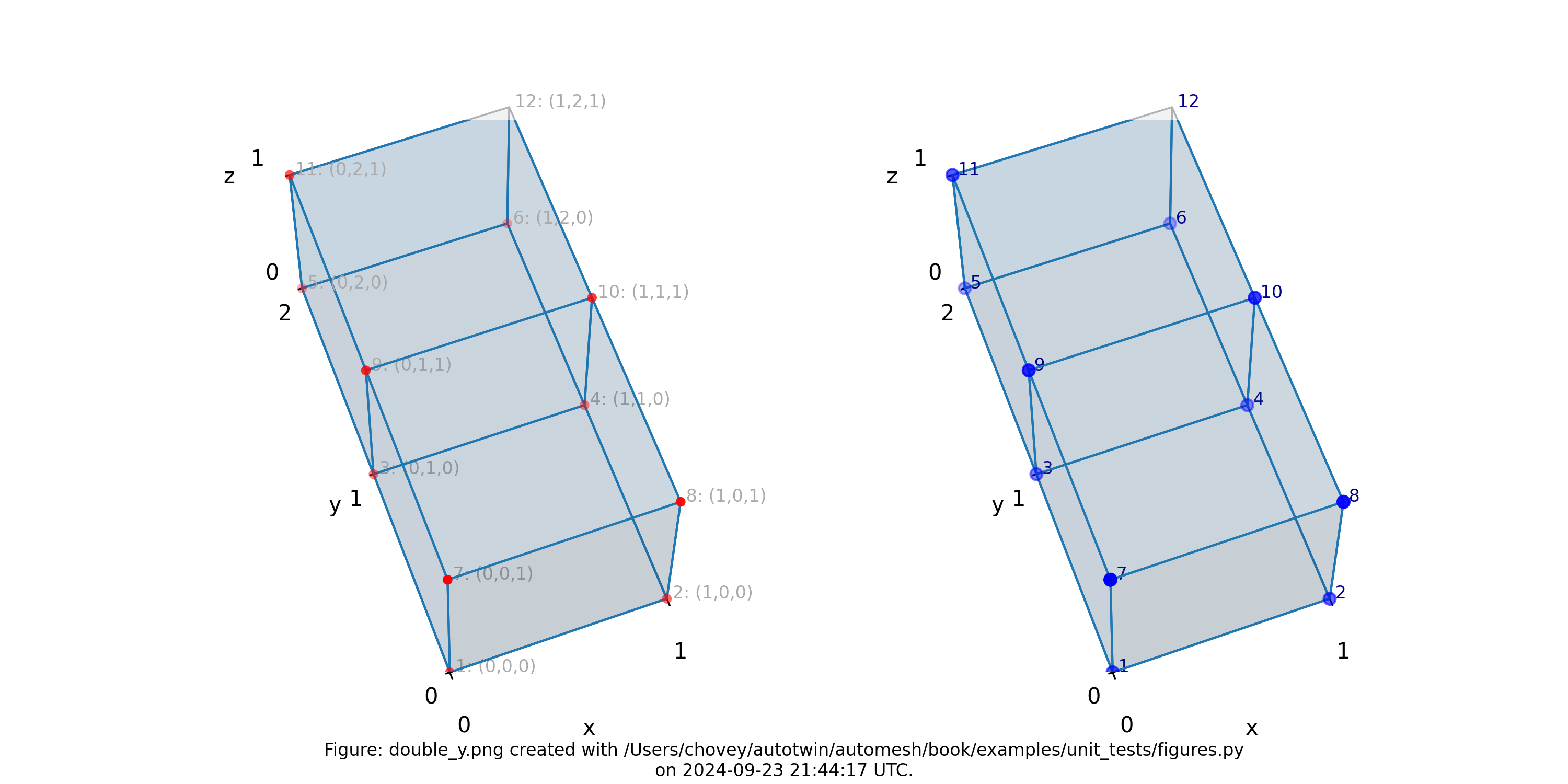

Double Y

11 # x:1 y:1 z:1

11 # 1 2 1

where the segmentation 11 denotes block 11 in the finite element mesh.

Figure: Mesh composed of a single block with two elements, propagating along

the y-axis, (left) lattice node numbers, (right) mesh node numbers.

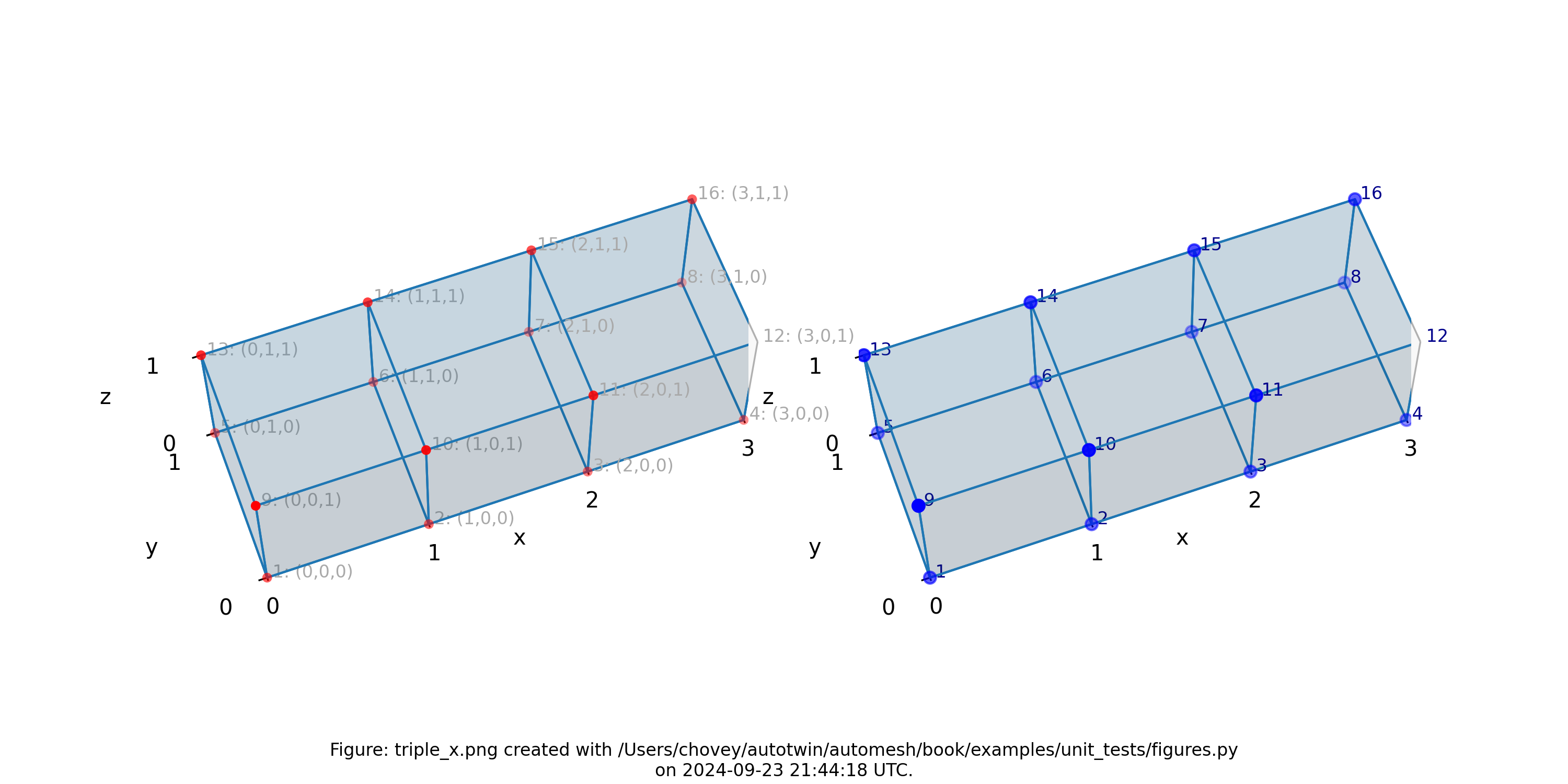

Triple

11 # x:1 y:1 z:1

11 # 2 1 1

11 # 3 1 1

where the segmentation 11 denotes block 11 in the finite element mesh.

Figure: Mesh composed of a single block with three elements, propagating along

the x-axis, (left) lattice node numbers, (right) mesh node numbers.

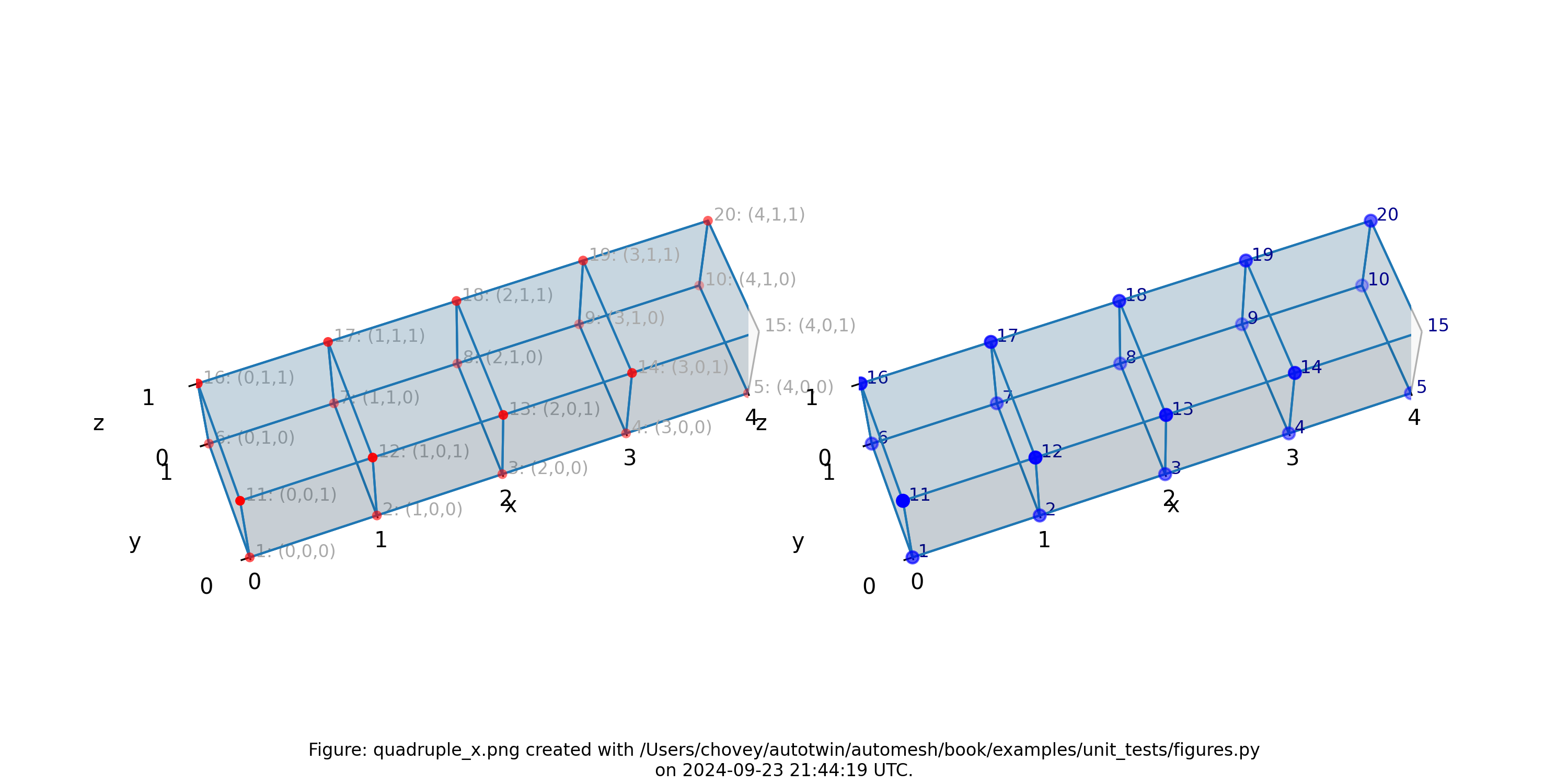

Quadruple

11 # x:1 y:1 z:1

11 # 2 1 1

11 # 3 1 1

11 # 4 1 1

where the segmentation 11 denotes block 11 in the finite element mesh.

Figure: Mesh composed of a single block with four elements, propagating along

the x-axis, (left) lattice node numbers, (right) mesh node numbers.

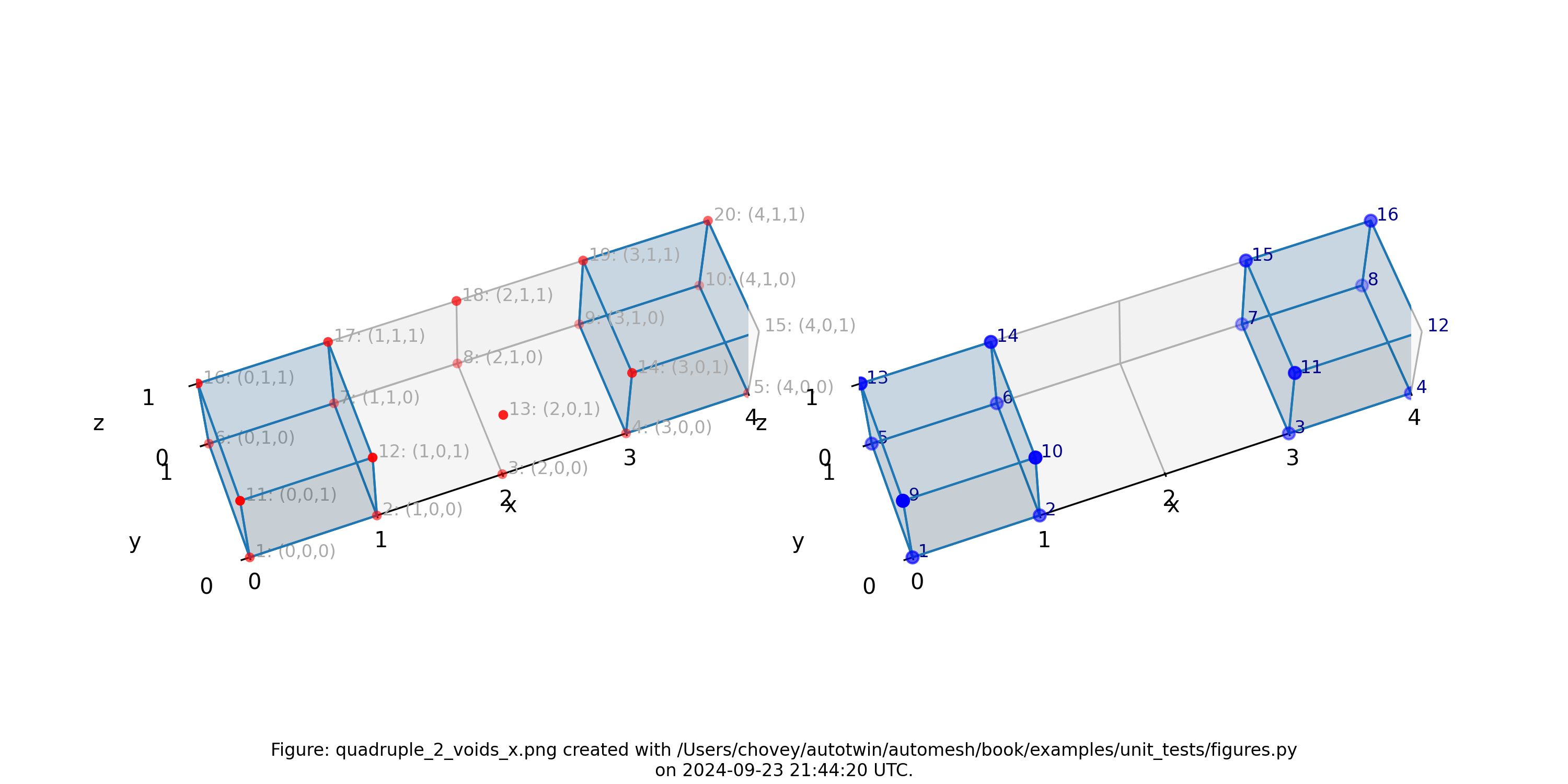

Quadruple with Voids

99 # x:1 y:1 z:1

0 # 2 1 1

0 # 3 1 1

99 # 4 1 1

where the segmentation 99 denotes block 99 in the finite element mesh, and segmentation 0 is excluded from the mesh.

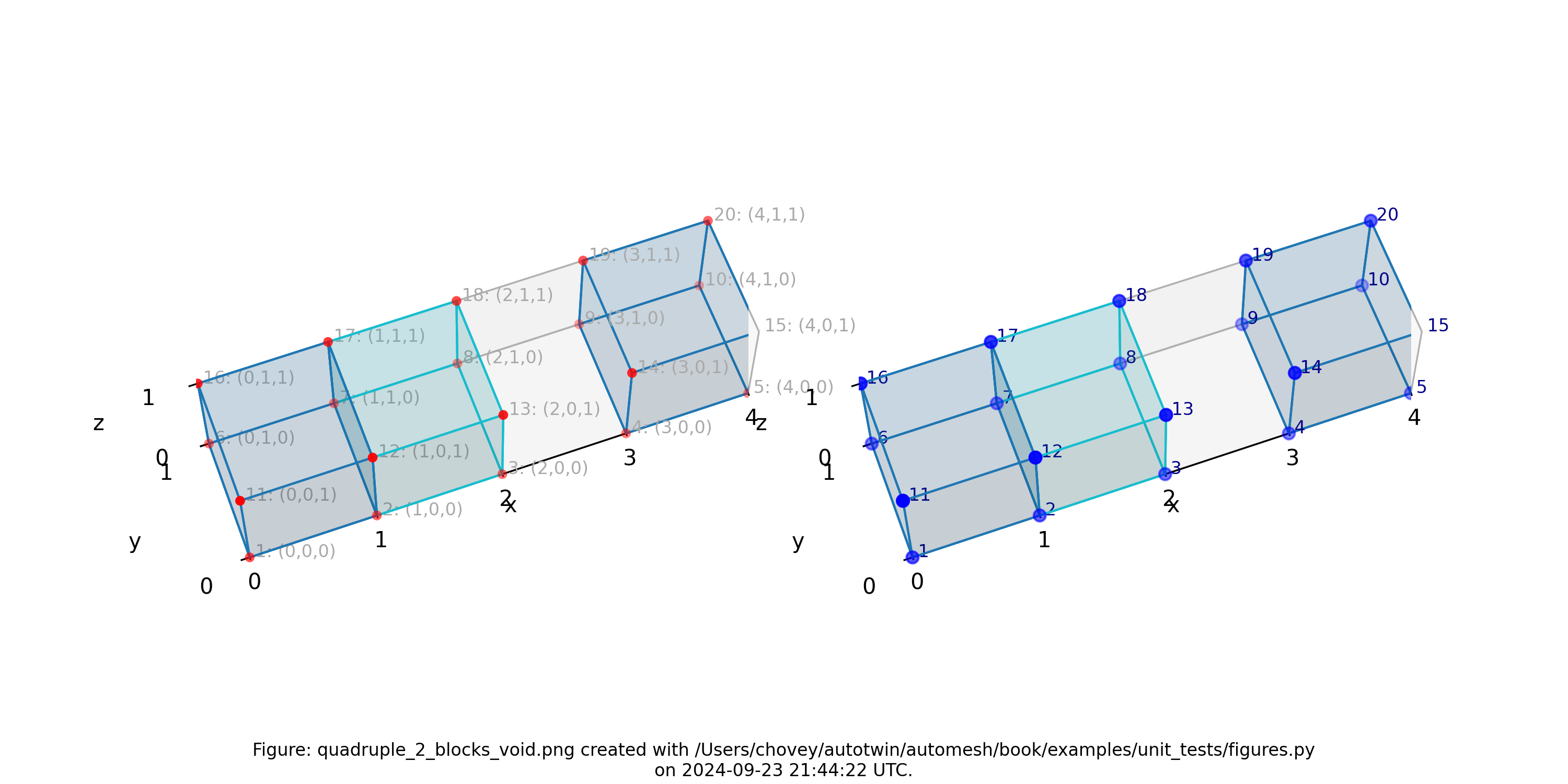

Figure: Mesh composed of a single block with two elements, propagating along

the x-axis and two voids, (left) lattice node numbers, (right) mesh node

numbers.
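When voids are present, some lattice nodes are unused, so mesh node numbers no longer coincide with lattice node numbers. The sketch below shows one plausible renumbering, which keeps the used lattice nodes in ascending order and numbers them consecutively from 1. The lattice connectivity assumes x-then-y-then-z lattice numbering for the (4 x 1 x 1) domain; the scheme is an illustrative assumption, and the figure remains the reference for the numbering automesh produces.

```python
# Lattice connectivity of the two retained voxels (x = 1 and x = 4) in a
# 4 x 1 x 1 domain whose lattice has 5 x 2 x 2 = 20 points.
lattice_elements = [
    [1, 2, 7, 6, 11, 12, 17, 16],
    [4, 5, 10, 9, 14, 15, 20, 19],
]

# Keep only the lattice nodes that some element uses, in ascending order.
used = sorted({n for element in lattice_elements for n in element})

# Map each used lattice node to a consecutive mesh node number starting at 1.
renumber = {lattice: mesh for mesh, lattice in enumerate(used, start=1)}
mesh_elements = [[renumber[n] for n in element] for element in lattice_elements]

print(len(used))  # 16
print(mesh_elements)
# [[1, 2, 6, 5, 9, 10, 14, 13], [3, 4, 8, 7, 11, 12, 16, 15]]
```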

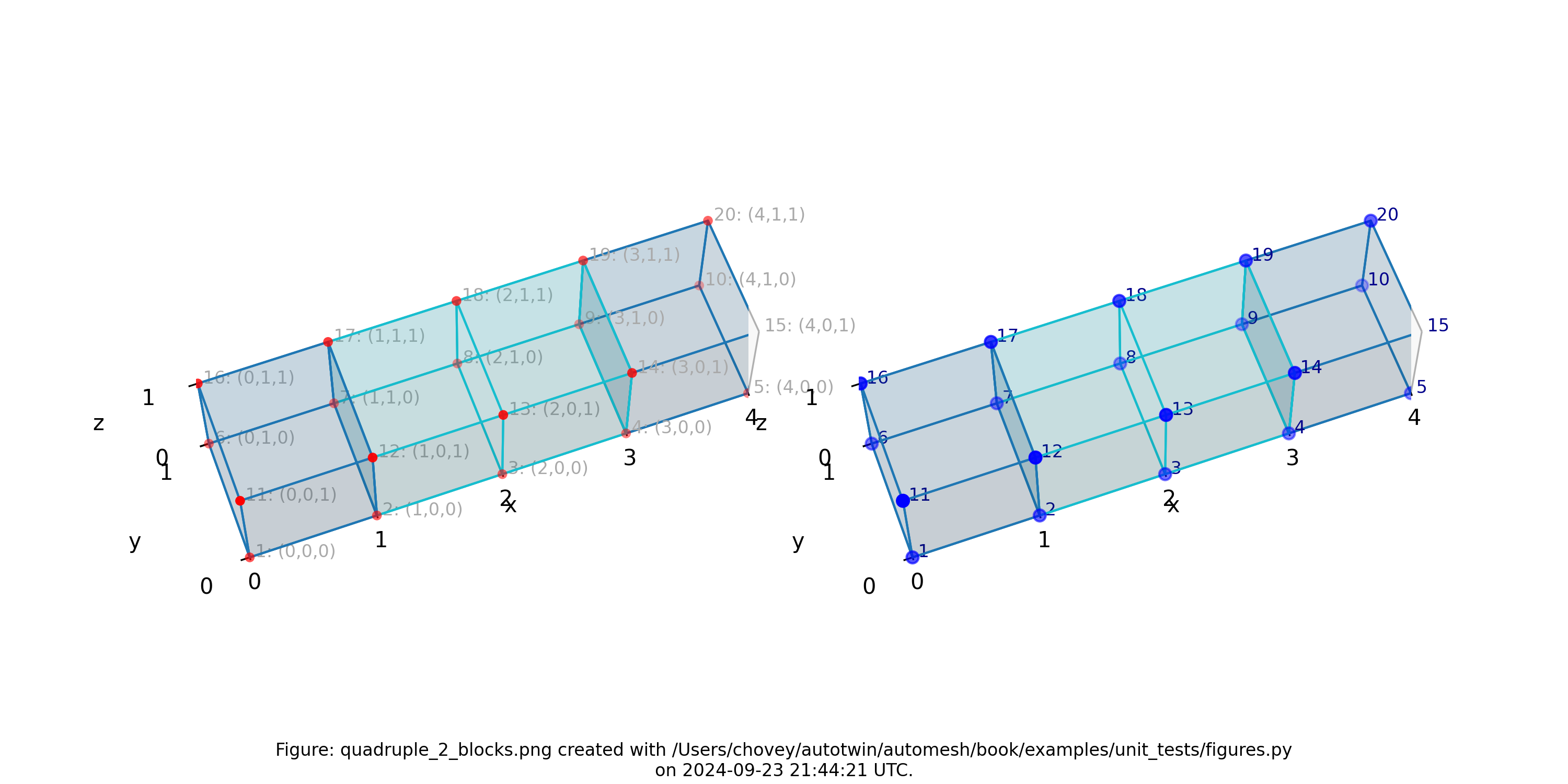

Quadruple with Two Blocks

100 # x:1 y:1 z:1

101 # 2 1 1

101 # 3 1 1

100 # 4 1 1

where segmentations 100 and 101 denote blocks 100 and 101,

respectively, in the finite element mesh.

Figure: Mesh composed of two blocks with two elements each,

propagating along the x-axis, (left) lattice node numbers, (right) mesh

node numbers.

Quadruple with Two Blocks and Void

102 # x:1 y:1 z:1

103 # 2 1 1

0 # 3 1 1

102 # 4 1 1

where segmentations 102 and 103 denote blocks 102 and 103,

respectively, in the finite element mesh, and segmentation 0 is excluded from the finite element mesh.

Figure: Mesh composed of one block with two elements, a second block with one

element, and a void, propagating along the x-axis, (left) lattice node

numbers, (right) mesh node numbers.

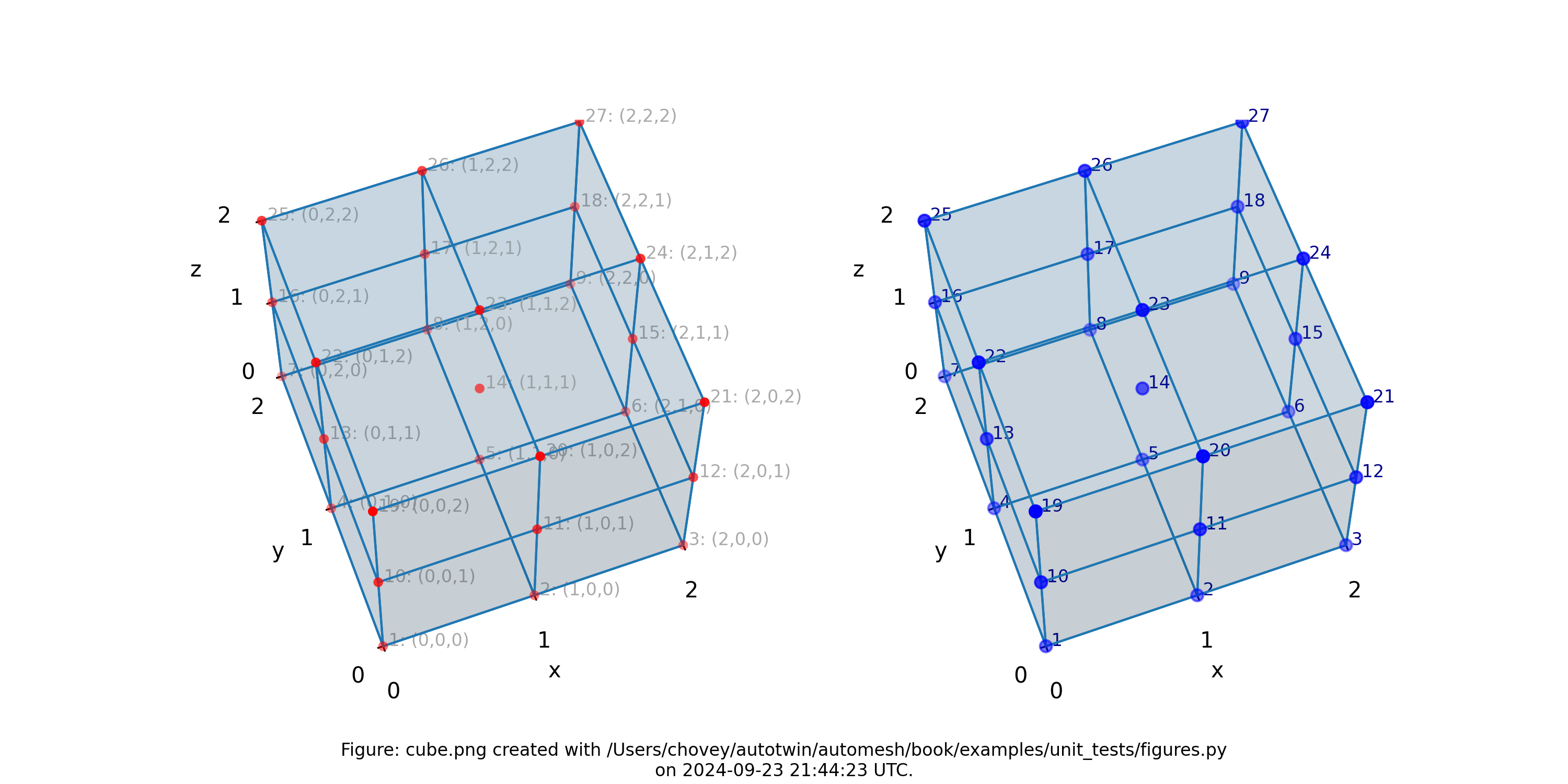

Cube

11 # x:1 y:1 z:1

11 # _ 2 _ 1 1

11 # 1 2 1

11 # _ 2 _ 2 _ 1

11 # 1 1 2

11 # _ 2 _ 1 2

11 # 1 2 2

11 # _ 2 _ 2 _ 2

where the segmentation 11 denotes block 11 in the finite element mesh.

Figure: Mesh composed of one block with eight elements, (left) lattice node numbers, (right) mesh node numbers.

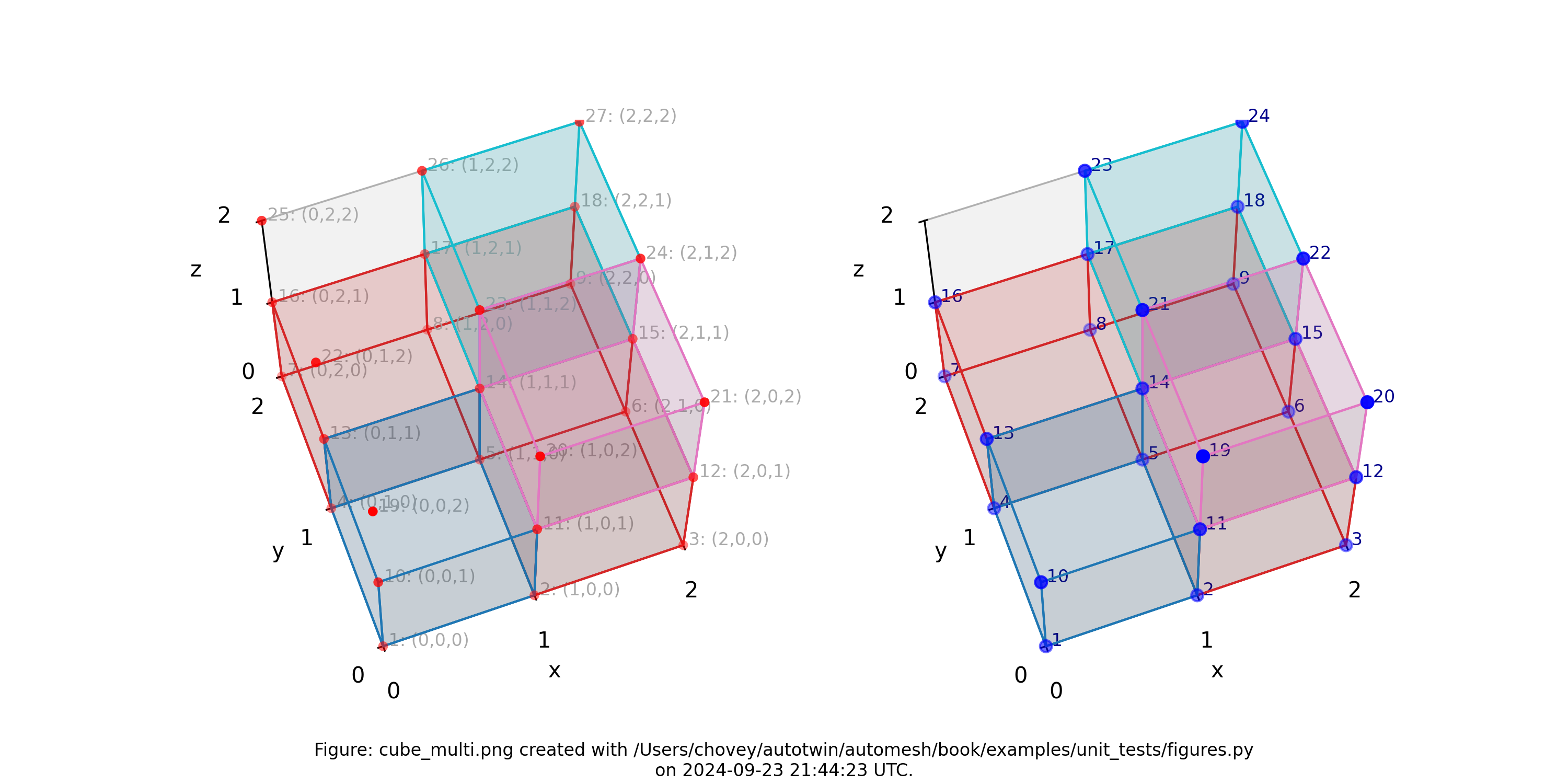

Cube with Multi Blocks and Void

82 # x:1 y:1 z:1

2 # _ 2 _ 1 1

2 # 1 2 1

2 # _ 2 _ 2 _ 1

0 # 1 1 2

31 # _ 2 _ 1 2

0 # 1 2 2

44 # _ 2 _ 2 _ 2

where segmentations 82, 2, 31, and 44 denote blocks 82, 2, 31,

and 44, respectively, in the finite element mesh, and segmentation 0 is

excluded from the finite element mesh.

Figure: Mesh composed of four blocks (block 82 has one element, block 2

has three elements, block 31 has one element, and block 44 has one

element), (left) lattice node numbers, (right) mesh node numbers.
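The block sizes quoted in the caption can be tallied directly from the eight labels listed above. This is a short NumPy sketch; the variable names are illustrative.

```python
import numpy as np

# The eight labels of the example above, in file order.
labels = np.array([82, 2, 2, 2, 0, 31, 0, 44], dtype=np.uint8)

# Count the voxels of each non-void label; each label becomes one block.
ids, counts = np.unique(labels[labels != 0], return_counts=True)
blocks = {int(i): int(c) for i, c in zip(ids, counts)}

print(blocks)  # {2: 3, 31: 1, 44: 1, 82: 1}
```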

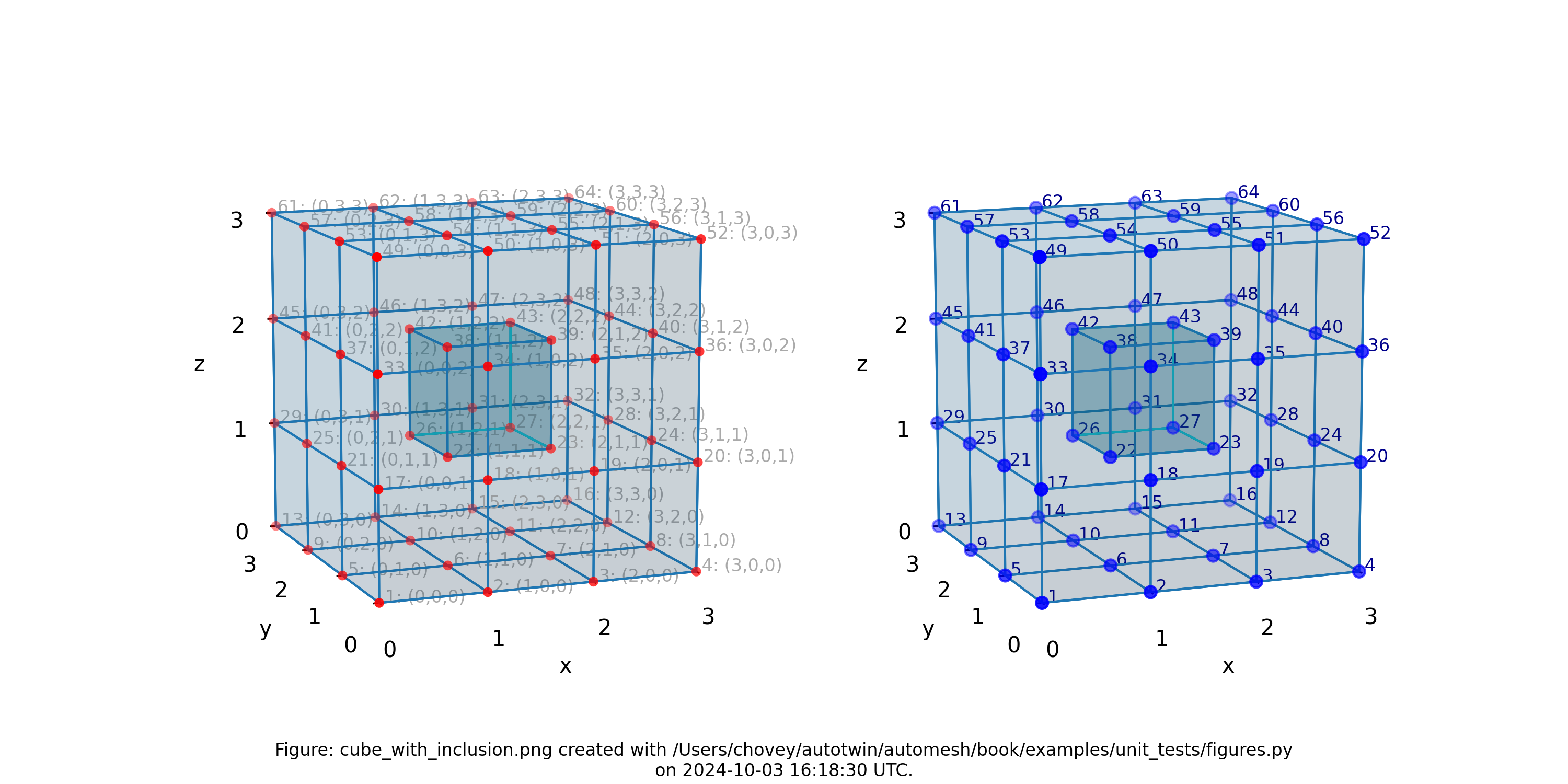

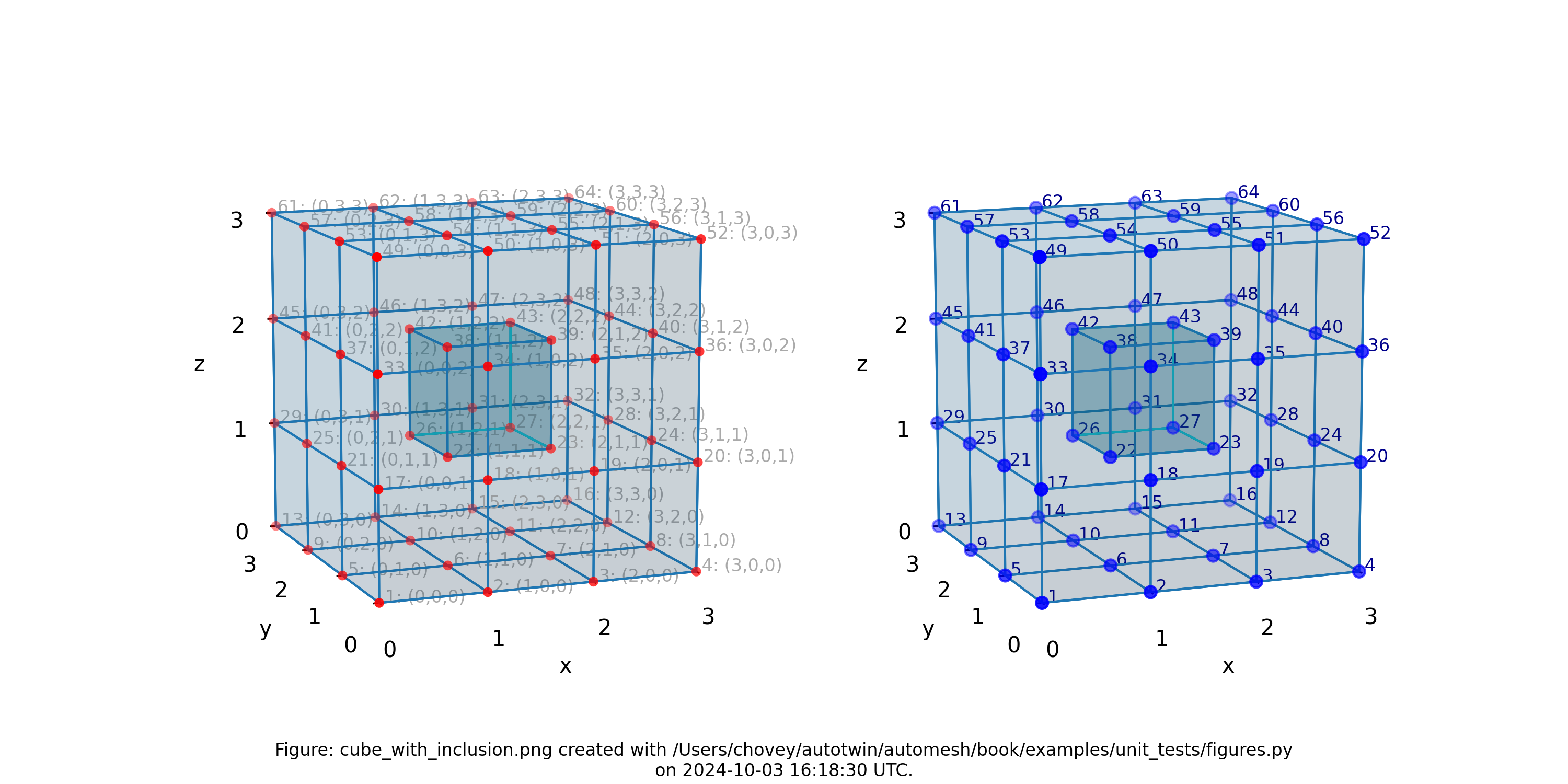

Cube with Inclusion

11 # x:1 y:1 z:1

11 # 2 1 1

11 # _ 3 _ 1 1

11 # 1 2 1

11 # 2 2 1

11 # _ 3 _ 2 1

11 # 1 3 1

11 # 2 3 1

11 # _ 3 _ 3 _ 1

11 # 1 1 2

11 # 2 1 2

11 # _ 3 _ 1 2

11 # 1 2 2

88 # 2 2 2

11 # _ 3 _ 2 2

11 # 1 3 2

11 # 2 3 2

11 # _ 3 _ 3 _ 2

11 # 1 1 3

11 # 2 1 3

11 # _ 3 _ 1 3

11 # 1 2 3

11 # 2 2 3

11 # _ 3 _ 2 3

11 # 1 3 3

11 # 2 3 3

11 # _ 3 _ 3 _ 3

Figure: Mesh composed of 26 voxels (block 11) and a one-voxel inclusion

(block 88), (left) lattice node numbers, (right) mesh node numbers.

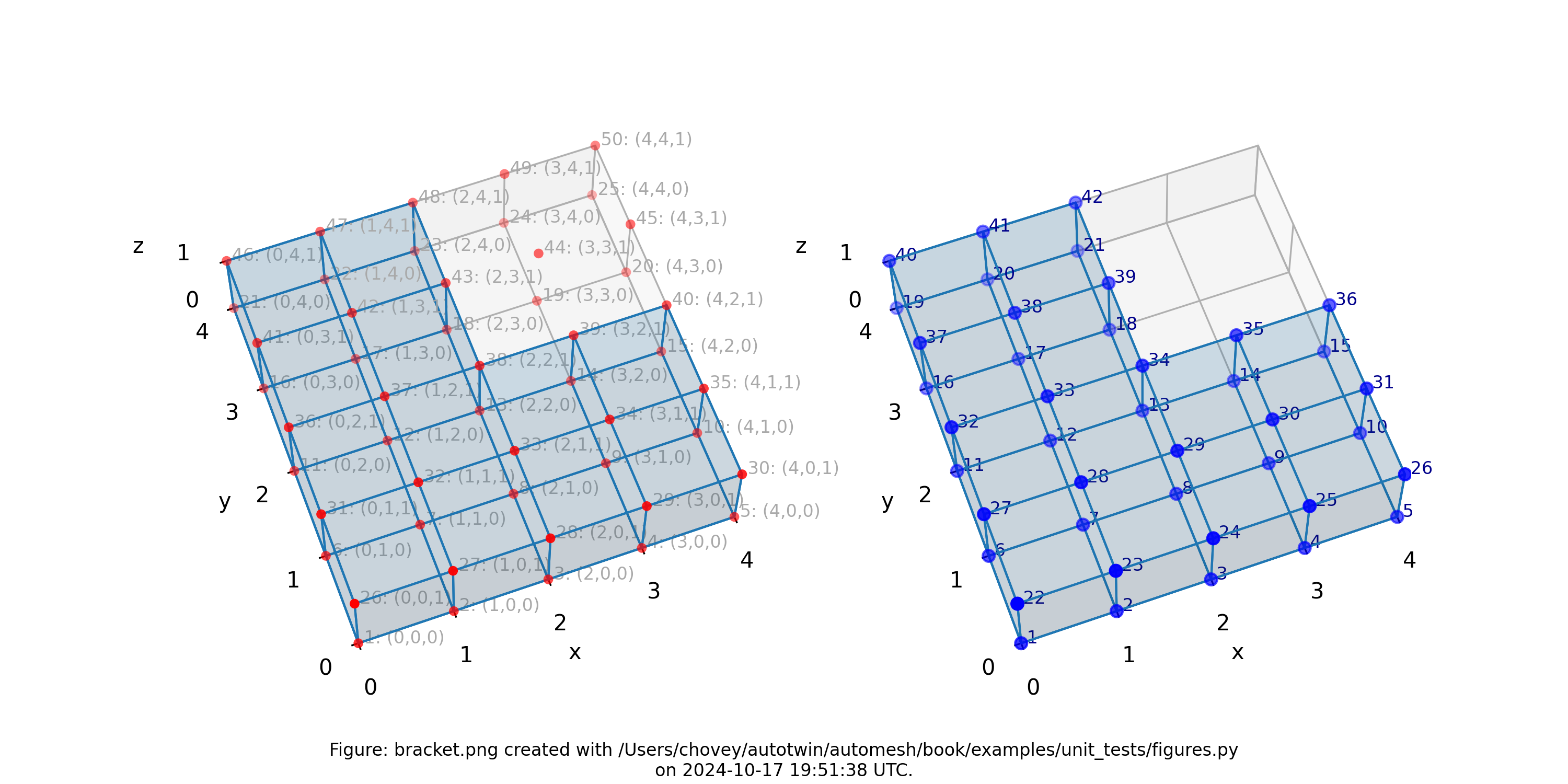

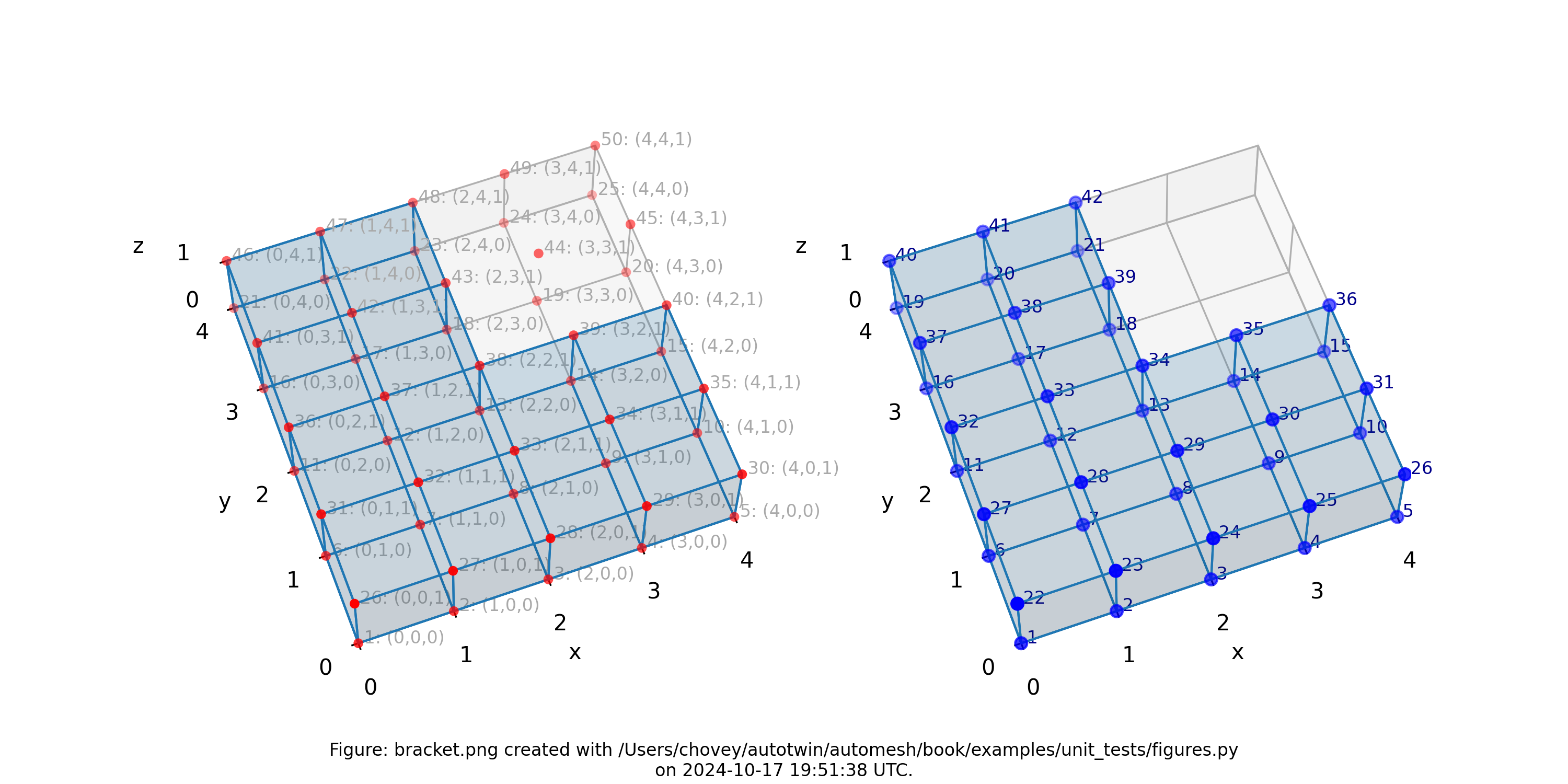

Bracket

1 # x:1 y:1 z:1

1 # 2 1 1

1 # 3 1 1

1 # _ 4 _ 1 1

1 # x:1 y:2 z:1

1 # 2 2 1

1 # 3 2 1

1 # _ 4 _ 2 1

1 # x:1 y:3 z:1

1 # 2 3 1

0 # 3 3 1

0 # _ 4 _ 3 1

1 # x:1 y:4 z:1

1 # 2 4 1

0 # 3 4 1

0 # _ 4 _ 4 1

where the segmentation 1 denotes block 1 in the finite element mesh,

and segmentation 0 is excluded from the mesh.

Figure: Mesh composed of an L-shaped bracket in the xy plane.

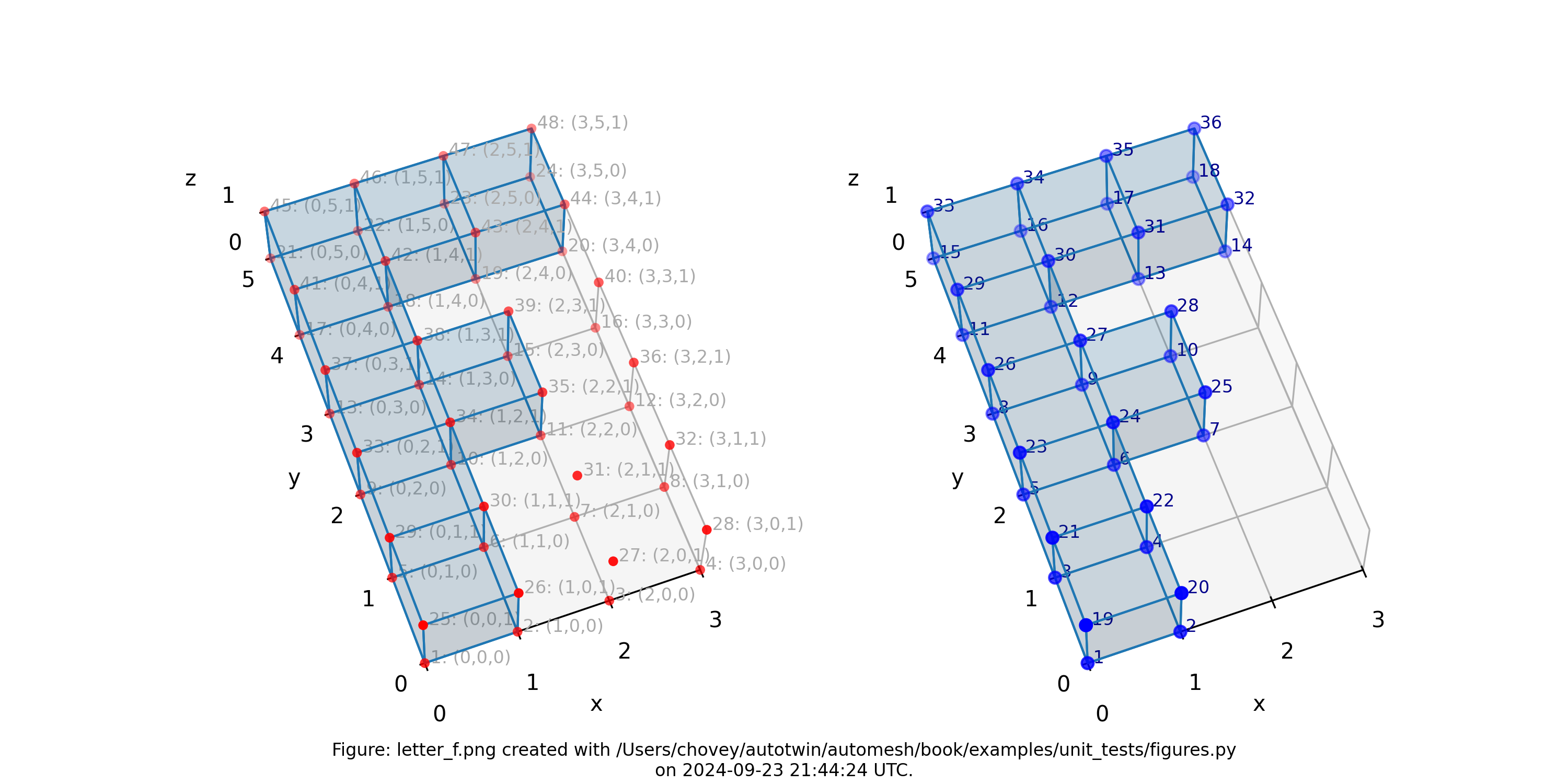

Letter F

11 # x:1 y:1 z:1

0 # 2 1 1

0 # _ 3 _ 1 1

11 # 1 2 1

0 # 2 2 1

0 # _ 3 _ 2 1

11 # 1 3 1

11 # 2 3 1

0 # _ 3 _ 3 1

11 # 1 4 1

0 # 2 4 1

0 # _ 3 _ 4 1

11 # 1 5 1

11 # 2 5 1

11 # _ 3 _ 5 _ 1

where the segmentation 11 denotes block 11 in the finite element mesh.

Figure: Mesh composed of a single block with eight elements, (left) lattice node numbers, (right) mesh node numbers.

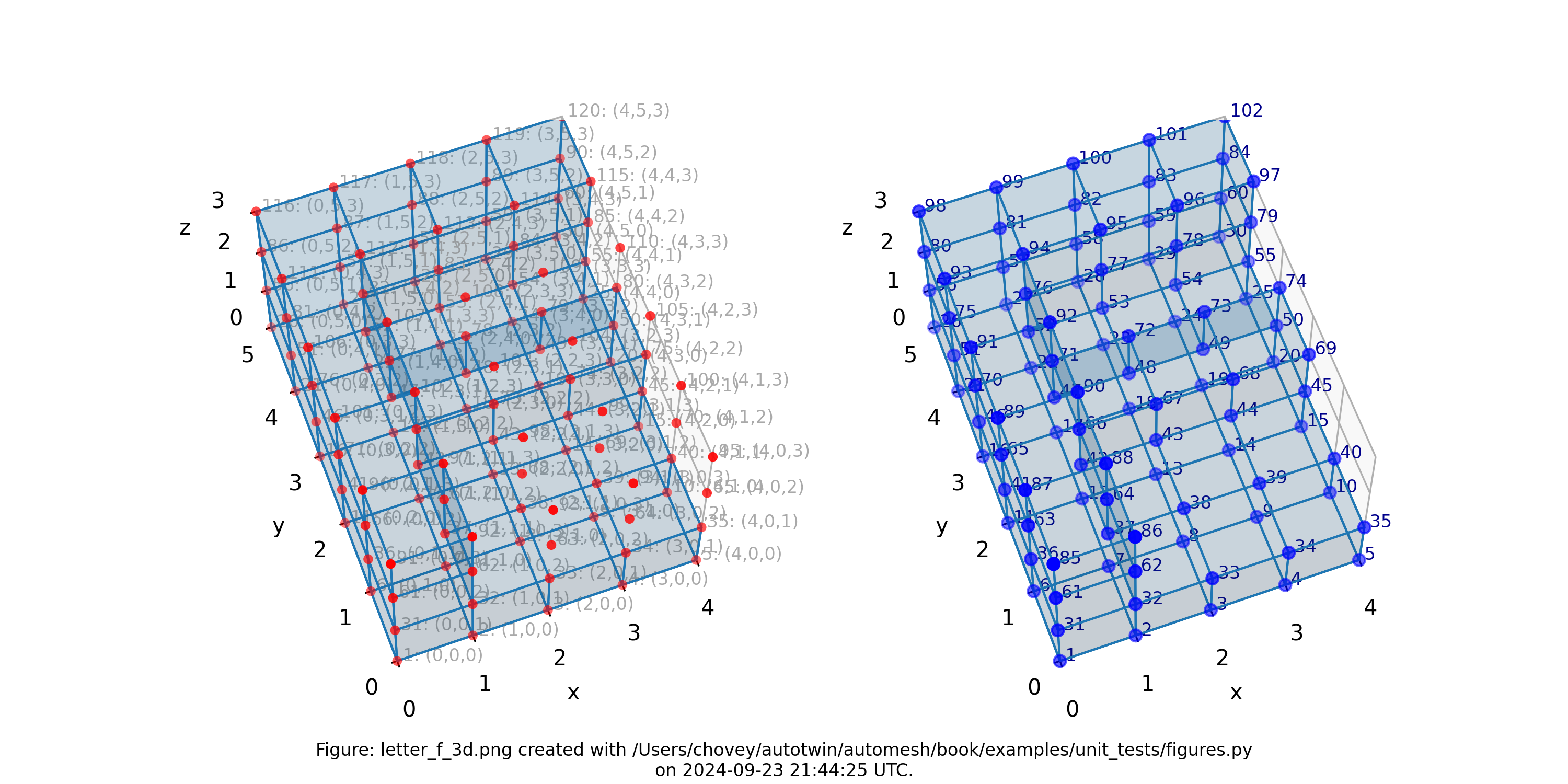

Letter F in 3D

1 # x:1 y:1 z:1

1 # 2 1 1

1 # 3 1 1

1 # _ 4 _ 1 1

1 # 1 2 1

1 # 2 2 1

1 # 3 2 1

1 # _ 4 _ 2 1

1 # 1 3 1

1 # 2 3 1

1 # 3 3 1

1 # _ 4 _ 3 1

1 # 1 4 1

1 # 2 4 1

1 # 3 4 1

1 # _ 4 _ 4 1

1 # 1 5 1

1 # 2 5 1

1 # 3 5 1

1 # _ 4 _ 5 _ 1

1 # x:1 y:1 z:2

0 # 2 1 2

0 # 3 1 2

0 # _ 4 _ 1 2

1 # 1 2 2

0 # 2 2 2

0 # 3 2 2

0 # _ 4 _ 2 2

1 # 1 3 2

1 # 2 3 2

1 # 3 3 2

1 # _ 4 _ 3 2

1 # 1 4 2

0 # 2 4 2

0 # 3 4 2

0 # _ 4 _ 4 2

1 # 1 5 2

1 # 2 5 2

1 # 3 5 2

1 # _ 4 _ 5 _ 2

1 # x:1 y:1 z:3

0 # 2 1 3

0 # 3 1 3

0 # _ 4 _ 1 3

1 # 1 2 3

0 # 2 2 3

0 # 3 2 3

0 # _ 4 _ 2 3

1 # 1 3 3

0 # 2 3 3

0 # 3 3 3

0 # _ 4 _ 3 3

1 # 1 4 3

0 # 2 4 3

0 # 3 4 3

0 # _ 4 _ 4 3

1 # 1 5 3

1 # 2 5 3

1 # 3 5 3

1 # _ 4 _ 5 _ 3

which corresponds to --nelx 4, --nely 5, and --nelz 3 in the

command line interface.
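The relationship between the flat column and the three dimensions can be checked in NumPy. The sketch below follows the ordering shown in the comments of the listing above (x varies fastest, then y, then z), so the column reshapes to an array indexed [z, y, x]. The variable names are illustrative.

```python
import numpy as np

nelx, nely, nelz = 4, 5, 3

# The 60 labels of the listing above, in file order.
column = np.array(
    [1] * 20  # z = 1: solid base layer
    + [1, 0, 0, 0, 1, 0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 0, 1, 1, 1, 1]  # z = 2
    + [1, 0, 0, 0] * 4 + [1, 1, 1, 1],  # z = 3
    dtype=np.uint8,
)

# With x varying fastest, the column reshapes to an array indexed [z, y, x].
volume = column.reshape((nelz, nely, nelx))

print(volume.shape)  # (3, 5, 4)
print(int(np.count_nonzero(volume)))  # 39
print(volume[1, 2].tolist())  # [1, 1, 1, 1], the row at z = 2, y = 3
```

The count of 39 non-void voxels matches the thirty-nine elements stated in the figure caption.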

Figure: Mesh composed of a single block with thirty-nine elements, (left) lattice node numbers, (right) mesh node numbers.

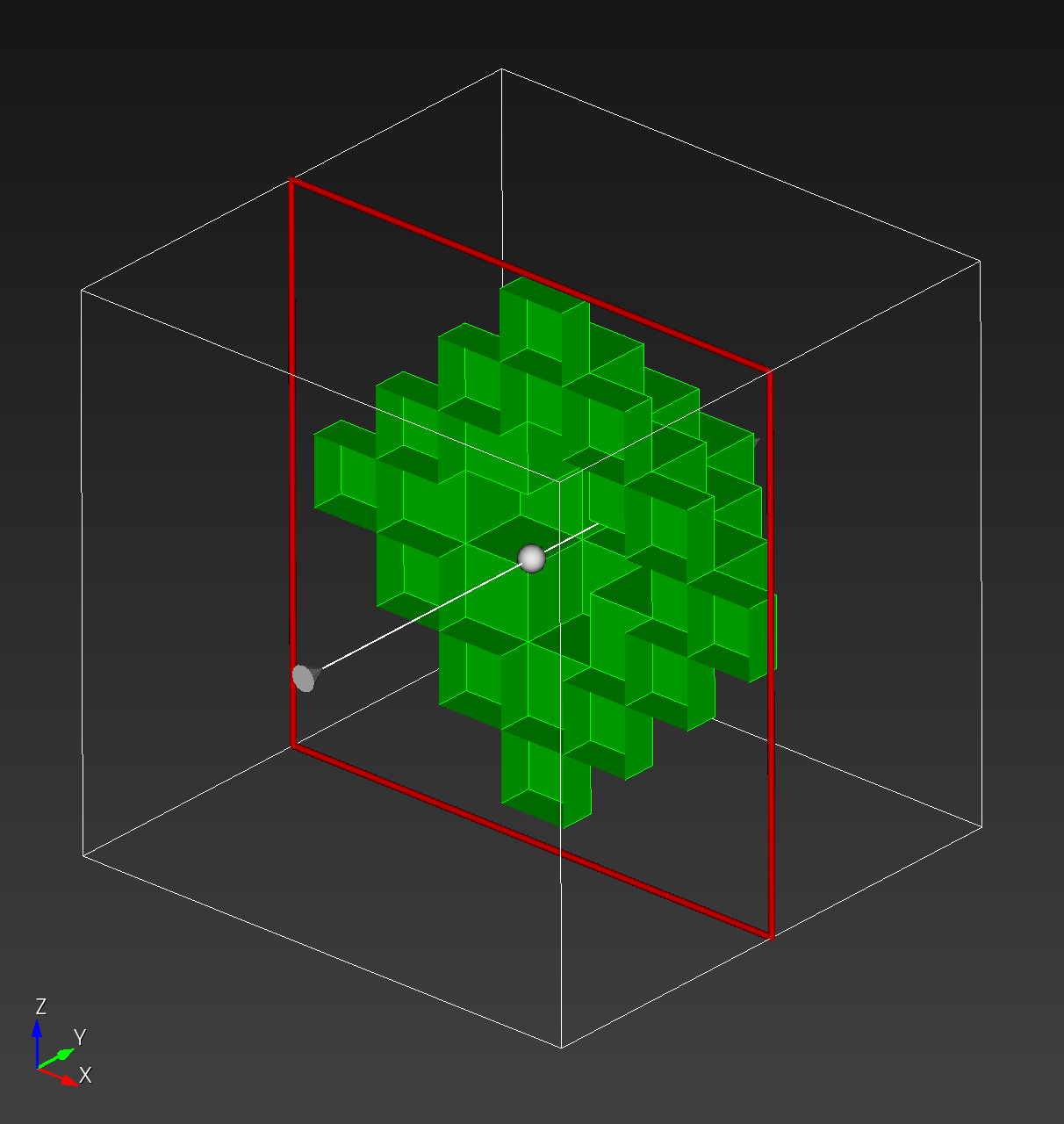

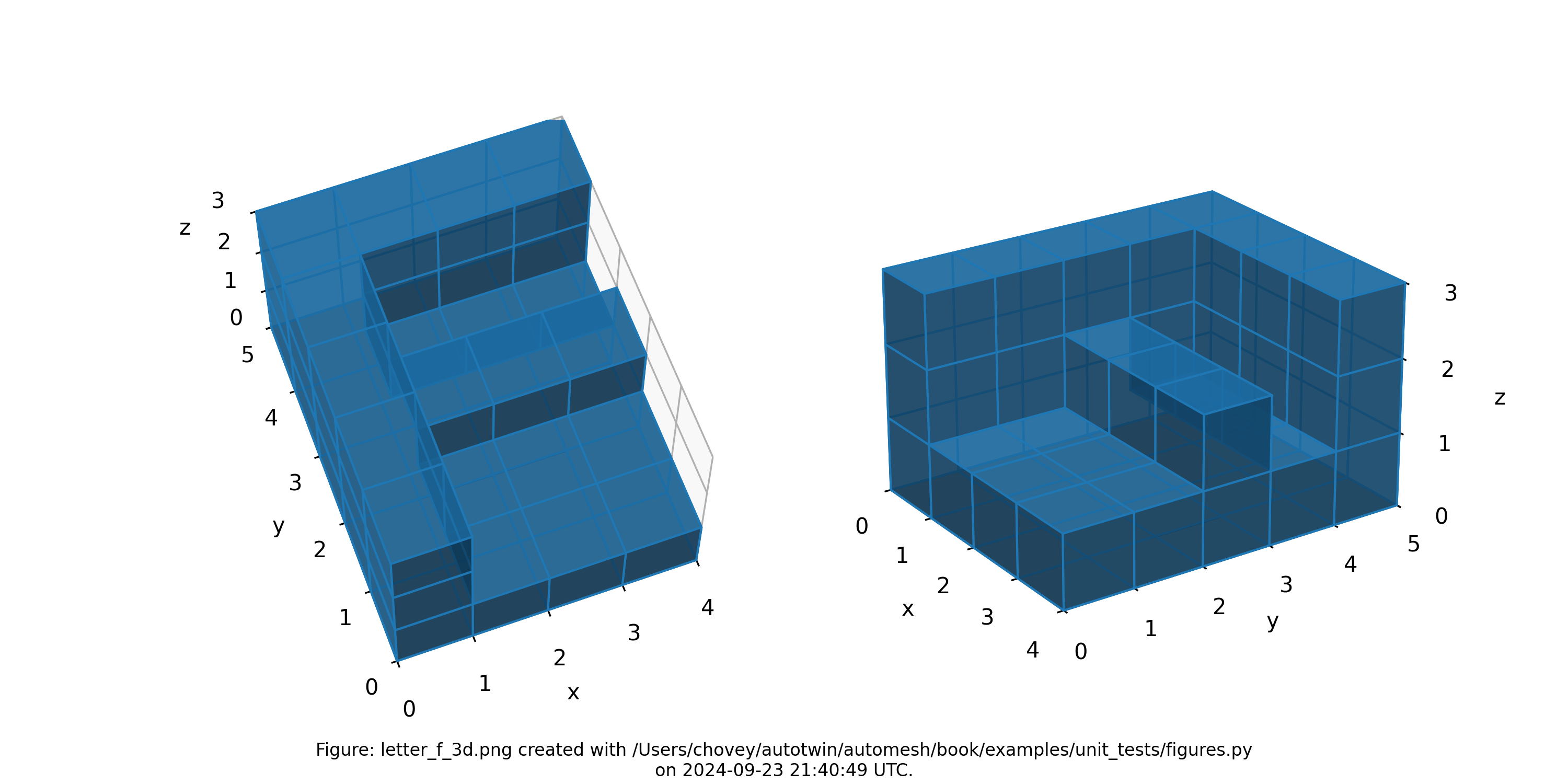

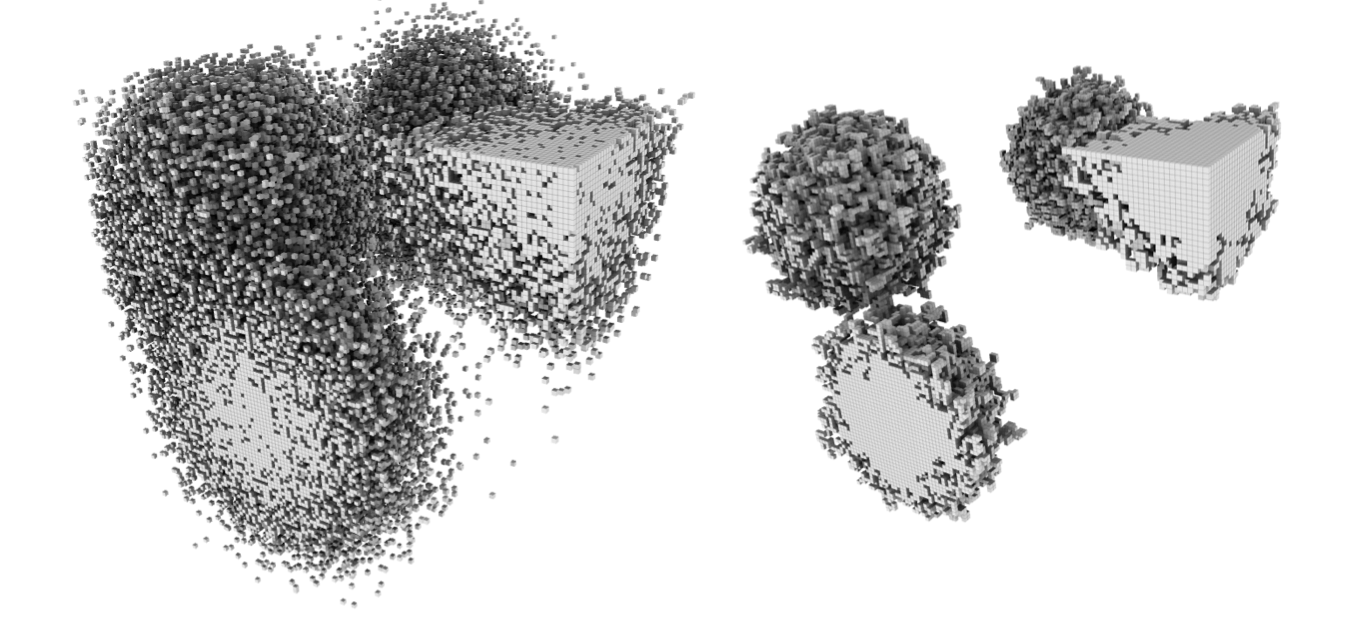

The shape of the solid segmentation is more easily seen without the lattice and element nodes, and with decreased opacity, as shown below:

Figure: Mesh composed of a single block with thirty-nine elements, shown with decreased opacity and without lattice and element node numbers.

Sparse

0 # x:1 y:1 z:1

0 # 2 1 1

0 # 3 1 1

0 # 4 1 1

2 # _ 5 _ 1 1

0 # 1 2 1

1 # 2 2 1

0 # 3 2 1

0 # 4 2 1

2 # _ 5 _ 2 1

1 # 1 3 1

2 # 2 3 1

0 # 3 3 1

2 # 4 3 1

0 # _ 5 _ 3 1

0 # 1 4 1

1 # 2 4 1

0 # 3 4 1

2 # 4 4 1

0 # _ 5 _ 4 1

1 # 1 5 1

0 # 2 5 1

0 # 3 5 1

0 # 4 5 1

1 # _ 5 _ 5 _ 1

2 # x:1 y:1 z:2

0 # 2 1 2

2 # 3 1 2

0 # 4 1 2

0 # _ 5 _ 1 2

1 # 1 2 2

1 # 2 2 2

0 # 3 2 2

2 # 4 2 2

2 # _ 5 _ 2 2

2 # 1 3 2

0 # 2 3 2

0 # 3 3 2

0 # 4 3 2

0 # _ 5 _ 3 2

1 # 1 4 2

0 # 2 4 2

0 # 3 4 2

2 # 4 4 2

0 # _ 5 _ 4 2

2 # 1 5 2

0 # 2 5 2

2 # 3 5 2

0 # 4 5 2

2 # _ 5 _ 5 _ 2

0 # x:1 y:1 z:3

0 # 2 1 3

1 # 3 1 3

0 # 4 1 3

2 # _ 5 _ 1 3

0 # 1 2 3

0 # 2 2 3

0 # 3 2 3

1 # 4 2 3

2 # _ 5 _ 2 3

0 # 1 3 3

0 # 2 3 3

2 # 3 3 3

2 # 4 3 3

2 # _ 5 _ 3 3

0 # 1 4 3

0 # 2 4 3

1 # 3 4 3

0 # 4 4 3

1 # _ 5 _ 4 3

0 # 1 5 3

1 # 2 5 3

0 # 3 5 3

1 # 4 5 3

0 # _ 5 _ 5 _ 3

0 # x:1 y:1 z:4

1 # 2 1 4

2 # 3 1 4

1 # 4 1 4

2 # _ 5 _ 1 4

2 # 1 2 4

0 # 2 2 4

2 # 3 2 4

0 # 4 2 4

1 # _ 5 _ 2 4

1 # 1 3 4

2 # 2 3 4

2 # 3 3 4

0 # 4 3 4

0 # _ 5 _ 3 4

2 # 1 4 4

1 # 2 4 4

1 # 3 4 4

1 # 4 4 4

1 # _ 5 _ 4 4

0 # 1 5 4

0 # 2 5 4

1 # 3 5 4

0 # 4 5 4

0 # _ 5 _ 5 _ 4

0 # x:1 y:1 z:5

1 # 2 1 5

0 # 3 1 5

2 # 4 1 5

0 # _ 5 _ 1 5

1 # 1 2 5

0 # 2 2 5

0 # 3 2 5

0 # 4 2 5

2 # _ 5 _ 2 5

0 # 1 3 5

1 # 2 3 5

0 # 3 3 5

0 # 4 3 5

0 # _ 5 _ 3 5

1 # 1 4 5

0 # 2 4 5

0 # 3 4 5

0 # 4 4 5

0 # _ 5 _ 4 5

0 # 1 5 5

0 # 2 5 5

1 # 3 5 5

2 # 4 5 5

1 # _ 5 _ 5 _ 5

where segmentation 1 denotes block 1 and segmentation 2 denotes block 2 in the finite element mesh (with segmentation 0 excluded).
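The mapping from segmentation IDs to blocks can be sketched as a count of voxels per ID, with the excluded IDs dropped. The function name `voxels_per_block` and the small `demo` array are hypothetical illustrations; `demo` is not the Sparse data set.

```python
import numpy as np


def voxels_per_block(segmentation, excluded_ids=(0,)):
    """Count the voxels of each segmentation ID, skipping excluded IDs."""
    ids, counts = np.unique(segmentation, return_counts=True)
    return {
        int(block_id): int(count)
        for block_id, count in zip(ids, counts)
        if int(block_id) not in excluded_ids
    }


# Illustrative (2 x 2 x 2) segmentation with IDs 0 (void), 1, and 2
demo = np.array(
    [
        [[0, 1], [2, 2]],
        [[1, 0], [0, 2]],
    ],
    dtype=np.uint8,
)
block_sizes = voxels_per_block(demo)
```

Each key of `block_sizes` is a block number and each value is the number of hexahedral elements that block would contain.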

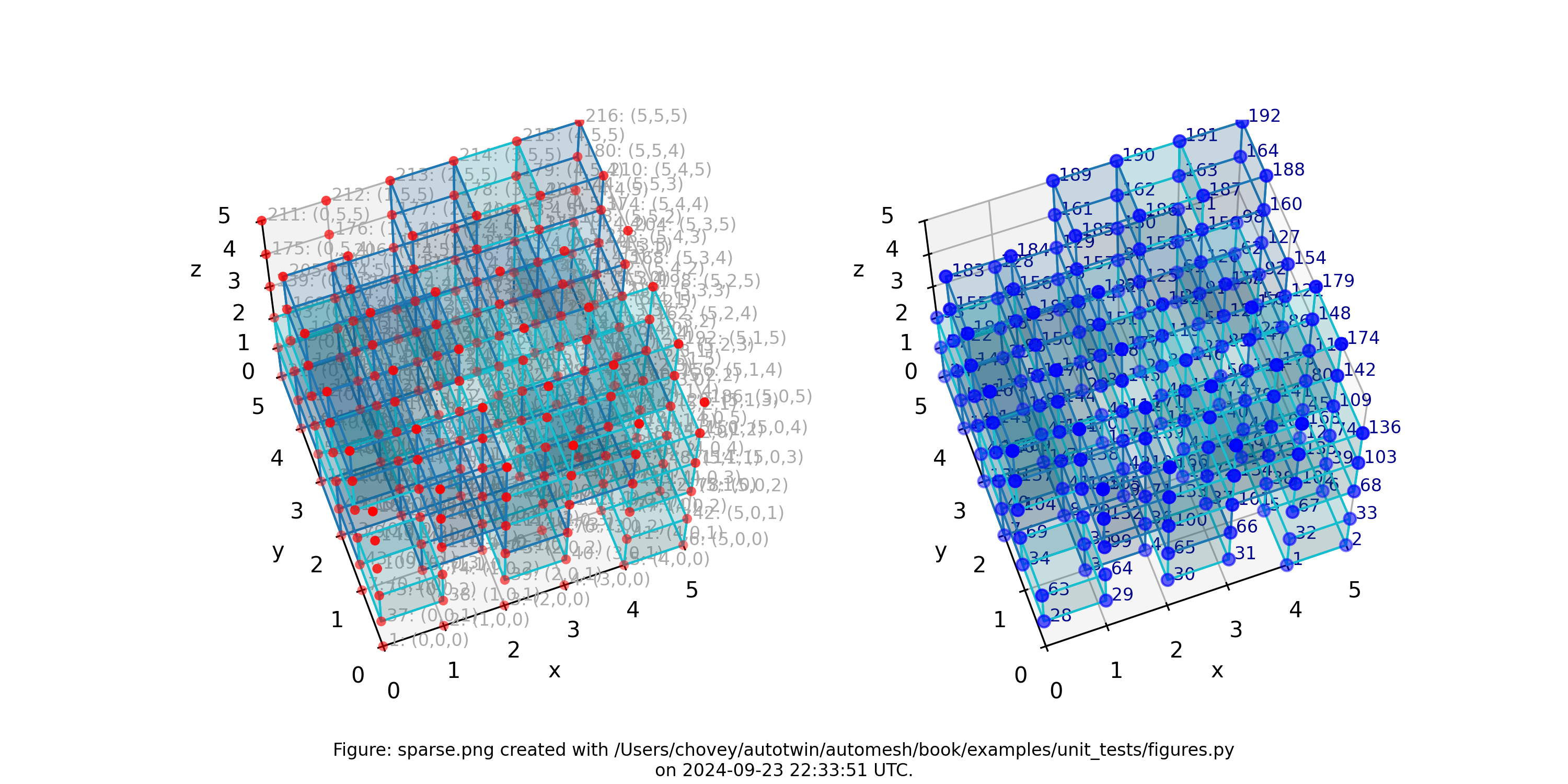

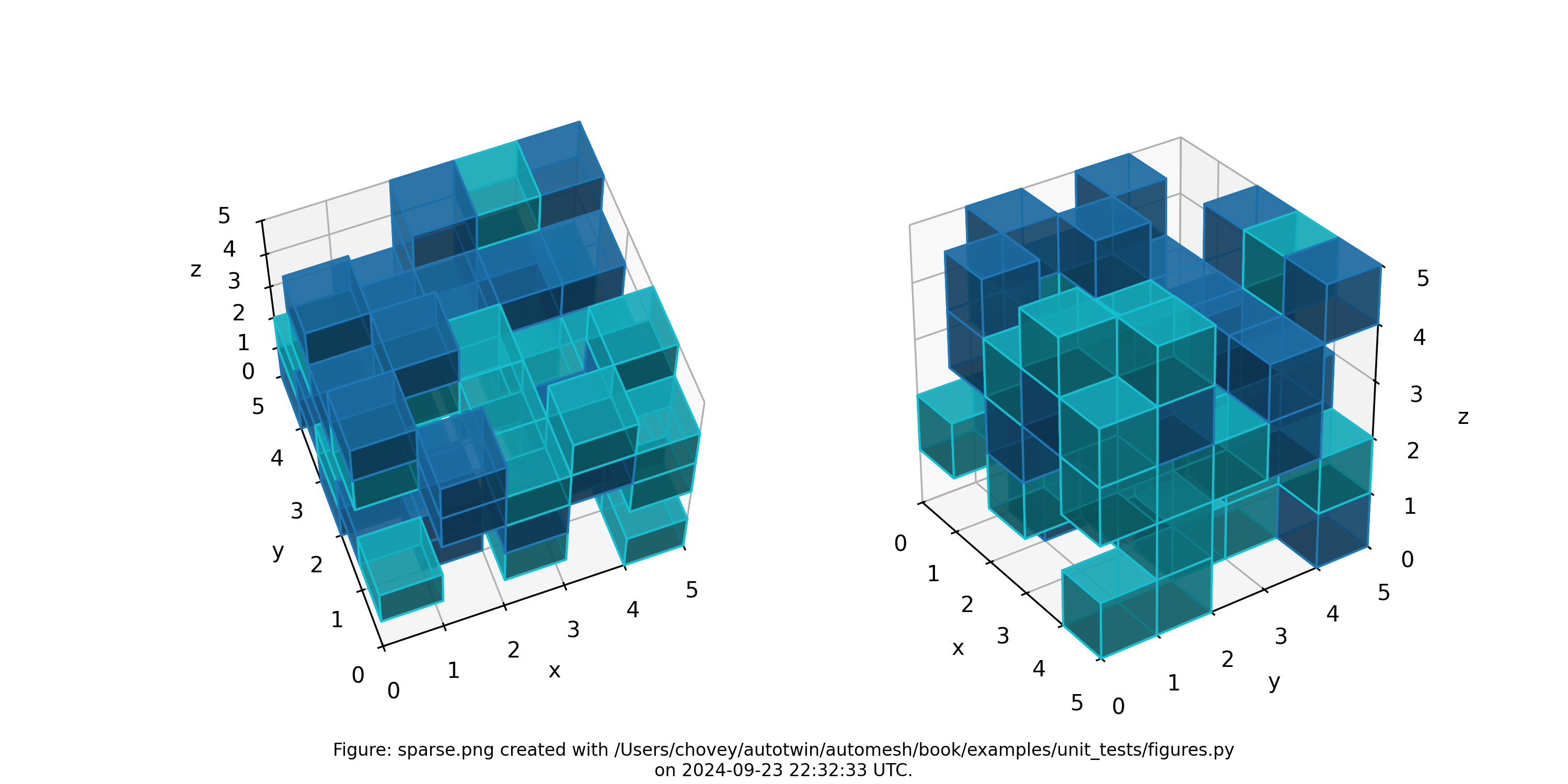

Figure: Sparse mesh composed of two materials at random voxel locations.

Figure: Sparse mesh composed of two materials at random voxel locations, shown with decreased opacity and without lattice and element node numbers.

Source

The figures were created with the following Python files:

examples_data.py

r"""This module, examples_data.py, contains the data for

the unit test examples.

"""

from typing import Final

import numpy as np

import examples_types as ty

# Type aliases

Example = ty.Example

COMMON_TITLE: Final[str] = "Lattice Index and Coordinates: "

class Single(Example):

"""A specific example of a single voxel."""

figure_title: str = COMMON_TITLE + "Single"

file_stem: str = "single"

segmentation = np.array(

[

[

[

11,

],

],

],

dtype=np.uint8,

)

included_ids = (11,)

gold_lattice = ((1, 2, 4, 3, 5, 6, 8, 7),)

gold_mesh_lattice_connectivity = (

(

11,

(1, 2, 4, 3, 5, 6, 8, 7),

),

)

gold_mesh_element_connectivity = (

(

11,

(1, 2, 4, 3, 5, 6, 8, 7),

),

)

class DoubleX(Example):

"""A specific example of a double voxel, coursed along the x-axis."""

figure_title: str = COMMON_TITLE + "DoubleX"

file_stem: str = "double_x"

segmentation = np.array(

[

[

[

11,

11,

],

],

],

dtype=np.uint8,

)

included_ids = (11,)

gold_lattice = (

(1, 2, 5, 4, 7, 8, 11, 10),

(2, 3, 6, 5, 8, 9, 12, 11),

)

gold_mesh_lattice_connectivity = (

(

11,

(1, 2, 5, 4, 7, 8, 11, 10),

(2, 3, 6, 5, 8, 9, 12, 11),

),

)

gold_mesh_element_connectivity = (

(

11,

(1, 2, 5, 4, 7, 8, 11, 10),

(2, 3, 6, 5, 8, 9, 12, 11),

),

)

class DoubleY(Example):

"""A specific example of a double voxel, coursed along the y-axis."""

figure_title: str = COMMON_TITLE + "DoubleY"

file_stem: str = "double_y"

segmentation = np.array(

[

[

[

11,

],

[

11,

],

],

],

dtype=np.uint8,

)

included_ids = (11,)

gold_lattice = (

(1, 2, 4, 3, 7, 8, 10, 9),

(3, 4, 6, 5, 9, 10, 12, 11),

)

gold_mesh_lattice_connectivity = (

(

11,

(1, 2, 4, 3, 7, 8, 10, 9),

(3, 4, 6, 5, 9, 10, 12, 11),

),

)

gold_mesh_element_connectivity = (

(

11,

(1, 2, 4, 3, 7, 8, 10, 9),

(3, 4, 6, 5, 9, 10, 12, 11),

),

)

class TripleX(Example):

"""A triple voxel lattice, coursed along the x-axis."""

figure_title: str = COMMON_TITLE + "Triple"

file_stem: str = "triple_x"

segmentation = np.array(

[

[

[

11,

11,

11,

],

],

],

dtype=np.uint8,

)

included_ids = (11,)

gold_lattice = (

(1, 2, 6, 5, 9, 10, 14, 13),

(2, 3, 7, 6, 10, 11, 15, 14),

(3, 4, 8, 7, 11, 12, 16, 15),

)

gold_mesh_lattice_connectivity = (

(

11,

(1, 2, 6, 5, 9, 10, 14, 13),

(2, 3, 7, 6, 10, 11, 15, 14),

(3, 4, 8, 7, 11, 12, 16, 15),

),

)

gold_mesh_element_connectivity = (

(

11,

(1, 2, 6, 5, 9, 10, 14, 13),

(2, 3, 7, 6, 10, 11, 15, 14),

(3, 4, 8, 7, 11, 12, 16, 15),

),

)

class QuadrupleX(Example):

"""A quadruple voxel lattice, coursed along the x-axis."""

figure_title: str = COMMON_TITLE + "Quadruple"

file_stem: str = "quadruple_x"

segmentation = np.array(

[

[

[

11,

11,

11,

11,

],

],

],

dtype=np.uint8,

)

included_ids = (11,)

gold_lattice = (

(1, 2, 7, 6, 11, 12, 17, 16),

(2, 3, 8, 7, 12, 13, 18, 17),

(3, 4, 9, 8, 13, 14, 19, 18),

(4, 5, 10, 9, 14, 15, 20, 19),

)

gold_mesh_lattice_connectivity = (

(

11,

(1, 2, 7, 6, 11, 12, 17, 16),

(2, 3, 8, 7, 12, 13, 18, 17),

(3, 4, 9, 8, 13, 14, 19, 18),

(4, 5, 10, 9, 14, 15, 20, 19),

),

)

gold_mesh_element_connectivity = (

(

11,

(1, 2, 7, 6, 11, 12, 17, 16),

(2, 3, 8, 7, 12, 13, 18, 17),

(3, 4, 9, 8, 13, 14, 19, 18),

(4, 5, 10, 9, 14, 15, 20, 19),

),

)

class Quadruple2VoidsX(Example):

"""A quadruple voxel lattice, coursed along the x-axis, with two

intermediate voxels in the segmentation being void.

"""

figure_title: str = COMMON_TITLE + "Quadruple2VoidsX"

file_stem: str = "quadruple_2_voids_x"

segmentation = np.array(

[

[

[

99,

0,

0,

99,

],

],

],

dtype=np.uint8,

)

included_ids = (99,)

gold_lattice = (

(1, 2, 7, 6, 11, 12, 17, 16),

(2, 3, 8, 7, 12, 13, 18, 17),

(3, 4, 9, 8, 13, 14, 19, 18),

(4, 5, 10, 9, 14, 15, 20, 19),

)

gold_mesh_lattice_connectivity = (

(

99,

(1, 2, 7, 6, 11, 12, 17, 16),

(4, 5, 10, 9, 14, 15, 20, 19),

),

)

gold_mesh_element_connectivity = (

(

99,

(1, 2, 6, 5, 9, 10, 14, 13),

(3, 4, 8, 7, 11, 12, 16, 15),

),

)

class Quadruple2Blocks(Example):

"""A quadruple voxel lattice, with the first intermediate voxel being

the second block, as is the second intermediate voxel.

"""

figure_title: str = COMMON_TITLE + "Quadruple2Blocks"

file_stem: str = "quadruple_2_blocks"

segmentation = np.array(

[

[

[

100,

101,

101,

100,

],

],

],

dtype=np.uint8,

)

included_ids = (

100,

101,

)

gold_lattice = (

(1, 2, 7, 6, 11, 12, 17, 16),

(2, 3, 8, 7, 12, 13, 18, 17),

(3, 4, 9, 8, 13, 14, 19, 18),

(4, 5, 10, 9, 14, 15, 20, 19),

)

gold_mesh_lattice_connectivity = (

(

100,

(1, 2, 7, 6, 11, 12, 17, 16),

(4, 5, 10, 9, 14, 15, 20, 19),

),

(

101,

(2, 3, 8, 7, 12, 13, 18, 17),

(3, 4, 9, 8, 13, 14, 19, 18),

),

)

gold_mesh_element_connectivity = (

(

100,

(1, 2, 7, 6, 11, 12, 17, 16),

(4, 5, 10, 9, 14, 15, 20, 19),

),

(

101,

(2, 3, 8, 7, 12, 13, 18, 17),

(3, 4, 9, 8, 13, 14, 19, 18),

),

)

class Quadruple2BlocksVoid(Example):

"""A quadruple voxel lattice, with the first intermediate voxel being

the second block and the second intermediate voxel being void.

"""

figure_title: str = COMMON_TITLE + "Quadruple2BlocksVoid"

file_stem: str = "quadruple_2_blocks_void"

segmentation = np.array(

[

[

[

102,

103,

0,

102,

],

],

],

dtype=np.uint8,

)

included_ids = (

102,

103,

)

gold_lattice = (

(1, 2, 7, 6, 11, 12, 17, 16),

(2, 3, 8, 7, 12, 13, 18, 17),

(3, 4, 9, 8, 13, 14, 19, 18),

(4, 5, 10, 9, 14, 15, 20, 19),

)

gold_mesh_lattice_connectivity = (

(

102,

(1, 2, 7, 6, 11, 12, 17, 16),

(4, 5, 10, 9, 14, 15, 20, 19),

),

(

103,

(2, 3, 8, 7, 12, 13, 18, 17),

),

)

gold_mesh_element_connectivity = (

(

102,

(1, 2, 7, 6, 11, 12, 17, 16),

(4, 5, 10, 9, 14, 15, 20, 19),

),

(

103,

(2, 3, 8, 7, 12, 13, 18, 17),

),

)

class Cube(Example):

"""A (2 x 2 x 2) voxel cube."""

figure_title: str = COMMON_TITLE + "Cube"

file_stem: str = "cube"

segmentation = np.array(

[

[

[

11,

11,

],

[

11,

11,

],

],

[

[

11,

11,

],

[

11,

11,

],

],

],

dtype=np.uint8,

)

included_ids = (11,)

gold_lattice = (

(1, 2, 5, 4, 10, 11, 14, 13),

(2, 3, 6, 5, 11, 12, 15, 14),

(4, 5, 8, 7, 13, 14, 17, 16),

(5, 6, 9, 8, 14, 15, 18, 17),

(10, 11, 14, 13, 19, 20, 23, 22),

(11, 12, 15, 14, 20, 21, 24, 23),

(13, 14, 17, 16, 22, 23, 26, 25),

(14, 15, 18, 17, 23, 24, 27, 26),

)

gold_mesh_lattice_connectivity = (

(

11,

(1, 2, 5, 4, 10, 11, 14, 13),

(2, 3, 6, 5, 11, 12, 15, 14),

(4, 5, 8, 7, 13, 14, 17, 16),

(5, 6, 9, 8, 14, 15, 18, 17),

(10, 11, 14, 13, 19, 20, 23, 22),

(11, 12, 15, 14, 20, 21, 24, 23),

(13, 14, 17, 16, 22, 23, 26, 25),

(14, 15, 18, 17, 23, 24, 27, 26),

),

)

gold_mesh_element_connectivity = (

(

11,

(1, 2, 5, 4, 10, 11, 14, 13),

(2, 3, 6, 5, 11, 12, 15, 14),

(4, 5, 8, 7, 13, 14, 17, 16),

(5, 6, 9, 8, 14, 15, 18, 17),

(10, 11, 14, 13, 19, 20, 23, 22),

(11, 12, 15, 14, 20, 21, 24, 23),

(13, 14, 17, 16, 22, 23, 26, 25),

(14, 15, 18, 17, 23, 24, 27, 26),

),

)

class CubeMulti(Example):

"""A (2 x 2 x 2) voxel cube with two voids and six elements."""

figure_title: str = COMMON_TITLE + "CubeMulti"

file_stem: str = "cube_multi"

segmentation = np.array(

[

[

[

82,

2,

],

[

2,

2,

],

],

[

[

0,

31,

],

[

0,

44,

],

],

],

dtype=np.uint8,

)

included_ids = (

82,

2,

31,

44,

)

gold_lattice = (

(1, 2, 5, 4, 10, 11, 14, 13),

(2, 3, 6, 5, 11, 12, 15, 14),

(4, 5, 8, 7, 13, 14, 17, 16),

(5, 6, 9, 8, 14, 15, 18, 17),

(10, 11, 14, 13, 19, 20, 23, 22),

(11, 12, 15, 14, 20, 21, 24, 23),

(13, 14, 17, 16, 22, 23, 26, 25),

(14, 15, 18, 17, 23, 24, 27, 26),

)

gold_mesh_lattice_connectivity = (

# (

# 0,

# (10, 11, 14, 13, 19, 20, 23, 22),

# ),

# (

# 0,

# (13, 14, 17, 16, 22, 23, 26, 25),

(

2,

(2, 3, 6, 5, 11, 12, 15, 14),

(4, 5, 8, 7, 13, 14, 17, 16),

(5, 6, 9, 8, 14, 15, 18, 17),

),

(

31,

(11, 12, 15, 14, 20, 21, 24, 23),

),

(

44,

(14, 15, 18, 17, 23, 24, 27, 26),

),

(

82,

(1, 2, 5, 4, 10, 11, 14, 13),

),

)

gold_mesh_element_connectivity = (

(

2,

(2, 3, 6, 5, 11, 12, 15, 14),

(4, 5, 8, 7, 13, 14, 17, 16),

(5, 6, 9, 8, 14, 15, 18, 17),

),

(

31,

(11, 12, 15, 14, 19, 20, 22, 21),

),

(

44,

(14, 15, 18, 17, 21, 22, 24, 23),

),

(

82,

(1, 2, 5, 4, 10, 11, 14, 13),

),

)

class CubeWithInclusion(Example):

"""A (3 x 3 x 3) voxel cube with a single voxel inclusion

at the center.

"""

figure_title: str = COMMON_TITLE + "CubeWithInclusion"

file_stem: str = "cube_with_inclusion"

segmentation = np.array(

[

[

[

11,

11,

11,

],

[

11,

11,

11,

],

[

11,

11,

11,

],

],

[

[

11,

11,

11,

],

[

11,

88,

11,

],

[

11,

11,

11,

],

],

[

[

11,

11,

11,

],

[

11,

11,

11,

],

[

11,

11,

11,

],

],

],

dtype=np.uint8,

)

included_ids = (

11,

88,

)

gold_lattice = (

(1, 2, 6, 5, 17, 18, 22, 21),

(2, 3, 7, 6, 18, 19, 23, 22),

(3, 4, 8, 7, 19, 20, 24, 23),

(5, 6, 10, 9, 21, 22, 26, 25),

(6, 7, 11, 10, 22, 23, 27, 26),

(7, 8, 12, 11, 23, 24, 28, 27),

(9, 10, 14, 13, 25, 26, 30, 29),

(10, 11, 15, 14, 26, 27, 31, 30),

(11, 12, 16, 15, 27, 28, 32, 31),

(17, 18, 22, 21, 33, 34, 38, 37),

(18, 19, 23, 22, 34, 35, 39, 38),

(19, 20, 24, 23, 35, 36, 40, 39),

(21, 22, 26, 25, 37, 38, 42, 41),

(22, 23, 27, 26, 38, 39, 43, 42),

(23, 24, 28, 27, 39, 40, 44, 43),

(25, 26, 30, 29, 41, 42, 46, 45),

(26, 27, 31, 30, 42, 43, 47, 46),

(27, 28, 32, 31, 43, 44, 48, 47),

(33, 34, 38, 37, 49, 50, 54, 53),

(34, 35, 39, 38, 50, 51, 55, 54),

(35, 36, 40, 39, 51, 52, 56, 55),

(37, 38, 42, 41, 53, 54, 58, 57),

(38, 39, 43, 42, 54, 55, 59, 58),

(39, 40, 44, 43, 55, 56, 60, 59),

(41, 42, 46, 45, 57, 58, 62, 61),

(42, 43, 47, 46, 58, 59, 63, 62),

(43, 44, 48, 47, 59, 60, 64, 63),

)

gold_mesh_lattice_connectivity = (

(

11,

(1, 2, 6, 5, 17, 18, 22, 21),

(2, 3, 7, 6, 18, 19, 23, 22),

(3, 4, 8, 7, 19, 20, 24, 23),

(5, 6, 10, 9, 21, 22, 26, 25),

(6, 7, 11, 10, 22, 23, 27, 26),

(7, 8, 12, 11, 23, 24, 28, 27),

(9, 10, 14, 13, 25, 26, 30, 29),

(10, 11, 15, 14, 26, 27, 31, 30),

(11, 12, 16, 15, 27, 28, 32, 31),

(17, 18, 22, 21, 33, 34, 38, 37),

(18, 19, 23, 22, 34, 35, 39, 38),

(19, 20, 24, 23, 35, 36, 40, 39),

(21, 22, 26, 25, 37, 38, 42, 41),

(23, 24, 28, 27, 39, 40, 44, 43),

(25, 26, 30, 29, 41, 42, 46, 45),

(26, 27, 31, 30, 42, 43, 47, 46),

(27, 28, 32, 31, 43, 44, 48, 47),

(33, 34, 38, 37, 49, 50, 54, 53),

(34, 35, 39, 38, 50, 51, 55, 54),

(35, 36, 40, 39, 51, 52, 56, 55),

(37, 38, 42, 41, 53, 54, 58, 57),

(38, 39, 43, 42, 54, 55, 59, 58),

(39, 40, 44, 43, 55, 56, 60, 59),

(41, 42, 46, 45, 57, 58, 62, 61),

(42, 43, 47, 46, 58, 59, 63, 62),

(43, 44, 48, 47, 59, 60, 64, 63),

),

(

88,

(22, 23, 27, 26, 38, 39, 43, 42),

),

)

gold_mesh_element_connectivity = (

(

11,

(1, 2, 6, 5, 17, 18, 22, 21),

(2, 3, 7, 6, 18, 19, 23, 22),

(3, 4, 8, 7, 19, 20, 24, 23),

(5, 6, 10, 9, 21, 22, 26, 25),

(6, 7, 11, 10, 22, 23, 27, 26),

(7, 8, 12, 11, 23, 24, 28, 27),

(9, 10, 14, 13, 25, 26, 30, 29),

(10, 11, 15, 14, 26, 27, 31, 30),

(11, 12, 16, 15, 27, 28, 32, 31),

(17, 18, 22, 21, 33, 34, 38, 37),

(18, 19, 23, 22, 34, 35, 39, 38),

(19, 20, 24, 23, 35, 36, 40, 39),

(21, 22, 26, 25, 37, 38, 42, 41),

(23, 24, 28, 27, 39, 40, 44, 43),

(25, 26, 30, 29, 41, 42, 46, 45),

(26, 27, 31, 30, 42, 43, 47, 46),

(27, 28, 32, 31, 43, 44, 48, 47),

(33, 34, 38, 37, 49, 50, 54, 53),

(34, 35, 39, 38, 50, 51, 55, 54),

(35, 36, 40, 39, 51, 52, 56, 55),

(37, 38, 42, 41, 53, 54, 58, 57),

(38, 39, 43, 42, 54, 55, 59, 58),

(39, 40, 44, 43, 55, 56, 60, 59),

(41, 42, 46, 45, 57, 58, 62, 61),

(42, 43, 47, 46, 58, 59, 63, 62),

(43, 44, 48, 47, 59, 60, 64, 63),

),

(

88,

(22, 23, 27, 26, 38, 39, 43, 42),

),

)

class Bracket(Example):

"""An L-shape bracket in the xy plane."""

figure_title: str = COMMON_TITLE + "Bracket"

file_stem: str = "bracket"

segmentation = np.array(

[

[

[1, 1, 1, 1],

[1, 1, 1, 1],

[1, 1, 0, 0],

[1, 1, 0, 0],

],

]

)

included_ids = (1,)

gold_lattice = (

(1, 2, 7, 6, 26, 27, 32, 31),

(2, 3, 8, 7, 27, 28, 33, 32),

(3, 4, 9, 8, 28, 29, 34, 33),

(4, 5, 10, 9, 29, 30, 35, 34),

(6, 7, 12, 11, 31, 32, 37, 36),

(7, 8, 13, 12, 32, 33, 38, 37),

(8, 9, 14, 13, 33, 34, 39, 38),

(9, 10, 15, 14, 34, 35, 40, 39),

(11, 12, 17, 16, 36, 37, 42, 41),

(12, 13, 18, 17, 37, 38, 43, 42),

(13, 14, 19, 18, 38, 39, 44, 43),

(14, 15, 20, 19, 39, 40, 45, 44),

(16, 17, 22, 21, 41, 42, 47, 46),

(17, 18, 23, 22, 42, 43, 48, 47),

(18, 19, 24, 23, 43, 44, 49, 48),

(19, 20, 25, 24, 44, 45, 50, 49),

)

gold_mesh_lattice_connectivity = (

(

1,

(1, 2, 7, 6, 26, 27, 32, 31),

(2, 3, 8, 7, 27, 28, 33, 32),

(3, 4, 9, 8, 28, 29, 34, 33),

(4, 5, 10, 9, 29, 30, 35, 34),

(6, 7, 12, 11, 31, 32, 37, 36),

(7, 8, 13, 12, 32, 33, 38, 37),

(8, 9, 14, 13, 33, 34, 39, 38),

(9, 10, 15, 14, 34, 35, 40, 39),

(11, 12, 17, 16, 36, 37, 42, 41),

(12, 13, 18, 17, 37, 38, 43, 42),

(16, 17, 22, 21, 41, 42, 47, 46),

(17, 18, 23, 22, 42, 43, 48, 47),

),

)

gold_mesh_element_connectivity = (

(

1,

(1, 2, 7, 6, 22, 23, 28, 27),

(2, 3, 8, 7, 23, 24, 29, 28),

(3, 4, 9, 8, 24, 25, 30, 29),

(4, 5, 10, 9, 25, 26, 31, 30),

(6, 7, 12, 11, 27, 28, 33, 32),

(7, 8, 13, 12, 28, 29, 34, 33),

(8, 9, 14, 13, 29, 30, 35, 34),

(9, 10, 15, 14, 30, 31, 36, 35),

(11, 12, 17, 16, 32, 33, 38, 37),

(12, 13, 18, 17, 33, 34, 39, 38),

(16, 17, 20, 19, 37, 38, 41, 40),

(17, 18, 21, 20, 38, 39, 42, 41),

),

)

class LetterF(Example):

"""A minimal letter F example."""

figure_title: str = COMMON_TITLE + "LetterF"

file_stem: str = "letter_f"

segmentation = np.array(

[

[

[

11,

0,

0,

],

[

11,

0,

0,

],

[

11,

11,

0,

],

[

11,

0,

0,

],

[

11,

11,

11,

],

],

],

dtype=np.uint8,

)

included_ids = (11,)

gold_lattice = (

(1, 2, 6, 5, 25, 26, 30, 29),

(2, 3, 7, 6, 26, 27, 31, 30),

(3, 4, 8, 7, 27, 28, 32, 31),

(5, 6, 10, 9, 29, 30, 34, 33),

(6, 7, 11, 10, 30, 31, 35, 34),

(7, 8, 12, 11, 31, 32, 36, 35),

(9, 10, 14, 13, 33, 34, 38, 37),

(10, 11, 15, 14, 34, 35, 39, 38),

(11, 12, 16, 15, 35, 36, 40, 39),

(13, 14, 18, 17, 37, 38, 42, 41),

(14, 15, 19, 18, 38, 39, 43, 42),

(15, 16, 20, 19, 39, 40, 44, 43),

(17, 18, 22, 21, 41, 42, 46, 45),

(18, 19, 23, 22, 42, 43, 47, 46),

(19, 20, 24, 23, 43, 44, 48, 47),

)

gold_mesh_lattice_connectivity = (

(

11,

(1, 2, 6, 5, 25, 26, 30, 29),

# (2, 3, 7, 6, 26, 27, 31, 30),

# (3, 4, 8, 7, 27, 28, 32, 31),

(5, 6, 10, 9, 29, 30, 34, 33),

# (6, 7, 11, 10, 30, 31, 35, 34),

# (7, 8, 12, 11, 31, 32, 36, 35),

(9, 10, 14, 13, 33, 34, 38, 37),

(10, 11, 15, 14, 34, 35, 39, 38),

# (11, 12, 16, 15, 35, 36, 40, 39),

(13, 14, 18, 17, 37, 38, 42, 41),

# (14, 15, 19, 18, 38, 39, 43, 42),

# (15, 16, 20, 19, 39, 40, 44, 43),

(17, 18, 22, 21, 41, 42, 46, 45),

(18, 19, 23, 22, 42, 43, 47, 46),

(19, 20, 24, 23, 43, 44, 48, 47),

),

)

gold_mesh_element_connectivity = (

(

11,

(1, 2, 4, 3, 19, 20, 22, 21),

#

#

(3, 4, 6, 5, 21, 22, 24, 23),

#

#

(5, 6, 9, 8, 23, 24, 27, 26),

(6, 7, 10, 9, 24, 25, 28, 27),

#

(8, 9, 12, 11, 26, 27, 30, 29),

#

#

(11, 12, 16, 15, 29, 30, 34, 33),

(12, 13, 17, 16, 30, 31, 35, 34),

(13, 14, 18, 17, 31, 32, 36, 35),

),

)

class LetterF3D(Example):

"""A three dimensional variation of the letter F, in a non-standard

orientation.

"""

figure_title: str = COMMON_TITLE + "LetterF3D"

file_stem: str = "letter_f_3d"

segmentation = np.array(

[

[

[1, 1, 1, 1],

[1, 1, 1, 1],

[1, 1, 1, 1],

[1, 1, 1, 1],

[1, 1, 1, 1],

],

[

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 1, 1, 1],

[1, 0, 0, 0],

[1, 1, 1, 1],

],

[

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 1, 1, 1],

],

],

dtype=np.uint8,

)

included_ids = (1,)

gold_lattice = (

(1, 2, 7, 6, 31, 32, 37, 36),

(2, 3, 8, 7, 32, 33, 38, 37),

(3, 4, 9, 8, 33, 34, 39, 38),

(4, 5, 10, 9, 34, 35, 40, 39),

(6, 7, 12, 11, 36, 37, 42, 41),

(7, 8, 13, 12, 37, 38, 43, 42),

(8, 9, 14, 13, 38, 39, 44, 43),

(9, 10, 15, 14, 39, 40, 45, 44),

(11, 12, 17, 16, 41, 42, 47, 46),

(12, 13, 18, 17, 42, 43, 48, 47),

(13, 14, 19, 18, 43, 44, 49, 48),

(14, 15, 20, 19, 44, 45, 50, 49),

(16, 17, 22, 21, 46, 47, 52, 51),

(17, 18, 23, 22, 47, 48, 53, 52),

(18, 19, 24, 23, 48, 49, 54, 53),

(19, 20, 25, 24, 49, 50, 55, 54),

(21, 22, 27, 26, 51, 52, 57, 56),

(22, 23, 28, 27, 52, 53, 58, 57),

(23, 24, 29, 28, 53, 54, 59, 58),

(24, 25, 30, 29, 54, 55, 60, 59),

(31, 32, 37, 36, 61, 62, 67, 66),

(32, 33, 38, 37, 62, 63, 68, 67),

(33, 34, 39, 38, 63, 64, 69, 68),

(34, 35, 40, 39, 64, 65, 70, 69),

(36, 37, 42, 41, 66, 67, 72, 71),

(37, 38, 43, 42, 67, 68, 73, 72),

(38, 39, 44, 43, 68, 69, 74, 73),

(39, 40, 45, 44, 69, 70, 75, 74),

(41, 42, 47, 46, 71, 72, 77, 76),

(42, 43, 48, 47, 72, 73, 78, 77),

(43, 44, 49, 48, 73, 74, 79, 78),

(44, 45, 50, 49, 74, 75, 80, 79),

(46, 47, 52, 51, 76, 77, 82, 81),

(47, 48, 53, 52, 77, 78, 83, 82),

(48, 49, 54, 53, 78, 79, 84, 83),

(49, 50, 55, 54, 79, 80, 85, 84),

(51, 52, 57, 56, 81, 82, 87, 86),

(52, 53, 58, 57, 82, 83, 88, 87),

(53, 54, 59, 58, 83, 84, 89, 88),

(54, 55, 60, 59, 84, 85, 90, 89),

(61, 62, 67, 66, 91, 92, 97, 96),

(62, 63, 68, 67, 92, 93, 98, 97),

(63, 64, 69, 68, 93, 94, 99, 98),

(64, 65, 70, 69, 94, 95, 100, 99),

(66, 67, 72, 71, 96, 97, 102, 101),

(67, 68, 73, 72, 97, 98, 103, 102),

(68, 69, 74, 73, 98, 99, 104, 103),

(69, 70, 75, 74, 99, 100, 105, 104),

(71, 72, 77, 76, 101, 102, 107, 106),

(72, 73, 78, 77, 102, 103, 108, 107),

(73, 74, 79, 78, 103, 104, 109, 108),

(74, 75, 80, 79, 104, 105, 110, 109),

(76, 77, 82, 81, 106, 107, 112, 111),

(77, 78, 83, 82, 107, 108, 113, 112),

(78, 79, 84, 83, 108, 109, 114, 113),

(79, 80, 85, 84, 109, 110, 115, 114),

(81, 82, 87, 86, 111, 112, 117, 116),

(82, 83, 88, 87, 112, 113, 118, 117),

(83, 84, 89, 88, 113, 114, 119, 118),

(84, 85, 90, 89, 114, 115, 120, 119),

)

gold_mesh_lattice_connectivity = (

(

1,

(1, 2, 7, 6, 31, 32, 37, 36),

(2, 3, 8, 7, 32, 33, 38, 37),

(3, 4, 9, 8, 33, 34, 39, 38),

(4, 5, 10, 9, 34, 35, 40, 39),

(6, 7, 12, 11, 36, 37, 42, 41),

(7, 8, 13, 12, 37, 38, 43, 42),

(8, 9, 14, 13, 38, 39, 44, 43),

(9, 10, 15, 14, 39, 40, 45, 44),

(11, 12, 17, 16, 41, 42, 47, 46),

(12, 13, 18, 17, 42, 43, 48, 47),

(13, 14, 19, 18, 43, 44, 49, 48),

(14, 15, 20, 19, 44, 45, 50, 49),

(16, 17, 22, 21, 46, 47, 52, 51),

(17, 18, 23, 22, 47, 48, 53, 52),

(18, 19, 24, 23, 48, 49, 54, 53),

(19, 20, 25, 24, 49, 50, 55, 54),

(21, 22, 27, 26, 51, 52, 57, 56),

(22, 23, 28, 27, 52, 53, 58, 57),

(23, 24, 29, 28, 53, 54, 59, 58),

(24, 25, 30, 29, 54, 55, 60, 59),

(31, 32, 37, 36, 61, 62, 67, 66),

(36, 37, 42, 41, 66, 67, 72, 71),

(41, 42, 47, 46, 71, 72, 77, 76),

(42, 43, 48, 47, 72, 73, 78, 77),

(43, 44, 49, 48, 73, 74, 79, 78),

(44, 45, 50, 49, 74, 75, 80, 79),

(46, 47, 52, 51, 76, 77, 82, 81),

(51, 52, 57, 56, 81, 82, 87, 86),

(52, 53, 58, 57, 82, 83, 88, 87),

(53, 54, 59, 58, 83, 84, 89, 88),

(54, 55, 60, 59, 84, 85, 90, 89),

(61, 62, 67, 66, 91, 92, 97, 96),

(66, 67, 72, 71, 96, 97, 102, 101),

(71, 72, 77, 76, 101, 102, 107, 106),

(76, 77, 82, 81, 106, 107, 112, 111),

(81, 82, 87, 86, 111, 112, 117, 116),

(82, 83, 88, 87, 112, 113, 118, 117),

(83, 84, 89, 88, 113, 114, 119, 118),

(84, 85, 90, 89, 114, 115, 120, 119),

),

)

gold_mesh_element_connectivity = (

(

1,

(1, 2, 7, 6, 31, 32, 37, 36),

(2, 3, 8, 7, 32, 33, 38, 37),

(3, 4, 9, 8, 33, 34, 39, 38),

(4, 5, 10, 9, 34, 35, 40, 39),

(6, 7, 12, 11, 36, 37, 42, 41),

(7, 8, 13, 12, 37, 38, 43, 42),

(8, 9, 14, 13, 38, 39, 44, 43),

(9, 10, 15, 14, 39, 40, 45, 44),

(11, 12, 17, 16, 41, 42, 47, 46),

(12, 13, 18, 17, 42, 43, 48, 47),

(13, 14, 19, 18, 43, 44, 49, 48),

(14, 15, 20, 19, 44, 45, 50, 49),

(16, 17, 22, 21, 46, 47, 52, 51),

(17, 18, 23, 22, 47, 48, 53, 52),

(18, 19, 24, 23, 48, 49, 54, 53),

(19, 20, 25, 24, 49, 50, 55, 54),

(21, 22, 27, 26, 51, 52, 57, 56),

(22, 23, 28, 27, 52, 53, 58, 57),

(23, 24, 29, 28, 53, 54, 59, 58),

(24, 25, 30, 29, 54, 55, 60, 59),

(31, 32, 37, 36, 61, 62, 64, 63),

(36, 37, 42, 41, 63, 64, 66, 65),

(41, 42, 47, 46, 65, 66, 71, 70),

(42, 43, 48, 47, 66, 67, 72, 71),

(43, 44, 49, 48, 67, 68, 73, 72),

(44, 45, 50, 49, 68, 69, 74, 73),

(46, 47, 52, 51, 70, 71, 76, 75),

(51, 52, 57, 56, 75, 76, 81, 80),

(52, 53, 58, 57, 76, 77, 82, 81),

(53, 54, 59, 58, 77, 78, 83, 82),

(54, 55, 60, 59, 78, 79, 84, 83),

(61, 62, 64, 63, 85, 86, 88, 87),

(63, 64, 66, 65, 87, 88, 90, 89),

(65, 66, 71, 70, 89, 90, 92, 91),

(70, 71, 76, 75, 91, 92, 94, 93),

(75, 76, 81, 80, 93, 94, 99, 98),

(76, 77, 82, 81, 94, 95, 100, 99),

(77, 78, 83, 82, 95, 96, 101, 100),

(78, 79, 84, 83, 96, 97, 102, 101),

),

)

class Sparse(Example):

"""A radomized 5x5x5 segmentation."""

figure_title: str = COMMON_TITLE + "Sparse"

file_stem: str = "sparse"

segmentation = np.array(

[

[

[0, 0, 0, 0, 2],

[0, 1, 0, 0, 2],

[1, 2, 0, 2, 0],

[0, 1, 0, 2, 0],

[1, 0, 0, 0, 1],

],

[

[2, 0, 2, 0, 0],

[1, 1, 0, 2, 2],

[2, 0, 0, 0, 0],

[1, 0, 0, 2, 0],

[2, 0, 2, 0, 2],

],

[

[0, 0, 1, 0, 2],

[0, 0, 0, 1, 2],

[0, 0, 2, 2, 2],

[0, 0, 1, 0, 1],

[0, 1, 0, 1, 0],

],

[

[0, 1, 2, 1, 2],

[2, 0, 2, 0, 1],

[1, 2, 2, 0, 0],

[2, 1, 1, 1, 1],

[0, 0, 1, 0, 0],

],

[

[0, 1, 0, 2, 0],

[1, 0, 0, 0, 2],

[0, 1, 0, 0, 0],

[1, 0, 0, 0, 0],

[0, 0, 1, 2, 1],

],

],

dtype=np.uint8,

)

included_ids = (

1,

2,

)

gold_lattice = (

(1, 2, 8, 7, 37, 38, 44, 43),

(2, 3, 9, 8, 38, 39, 45, 44),

(3, 4, 10, 9, 39, 40, 46, 45),

(4, 5, 11, 10, 40, 41, 47, 46),

(5, 6, 12, 11, 41, 42, 48, 47),

(7, 8, 14, 13, 43, 44, 50, 49),

(8, 9, 15, 14, 44, 45, 51, 50),

(9, 10, 16, 15, 45, 46, 52, 51),

(10, 11, 17, 16, 46, 47, 53, 52),

(11, 12, 18, 17, 47, 48, 54, 53),

(13, 14, 20, 19, 49, 50, 56, 55),

(14, 15, 21, 20, 50, 51, 57, 56),

(15, 16, 22, 21, 51, 52, 58, 57),

(16, 17, 23, 22, 52, 53, 59, 58),

(17, 18, 24, 23, 53, 54, 60, 59),

(19, 20, 26, 25, 55, 56, 62, 61),

(20, 21, 27, 26, 56, 57, 63, 62),

(21, 22, 28, 27, 57, 58, 64, 63),

(22, 23, 29, 28, 58, 59, 65, 64),

(23, 24, 30, 29, 59, 60, 66, 65),

(25, 26, 32, 31, 61, 62, 68, 67),

(26, 27, 33, 32, 62, 63, 69, 68),

(27, 28, 34, 33, 63, 64, 70, 69),

(28, 29, 35, 34, 64, 65, 71, 70),

(29, 30, 36, 35, 65, 66, 72, 71),

(37, 38, 44, 43, 73, 74, 80, 79),

(38, 39, 45, 44, 74, 75, 81, 80),

(39, 40, 46, 45, 75, 76, 82, 81),

(40, 41, 47, 46, 76, 77, 83, 82),

(41, 42, 48, 47, 77, 78, 84, 83),

(43, 44, 50, 49, 79, 80, 86, 85),

(44, 45, 51, 50, 80, 81, 87, 86),

(45, 46, 52, 51, 81, 82, 88, 87),

(46, 47, 53, 52, 82, 83, 89, 88),

(47, 48, 54, 53, 83, 84, 90, 89),

(49, 50, 56, 55, 85, 86, 92, 91),

(50, 51, 57, 56, 86, 87, 93, 92),

(51, 52, 58, 57, 87, 88, 94, 93),

(52, 53, 59, 58, 88, 89, 95, 94),

(53, 54, 60, 59, 89, 90, 96, 95),

(55, 56, 62, 61, 91, 92, 98, 97),

(56, 57, 63, 62, 92, 93, 99, 98),

(57, 58, 64, 63, 93, 94, 100, 99),

(58, 59, 65, 64, 94, 95, 101, 100),

(59, 60, 66, 65, 95, 96, 102, 101),

(61, 62, 68, 67, 97, 98, 104, 103),

(62, 63, 69, 68, 98, 99, 105, 104),

(63, 64, 70, 69, 99, 100, 106, 105),

(64, 65, 71, 70, 100, 101, 107, 106),

(65, 66, 72, 71, 101, 102, 108, 107),

(73, 74, 80, 79, 109, 110, 116, 115),

(74, 75, 81, 80, 110, 111, 117, 116),

(75, 76, 82, 81, 111, 112, 118, 117),

(76, 77, 83, 82, 112, 113, 119, 118),

(77, 78, 84, 83, 113, 114, 120, 119),

(79, 80, 86, 85, 115, 116, 122, 121),

(80, 81, 87, 86, 116, 117, 123, 122),

(81, 82, 88, 87, 117, 118, 124, 123),

(82, 83, 89, 88, 118, 119, 125, 124),

(83, 84, 90, 89, 119, 120, 126, 125),

(85, 86, 92, 91, 121, 122, 128, 127),

(86, 87, 93, 92, 122, 123, 129, 128),

(87, 88, 94, 93, 123, 124, 130, 129),

(88, 89, 95, 94, 124, 125, 131, 130),

(89, 90, 96, 95, 125, 126, 132, 131),

(91, 92, 98, 97, 127, 128, 134, 133),

(92, 93, 99, 98, 128, 129, 135, 134),

(93, 94, 100, 99, 129, 130, 136, 135),

(94, 95, 101, 100, 130, 131, 137, 136),

(95, 96, 102, 101, 131, 132, 138, 137),

(97, 98, 104, 103, 133, 134, 140, 139),

(98, 99, 105, 104, 134, 135, 141, 140),

(99, 100, 106, 105, 135, 136, 142, 141),

(100, 101, 107, 106, 136, 137, 143, 142),

(101, 102, 108, 107, 137, 138, 144, 143),

(109, 110, 116, 115, 145, 146, 152, 151),

(110, 111, 117, 116, 146, 147, 153, 152),

(111, 112, 118, 117, 147, 148, 154, 153),

(112, 113, 119, 118, 148, 149, 155, 154),

(113, 114, 120, 119, 149, 150, 156, 155),

(115, 116, 122, 121, 151, 152, 158, 157),

(116, 117, 123, 122, 152, 153, 159, 158),

(117, 118, 124, 123, 153, 154, 160, 159),

(118, 119, 125, 124, 154, 155, 161, 160),

(119, 120, 126, 125, 155, 156, 162, 161),

(121, 122, 128, 127, 157, 158, 164, 163),

(122, 123, 129, 128, 158, 159, 165, 164),

(123, 124, 130, 129, 159, 160, 166, 165),

(124, 125, 131, 130, 160, 161, 167, 166),

(125, 126, 132, 131, 161, 162, 168, 167),

(127, 128, 134, 133, 163, 164, 170, 169),

(128, 129, 135, 134, 164, 165, 171, 170),

(129, 130, 136, 135, 165, 166, 172, 171),

(130, 131, 137, 136, 166, 167, 173, 172),

(131, 132, 138, 137, 167, 168, 174, 173),

(133, 134, 140, 139, 169, 170, 176, 175),

(134, 135, 141, 140, 170, 171, 177, 176),

(135, 136, 142, 141, 171, 172, 178, 177),

(136, 137, 143, 142, 172, 173, 179, 178),

(137, 138, 144, 143, 173, 174, 180, 179),

(145, 146, 152, 151, 181, 182, 188, 187),

(146, 147, 153, 152, 182, 183, 189, 188),

(147, 148, 154, 153, 183, 184, 190, 189),

(148, 149, 155, 154, 184, 185, 191, 190),

(149, 150, 156, 155, 185, 186, 192, 191),

(151, 152, 158, 157, 187, 188, 194, 193),

(152, 153, 159, 158, 188, 189, 195, 194),

(153, 154, 160, 159, 189, 190, 196, 195),

(154, 155, 161, 160, 190, 191, 197, 196),

(155, 156, 162, 161, 191, 192, 198, 197),

(157, 158, 164, 163, 193, 194, 200, 199),

(158, 159, 165, 164, 194, 195, 201, 200),

(159, 160, 166, 165, 195, 196, 202, 201),

(160, 161, 167, 166, 196, 197, 203, 202),

(161, 162, 168, 167, 197, 198, 204, 203),

(163, 164, 170, 169, 199, 200, 206, 205),

(164, 165, 171, 170, 200, 201, 207, 206),

(165, 166, 172, 171, 201, 202, 208, 207),

(166, 167, 173, 172, 202, 203, 209, 208),

(167, 168, 174, 173, 203, 204, 210, 209),

(169, 170, 176, 175, 205, 206, 212, 211),

(170, 171, 177, 176, 206, 207, 213, 212),

(171, 172, 178, 177, 207, 208, 214, 213),

(172, 173, 179, 178, 208, 209, 215, 214),

(173, 174, 180, 179, 209, 210, 216, 215),

)

gold_mesh_lattice_connectivity = (

(

1,

(8, 9, 15, 14, 44, 45, 51, 50),

(13, 14, 20, 19, 49, 50, 56, 55),

(20, 21, 27, 26, 56, 57, 63, 62),

(25, 26, 32, 31, 61, 62, 68, 67),

(29, 30, 36, 35, 65, 66, 72, 71),

(43, 44, 50, 49, 79, 80, 86, 85),

(44, 45, 51, 50, 80, 81, 87, 86),

(55, 56, 62, 61, 91, 92, 98, 97),

(75, 76, 82, 81, 111, 112, 118, 117),

(82, 83, 89, 88, 118, 119, 125, 124),

(93, 94, 100, 99, 129, 130, 136, 135),

(95, 96, 102, 101, 131, 132, 138, 137),

(98, 99, 105, 104, 134, 135, 141, 140),

(100, 101, 107, 106, 136, 137, 143, 142),

(110, 111, 117, 116, 146, 147, 153, 152),

(112, 113, 119, 118, 148, 149, 155, 154),

(119, 120, 126, 125, 155, 156, 162, 161),

(121, 122, 128, 127, 157, 158, 164, 163),

(128, 129, 135, 134, 164, 165, 171, 170),

(129, 130, 136, 135, 165, 166, 172, 171),

(130, 131, 137, 136, 166, 167, 173, 172),

(131, 132, 138, 137, 167, 168, 174, 173),

(135, 136, 142, 141, 171, 172, 178, 177),

(146, 147, 153, 152, 182, 183, 189, 188),

(151, 152, 158, 157, 187, 188, 194, 193),

(158, 159, 165, 164, 194, 195, 201, 200),

(163, 164, 170, 169, 199, 200, 206, 205),

(171, 172, 178, 177, 207, 208, 214, 213),

(173, 174, 180, 179, 209, 210, 216, 215),

),

(

2,

(5, 6, 12, 11, 41, 42, 48, 47),

(11, 12, 18, 17, 47, 48, 54, 53),

(14, 15, 21, 20, 50, 51, 57, 56),

(16, 17, 23, 22, 52, 53, 59, 58),

(22, 23, 29, 28, 58, 59, 65, 64),

(37, 38, 44, 43, 73, 74, 80, 79),

(39, 40, 46, 45, 75, 76, 82, 81),

(46, 47, 53, 52, 82, 83, 89, 88),

(47, 48, 54, 53, 83, 84, 90, 89),

(49, 50, 56, 55, 85, 86, 92, 91),

(58, 59, 65, 64, 94, 95, 101, 100),

(61, 62, 68, 67, 97, 98, 104, 103),

(63, 64, 70, 69, 99, 100, 106, 105),

(65, 66, 72, 71, 101, 102, 108, 107),

(77, 78, 84, 83, 113, 114, 120, 119),

(83, 84, 90, 89, 119, 120, 126, 125),

(87, 88, 94, 93, 123, 124, 130, 129),

(88, 89, 95, 94, 124, 125, 131, 130),

(89, 90, 96, 95, 125, 126, 132, 131),

(111, 112, 118, 117, 147, 148, 154, 153),

(113, 114, 120, 119, 149, 150, 156, 155),

(115, 116, 122, 121, 151, 152, 158, 157),

(117, 118, 124, 123, 153, 154, 160, 159),

(122, 123, 129, 128, 158, 159, 165, 164),

(123, 124, 130, 129, 159, 160, 166, 165),

(127, 128, 134, 133, 163, 164, 170, 169),

(148, 149, 155, 154, 184, 185, 191, 190),

(155, 156, 162, 161, 191, 192, 198, 197),

(172, 173, 179, 178, 208, 209, 215, 214),

),

)

gold_mesh_element_connectivity = (

(

1,

(3, 4, 9, 8, 35, 36, 42, 41),

(7, 8, 14, 13, 40, 41, 47, 46),

(14, 15, 20, 19, 47, 48, 53, 52),

(18, 19, 25, 24, 51, 52, 58, 57),

(22, 23, 27, 26, 55, 56, 62, 61),

(34, 35, 41, 40, 69, 70, 76, 75),

(35, 36, 42, 41, 70, 71, 77, 76),

(46, 47, 52, 51, 81, 82, 88, 87),

(65, 66, 72, 71, 100, 101, 107, 106),

(72, 73, 79, 78, 107, 108, 114, 113),

(83, 84, 90, 89, 118, 119, 125, 124),

(85, 86, 92, 91, 120, 121, 127, 126),

(88, 89, 95, 94, 123, 124, 129, 128),

(90, 91, 97, 96, 125, 126, 131, 130),

(99, 100, 106, 105, 132, 133, 139, 138),

(101, 102, 108, 107, 134, 135, 141, 140),

(108, 109, 115, 114, 141, 142, 148, 147),

(110, 111, 117, 116, 143, 144, 150, 149),

(117, 118, 124, 123, 150, 151, 157, 156),

(118, 119, 125, 124, 151, 152, 158, 157),

(119, 120, 126, 125, 152, 153, 159, 158),

(120, 121, 127, 126, 153, 154, 160, 159),

(124, 125, 130, 129, 157, 158, 162, 161),

(132, 133, 139, 138, 165, 166, 171, 170),

(137, 138, 144, 143, 169, 170, 176, 175),

(144, 145, 151, 150, 176, 177, 182, 181),

(149, 150, 156, 155, 180, 181, 184, 183),

(157, 158, 162, 161, 185, 186, 190, 189),

(159, 160, 164, 163, 187, 188, 192, 191),

),

(

2,

(1, 2, 6, 5, 32, 33, 39, 38),

(5, 6, 12, 11, 38, 39, 45, 44),

(8, 9, 15, 14, 41, 42, 48, 47),

(10, 11, 17, 16, 43, 44, 50, 49),

(16, 17, 22, 21, 49, 50, 55, 54),

(28, 29, 35, 34, 63, 64, 70, 69),

(30, 31, 37, 36, 65, 66, 72, 71),

(37, 38, 44, 43, 72, 73, 79, 78),

(38, 39, 45, 44, 73, 74, 80, 79),

(40, 41, 47, 46, 75, 76, 82, 81),

(49, 50, 55, 54, 84, 85, 91, 90),

(51, 52, 58, 57, 87, 88, 94, 93),

(53, 54, 60, 59, 89, 90, 96, 95),

(55, 56, 62, 61, 91, 92, 98, 97),

(67, 68, 74, 73, 102, 103, 109, 108),

(73, 74, 80, 79, 108, 109, 115, 114),

(77, 78, 84, 83, 112, 113, 119, 118),

(78, 79, 85, 84, 113, 114, 120, 119),

(79, 80, 86, 85, 114, 115, 121, 120),

(100, 101, 107, 106, 133, 134, 140, 139),

(102, 103, 109, 108, 135, 136, 142, 141),

(104, 105, 111, 110, 137, 138, 144, 143),

(106, 107, 113, 112, 139, 140, 146, 145),

(111, 112, 118, 117, 144, 145, 151, 150),

(112, 113, 119, 118, 145, 146, 152, 151),

(116, 117, 123, 122, 149, 150, 156, 155),

(134, 135, 141, 140, 167, 168, 173, 172),

(141, 142, 148, 147, 173, 174, 179, 178),

(158, 159, 163, 162, 186, 187, 191, 190),

),

)
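The gold connectivity above is stored as nested tuples: each block is a tuple whose first entry is the block id and whose remaining entries are eight-node hexahedral elements. As a minimal sketch of how such a structure can be traversed, the following uses a small hypothetical two-element mesh rather than the gold data. The helper names `elements_per_block` and `unique_nodes` are illustrative assumptions and are not part of the automesh examples.

```python
# Hypothetical two-block mesh in the same nested-tuple layout:
# each block is (block_id, element, element, ...), and each element
# is a tuple of eight node numbers.
mesh = (
    (1, (1, 2, 5, 4, 7, 8, 11, 10)),
    (2, (2, 3, 6, 5, 8, 9, 12, 11)),
)


def elements_per_block(mesh: tuple) -> dict:
    """Map each block id to the number of elements in that block."""
    # block[0] is the block id; block[1:] are the elements
    return {block[0]: len(block[1:]) for block in mesh}


def unique_nodes(mesh: tuple) -> tuple:
    """Return the sorted, unique node numbers used by all elements."""
    nodes = set()
    for block in mesh:
        for element in block[1:]:
            nodes.update(element)
    return tuple(sorted(nodes))


print(elements_per_block(mesh))  # {1: 1, 2: 1}
print(unique_nodes(mesh))  # (1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12)
```

The two elements share the four nodes 2, 5, 8, and 11, so the twelve unique nodes are fewer than the sixteen node entries listed across both elements.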

examples_figures.py

r"""This module, examples_figures.py, demonstrates creating a pixel slice in
the (x, y) plane, and then appending layers along the z axis, to create a 3D
voxel lattice, as a precursor for a hexahedral finite element mesh.

Example
-------
source ~/autotwin/automesh/.venv/bin/activate
pip install matplotlib
cd ~/autotwin/automesh/book/examples/unit_tests
python examples_figures.py

Output
------
The `output_npy` segmentation data files
The `output_png` visualization files
"""

# standard library
import datetime
from pathlib import Path
from typing import Final

# third-party libraries
import matplotlib.pyplot as plt
from matplotlib.colors import LightSource
import numpy as np
from numpy.typing import NDArray

# local modules
import examples_types as types
import examples_data as data

# Type aliases
Example = types.Example

def lattice_connectivity(ex: Example) -> NDArray[np.uint8]:
    """Given an Example, returns the lattice connectivity, after asserting
    that it matches the Example's gold lattice.
    """
    nz, ny, nx = ex.segmentation.shape
    nzp, nyp, nxp = nz + 1, ny + 1, nx + 1

    # Generate the lattice nodes
    lattice_nodes = []
    lattice_node = 0
    for k in range(nzp):
        for j in range(nyp):
            for i in range(nxp):
                lattice_node += 1
                lattice_nodes.append([lattice_node, i, j, k])

    # connectivity for each voxel
    cvs = []
    offset = 0

    # print("processing indices...")
    for iz in range(nz):
        for iy in range(ny):
            for ix in range(nx):
                # print(f"(ix, iy, iz) = ({ix}, {iy}, {iz})")
                # The first four nodes traverse the face at z = iz
                # counterclockwise; the last four traverse the face at
                # z = iz + 1 in the same order. Node numbers are 1-based.
                cv = offset + np.array(
                    [
                        (iz + 0) * (nxp * nyp) + (iy + 0) * nxp + ix + 1,
                        (iz + 0) * (nxp * nyp) + (iy + 0) * nxp + ix + 2,
                        (iz + 0) * (nxp * nyp) + (iy + 1) * nxp + ix + 2,
                        (iz + 0) * (nxp * nyp) + (iy + 1) * nxp + ix + 1,
                        (iz + 1) * (nxp * nyp) + (iy + 0) * nxp + ix + 1,
                        (iz + 1) * (nxp * nyp) + (iy + 0) * nxp + ix + 2,
                        (iz + 1) * (nxp * nyp) + (iy + 1) * nxp + ix + 2,
                        (iz + 1) * (nxp * nyp) + (iy + 1) * nxp + ix + 1,
                    ]
                )
                cvs.append(cv)

    cs = np.vstack(cvs)

    # voxel by voxel comparison
    # breakpoint()
    vv = ex.gold_lattice == cs
    assert np.all(vv)
    return cs

def mesh_lattice_connectivity(
    ex: Example,
    lattice: np.ndarray,
) -> tuple:
    """Given an Example (in particular, the Example's voxel data structure,
    a segmentation) and the `lattice_connectivity`, create the connectivity
    for the mesh with lattice node numbers. A voxel with a segmentation id not
    in the Example's included ids tuple is excluded from the mesh.
    """
    # segmentation = ex.segmentation.flatten().squeeze()
    segmentation = ex.segmentation.flatten()
    # breakpoint()

    # assert that every included id is present in the segmentation
    included_set_unordered = set(ex.included_ids)
    included_list_ordered = sorted(included_set_unordered)
    # breakpoint()
    seg_set = set(segmentation)
    for item in included_list_ordered:
        assert item in seg_set, (
            f"Error: `included_ids` item {item} is not in the segmentation"
        )

    # Create a list of finite elements from the lattice elements. If the
    # lattice element has a segmentation id that is not in the included_ids,
    # exclude the voxel element from the collected list to create the finite
    # element list
    blocks = ()  # empty tuple
    # breakpoint()
    for bb in included_list_ordered:
        # included_elements = []
        elements = ()  # empty tuple
        elements = elements + (bb,)  # insert the block number
        for i, element in enumerate(lattice):
            if bb == segmentation[i]:
                # breakpoint()
                elements = elements + (tuple(element.tolist()),)  # overwrite
        blocks = blocks + (elements,)  # overwrite

    # breakpoint()
    # return np.array(blocks)
    return blocks

def renumber(source: tuple, old: tuple, new: tuple) -> tuple:
    """Given a source tuple, composed of positive integers, and a tuple of
    `old` numbers that maps into `new` numbers, return the source tuple
    with the `new` numbers."""
    # the old and the new tuples must have the same length
    err = "Tuples `old` and `new` must have equal length."
    assert len(old) == len(new), err

    result = ()
    for item in source:
        idx = old.index(item)
        new_value = new[idx]
        result = result + (new_value,)
    return result

def mesh_element_connectivity(mesh_with_lattice_connectivity: tuple) -> tuple:
    """Given a mesh with lattice connectivity, return a mesh with finite
    element connectivity.
    """
    # create a list of unordered lattice node numbers
    ln = []
    for item in mesh_with_lattice_connectivity:
        # print(f"item is {item}")
        # The first item is the block number
        # block = item[0]
        # The second and onward items are the elements
        elements = item[1:]
        for element in elements:
            ln += list(element)

    ln_set = set(ln)  # sets are not necessarily ordered
    ln_ordered = tuple(sorted(ln_set))  # now these unique integers are ordered

    # and they will map into the new compressed unique integer list `mapsto`
    mapsto = tuple(range(1, len(ln_ordered) + 1))

    # now build a mesh_with_element_connectivity
    mesh = ()  # empty tuple
    # breakpoint()
    for item in mesh_with_lattice_connectivity:
        # The first item is the block number
        block_number = item[0]
        block_and_elements = ()  # empty tuple
        # insert the block number
        block_and_elements = block_and_elements + (block_number,)
        for element in item[1:]:
            new_element = renumber(source=element, old=ln_ordered, new=mapsto)
            # overwrite
            block_and_elements = block_and_elements + (new_element,)
        mesh = mesh + (block_and_elements,)  # overwrite
    return mesh

def flatten_tuple(t):
    """Uses recursion to convert nested tuples into a single-level tuple.

    Example:
        nested_tuple = (1, (2, 3), (4, (5, 6)), 7)
        flattened_tuple = flatten_tuple(nested_tuple)
        print(flattened_tuple)  # Output: (1, 2, 3, 4, 5, 6, 7)
    """
    flat_list = []
    for item in t:
        if isinstance(item, tuple):
            flat_list.extend(flatten_tuple(item))
        else:
            flat_list.append(item)
    # breakpoint()
    return tuple(flat_list)

def elements_without_block_ids(mesh: tuple) -> tuple:

"""Given a mesh, removes the block ids and returns only the

element connectivities.

"""

aa = ()

for item in mesh: